Improving Processes Through Additions and Upgrades to Automation Equipment

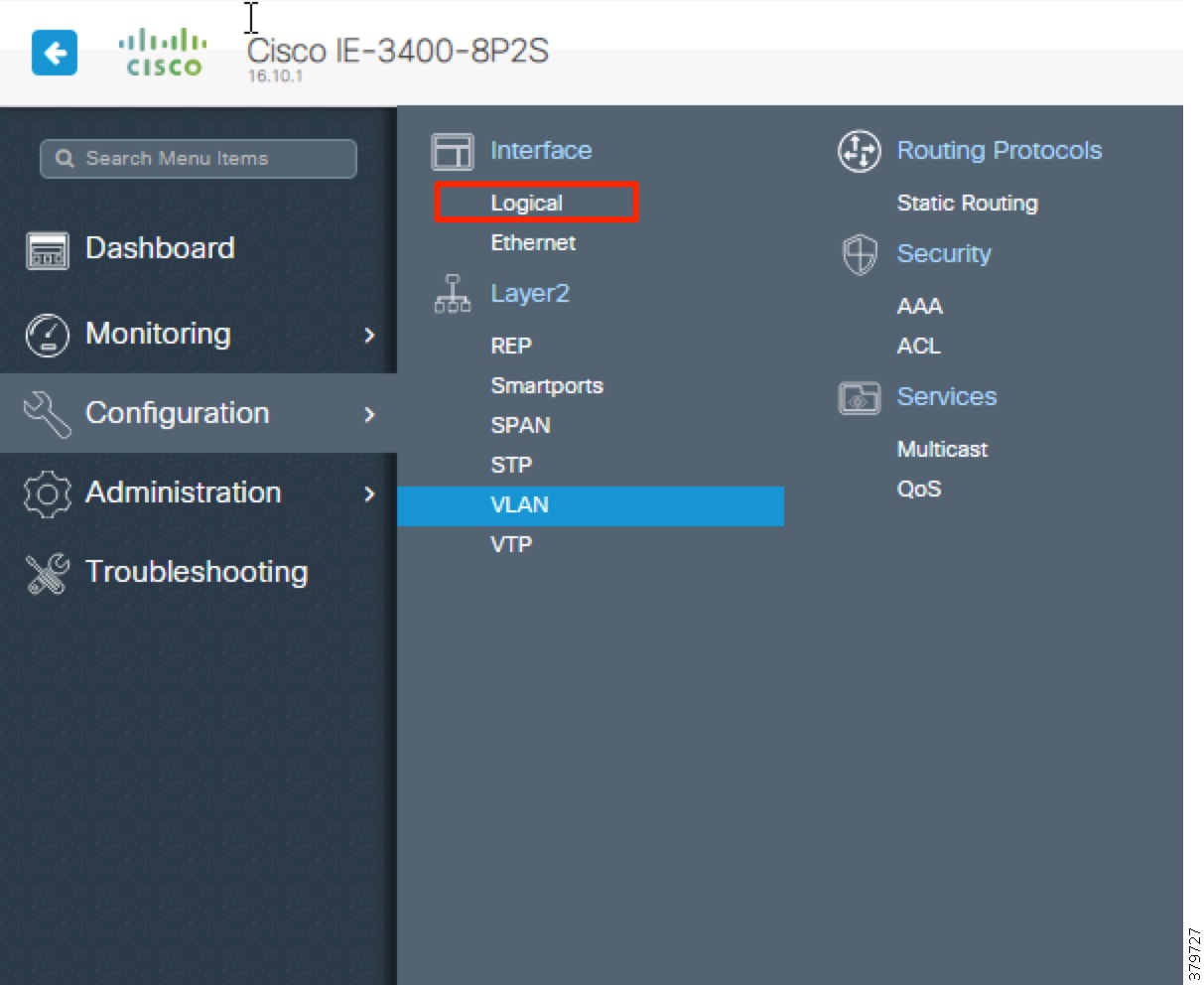

Networking and Security in Industrial Automation Environments

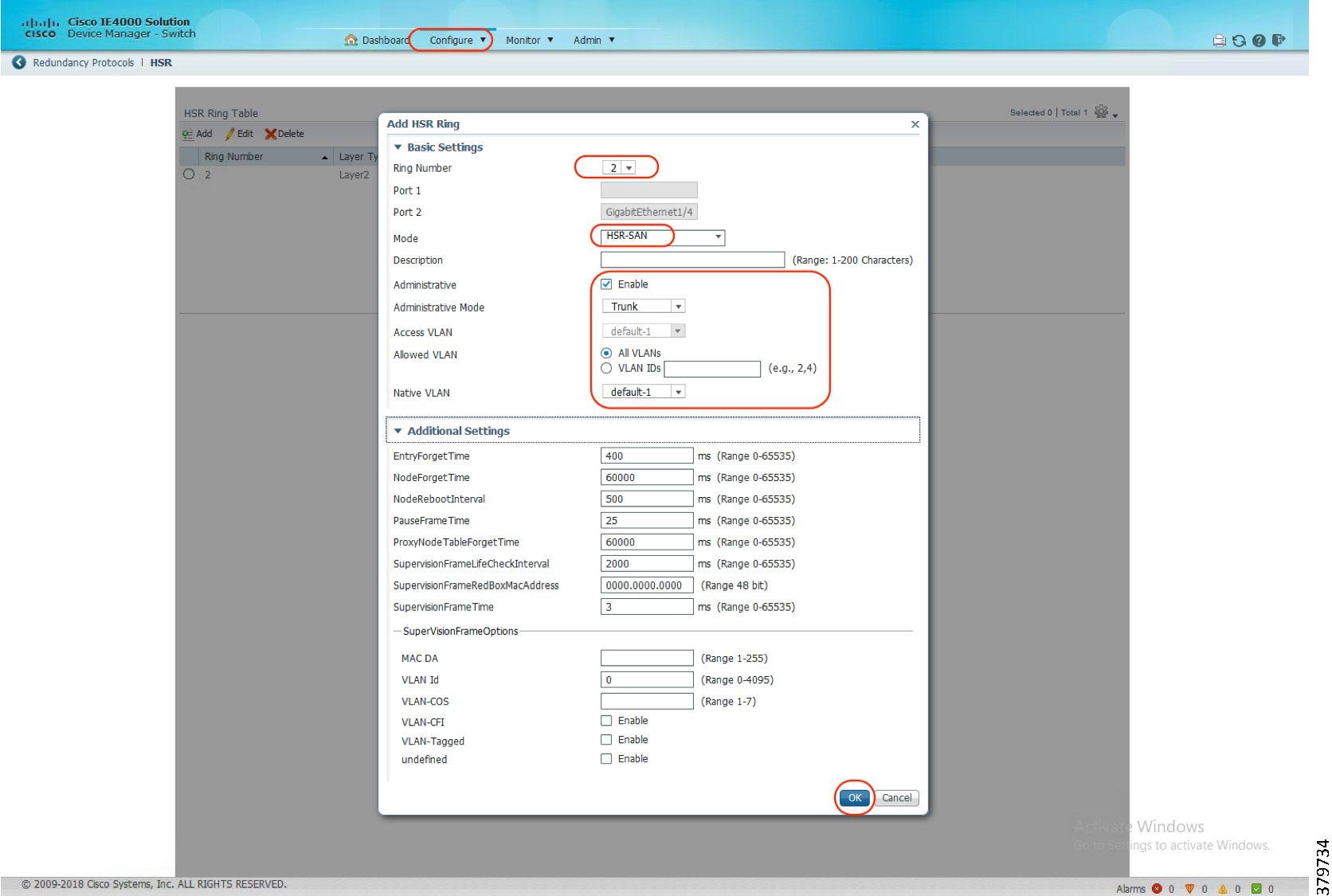

Executive Summary

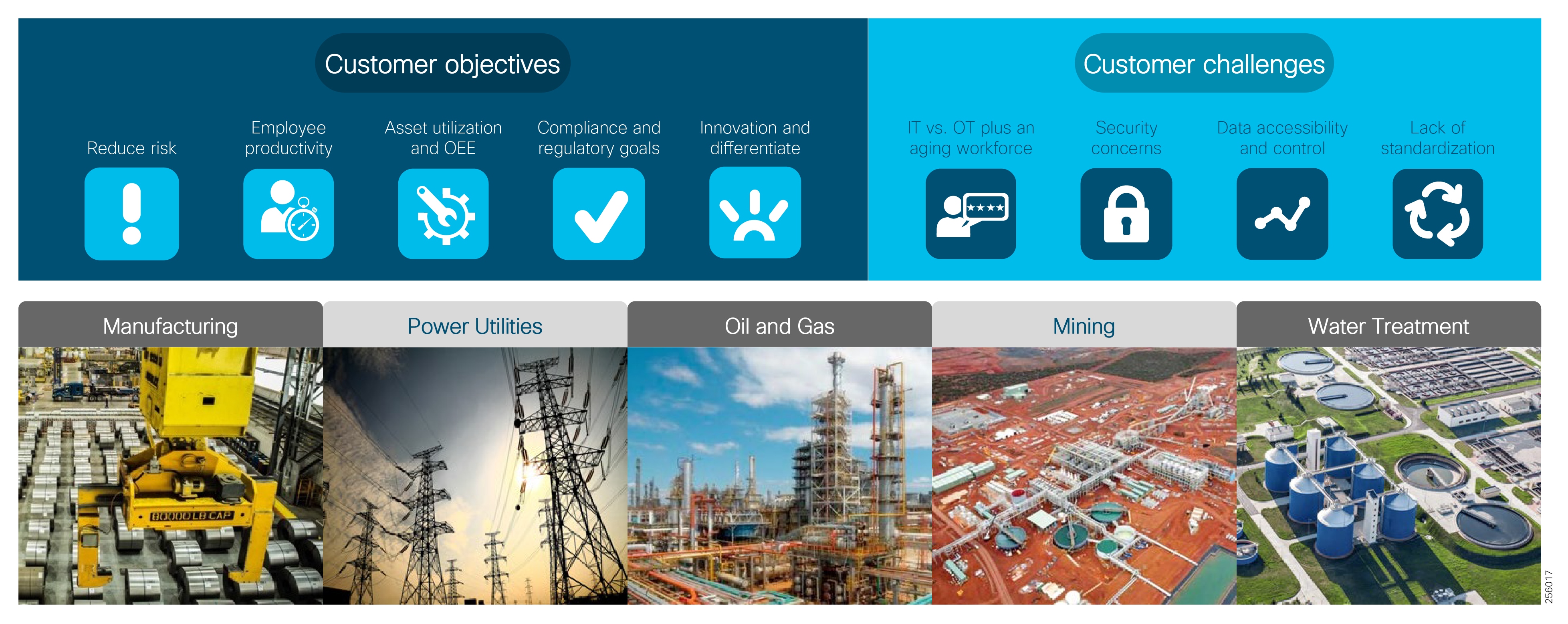

Industrial companies are seeking to drive operational improvements into their production systems and assets through convergence and digitization by leveraging the new paradigms in Industrial Internet of Things (IIoT) and Industry 4.0. However, these initiatives require securely connecting production environments via standard networking technologies to give companies and their key partners access to a rich stream of new data, real-time visibility, and, when needed, remote access to the systems and assets in the operational environments.

New data and visibility are the key to IIoT and Industry 4.0 initiatives that unlock new business value and transformational use cases. The industrial ecosystem is seeking to continuously improve efficiency, reduce costs, and increase Overall Equipment Effectiveness (OEE) through better access to information from real-time production systems in industrial areas. With a constant flow of data, industrial companies can develop more efficient ways to connect globally with suppliers, employees, and partners and to more effectively meet the needs of their customers. Securely connecting to plant systems and assets for improved access to new data is the key to enabling use cases such as predictive maintenance, real-time quality detection, asset tracking, and safety enhancements.

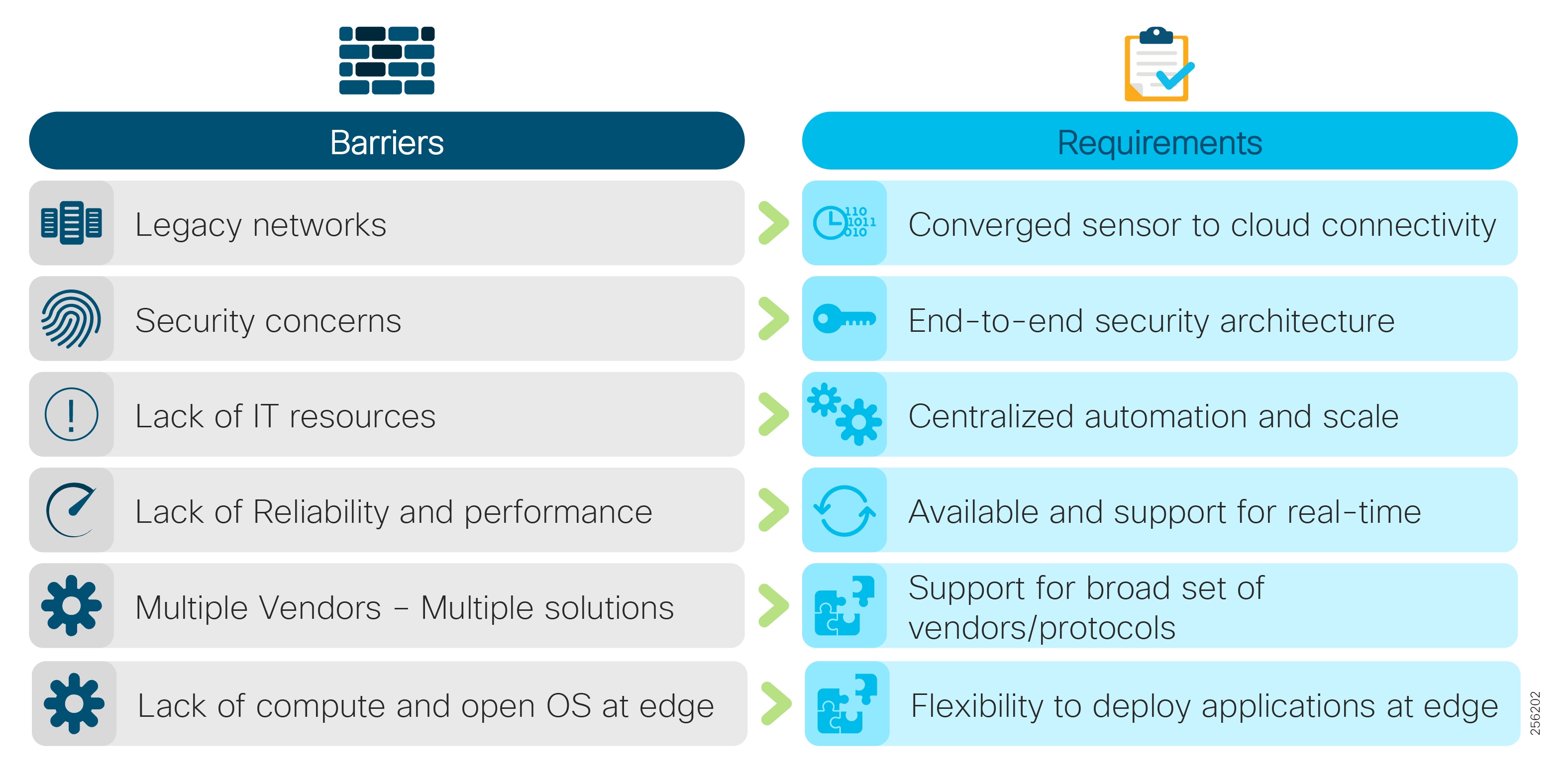

The Cisco® Industrial Automation solution and relevant product technologies are an essential foundation to securely connect and digitize industrial and production environments to achieve these significantly improved business operation outcomes. The Cisco solution overcomes top customer barriers to digitization and Industry 4.0 including security concerns, inflexible legacy networks, and complexity. The solution provides a proven and validated blueprint for connecting Industrial Automation and Control Systems (IACS) and production assets, improving industrial security, and improving plant data access and operating reliability. Following this best practice blueprint with Cisco market-leading technologies will help decrease deployment time, decrease risk, decrease complexity, and improve overall security and operating uptime.

Figure 1 Industrial Automation Customer Objectives and Challenges

Figure 2 Why Industrial Automation Solution?

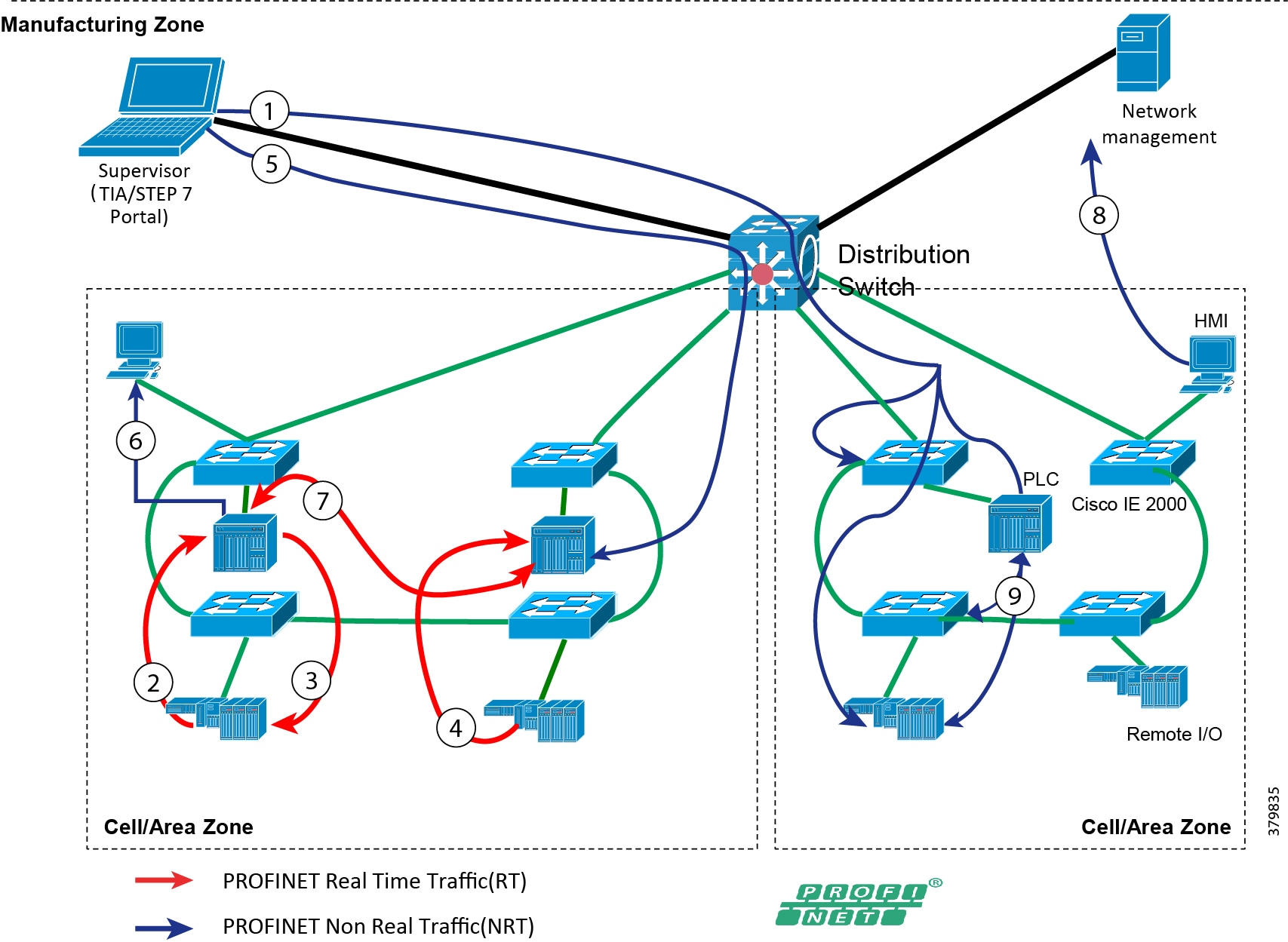

Industrial Automation Reference Architecture

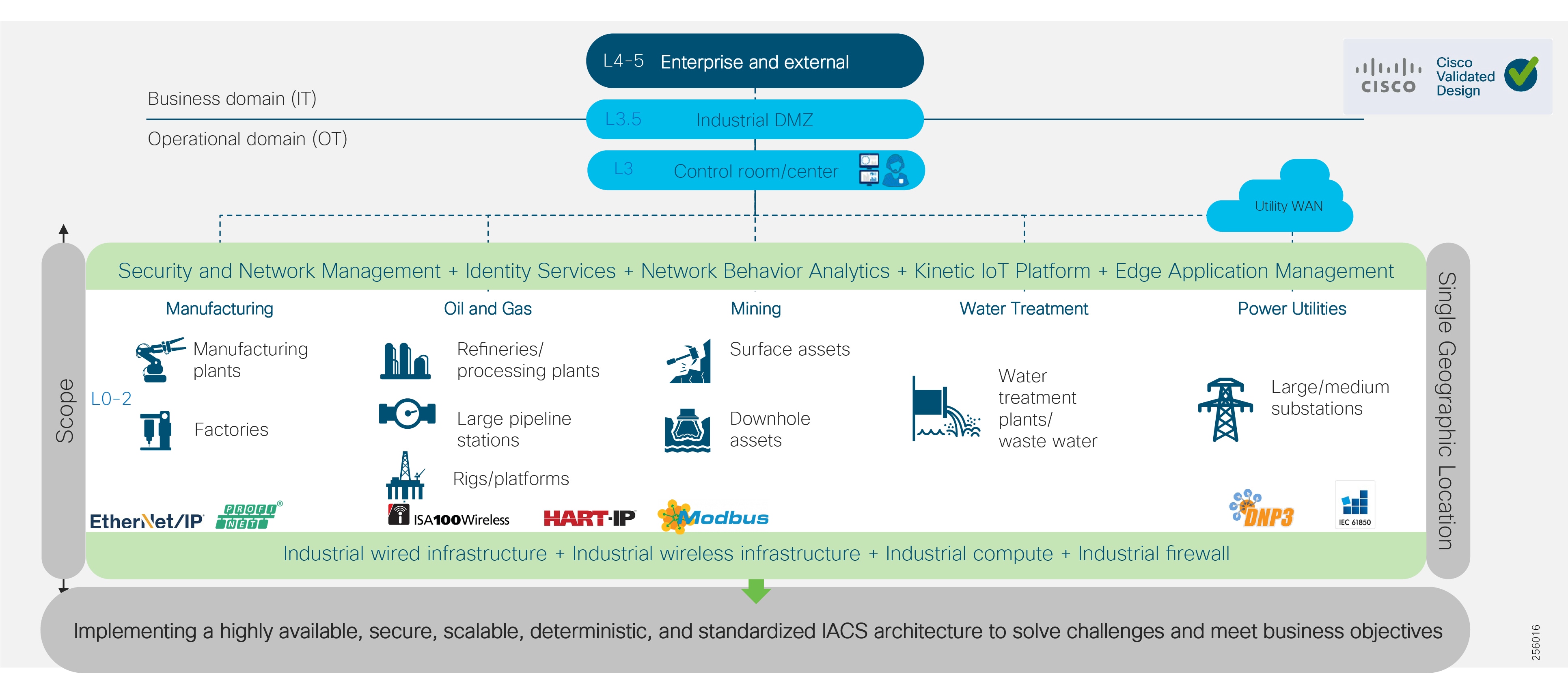

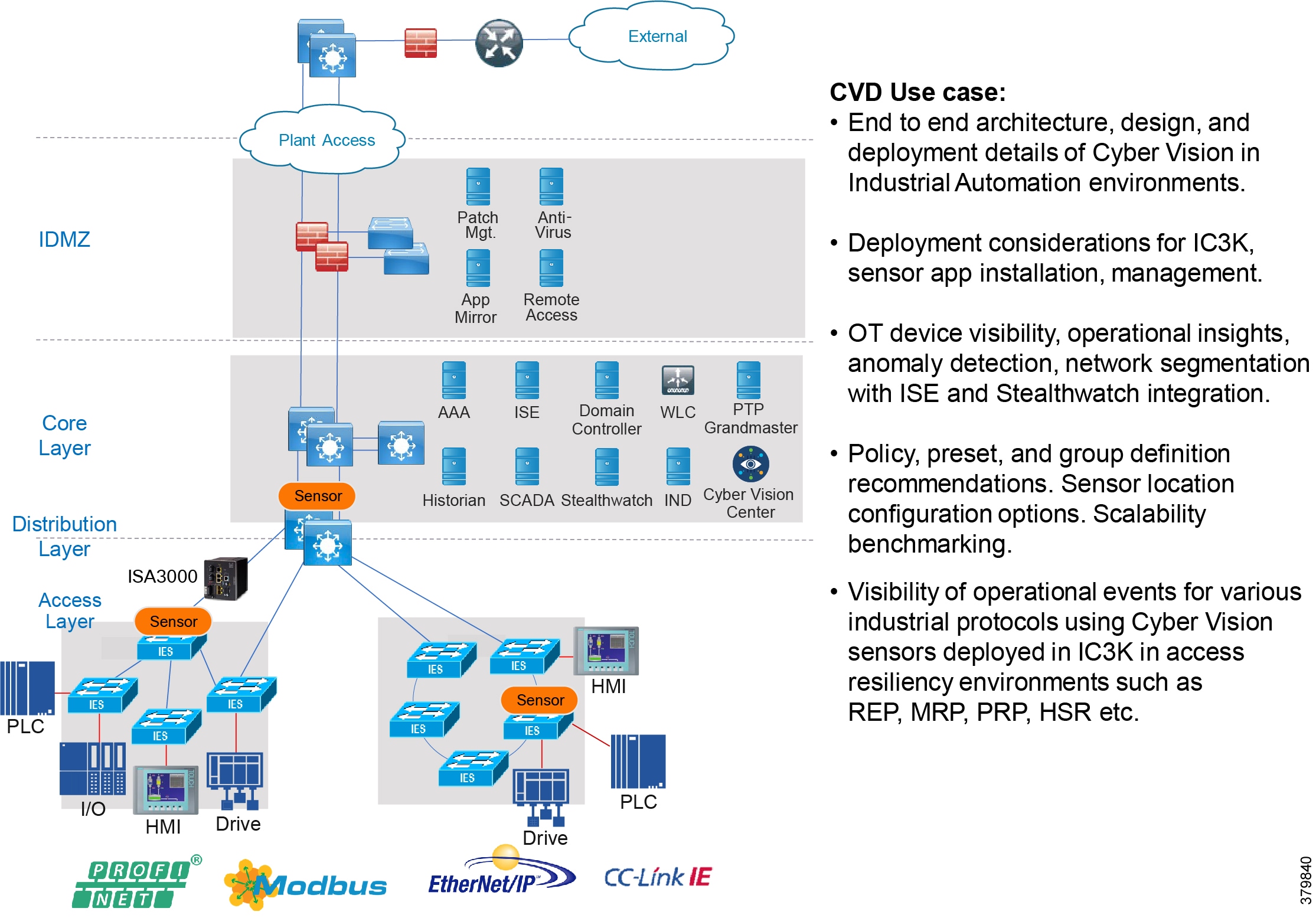

The Industrial Automation Cisco Validated Design (CVD) solution applies network, security, and data management technologies to Industrial Automation and Control System (IACS) plant environments and the key production assets at the core of operational environments. It provides a Cisco validated reference architecture along with design and deployment guidance for customers, partners, and system implementers. The solution is comprehensively tested with a wide range of industrial devices (for example, sensors, actuators, controllers, and remote terminal units), applications, and partners. Its features include high-speed connectivity, scalability, high availability, ease of use, market-leading industrial security, open standards, precise time distribution, and the alignment and connection of information technology (IT) and operational technology (OT) environments. The solution is meant for secure networking of Industrial Automation systems across a range of industrial verticals, including manufacturing, mining, oil and gas, and utilities, and in places such as plants, factories, refineries, mines, treatment facilities, substations, and warehouses.

This solution provides a blueprint for the essential security and connectivity foundation required to deploy and implement Industry 4.0 and IIoT concepts and models. This solution is thus the key to digitizing industrial and production environments to achieve significantly improved business operation outcomes.

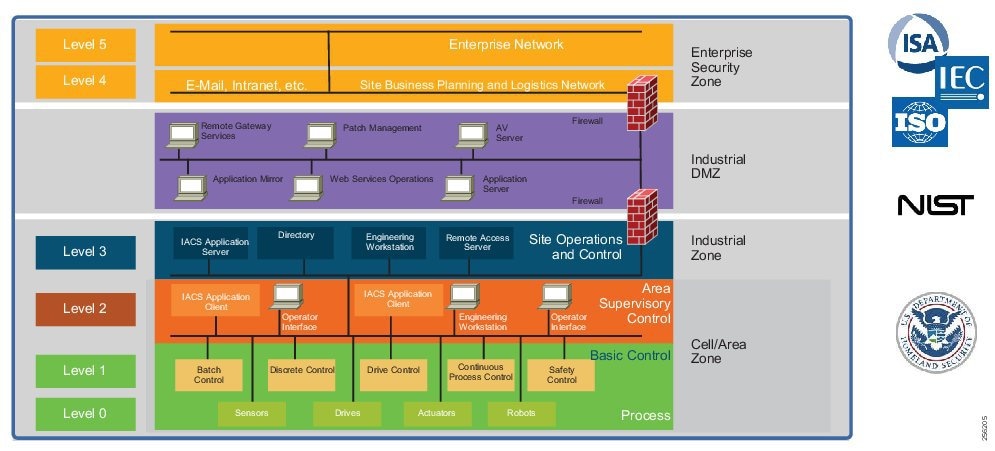

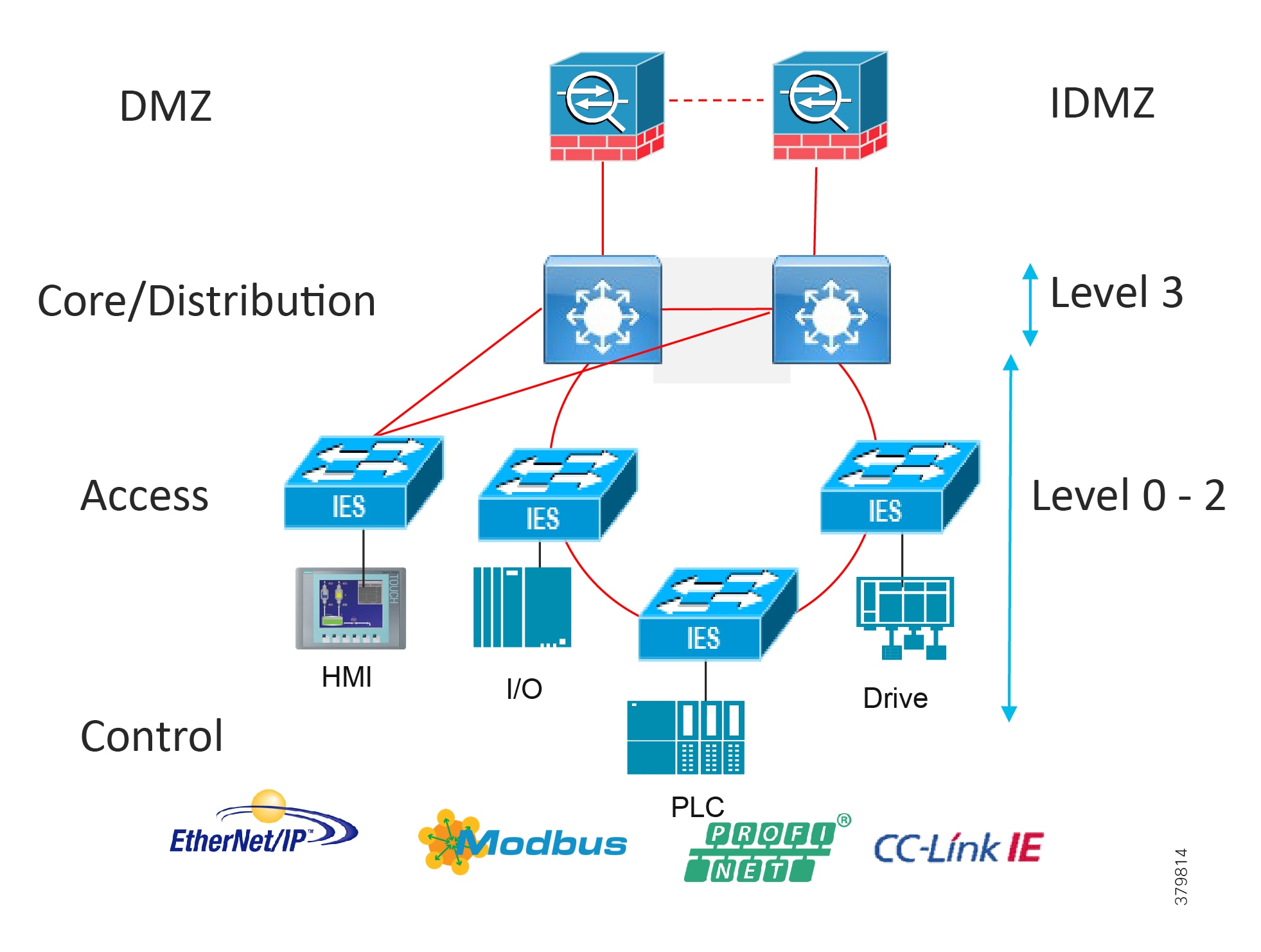

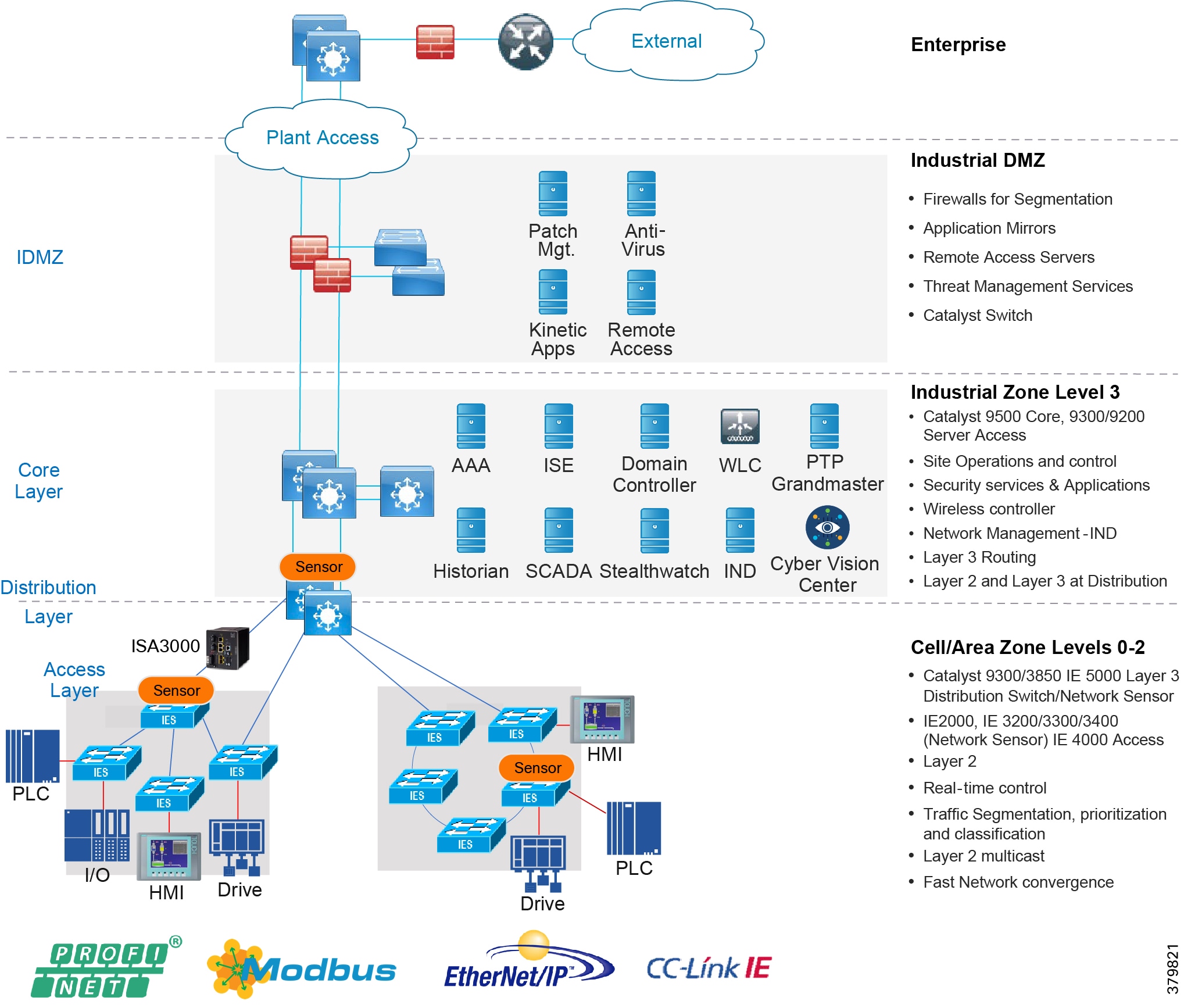

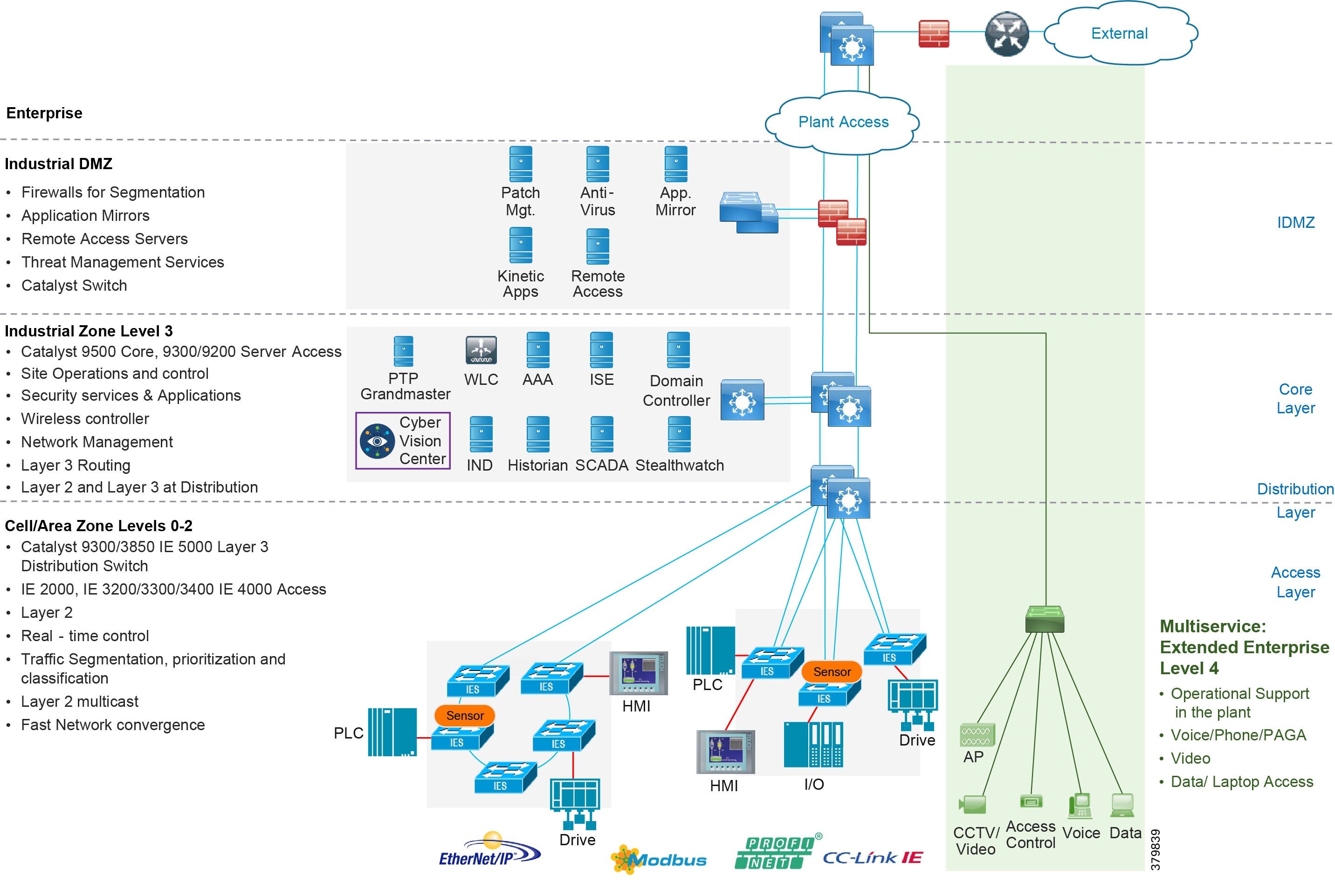

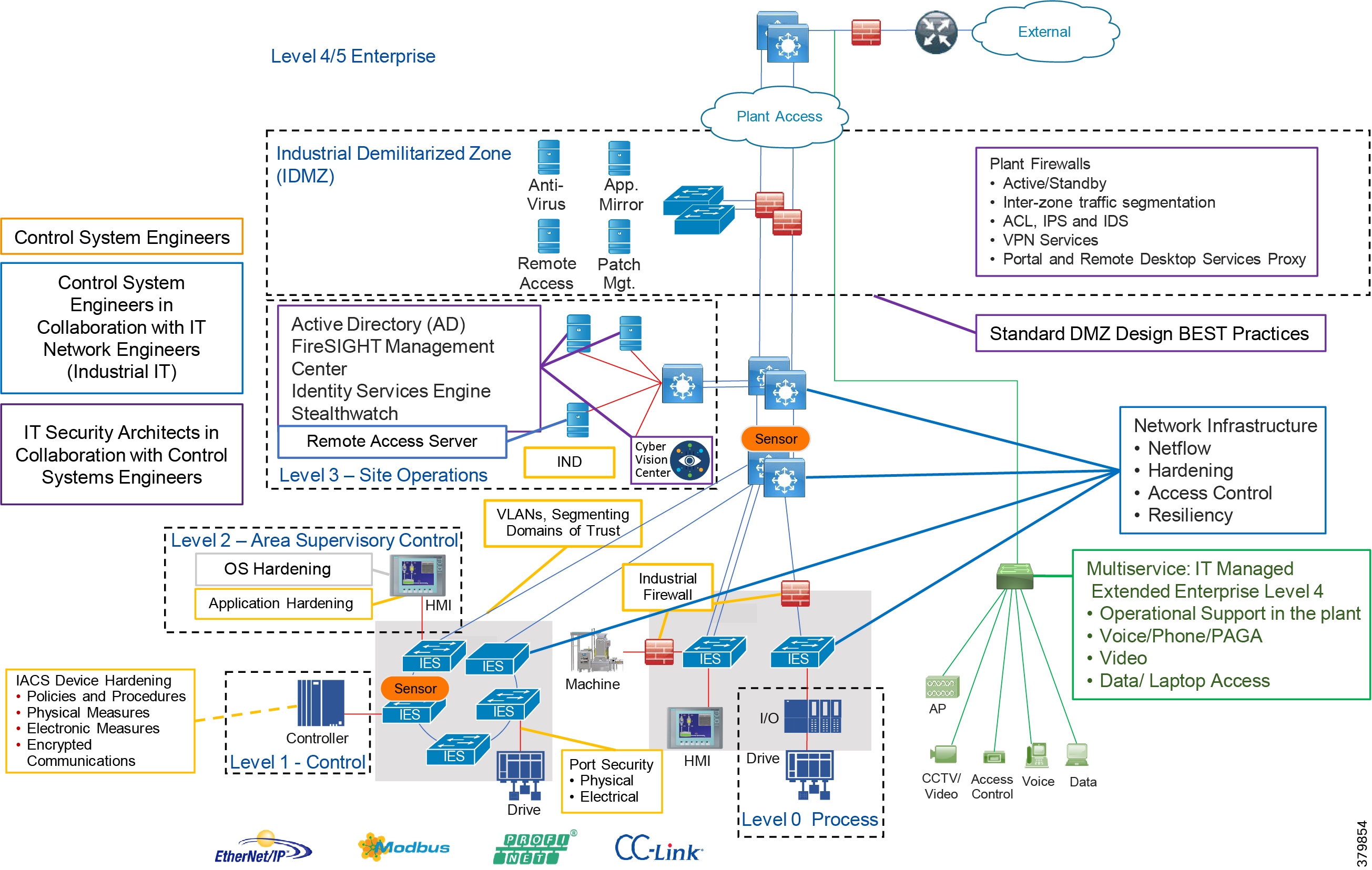

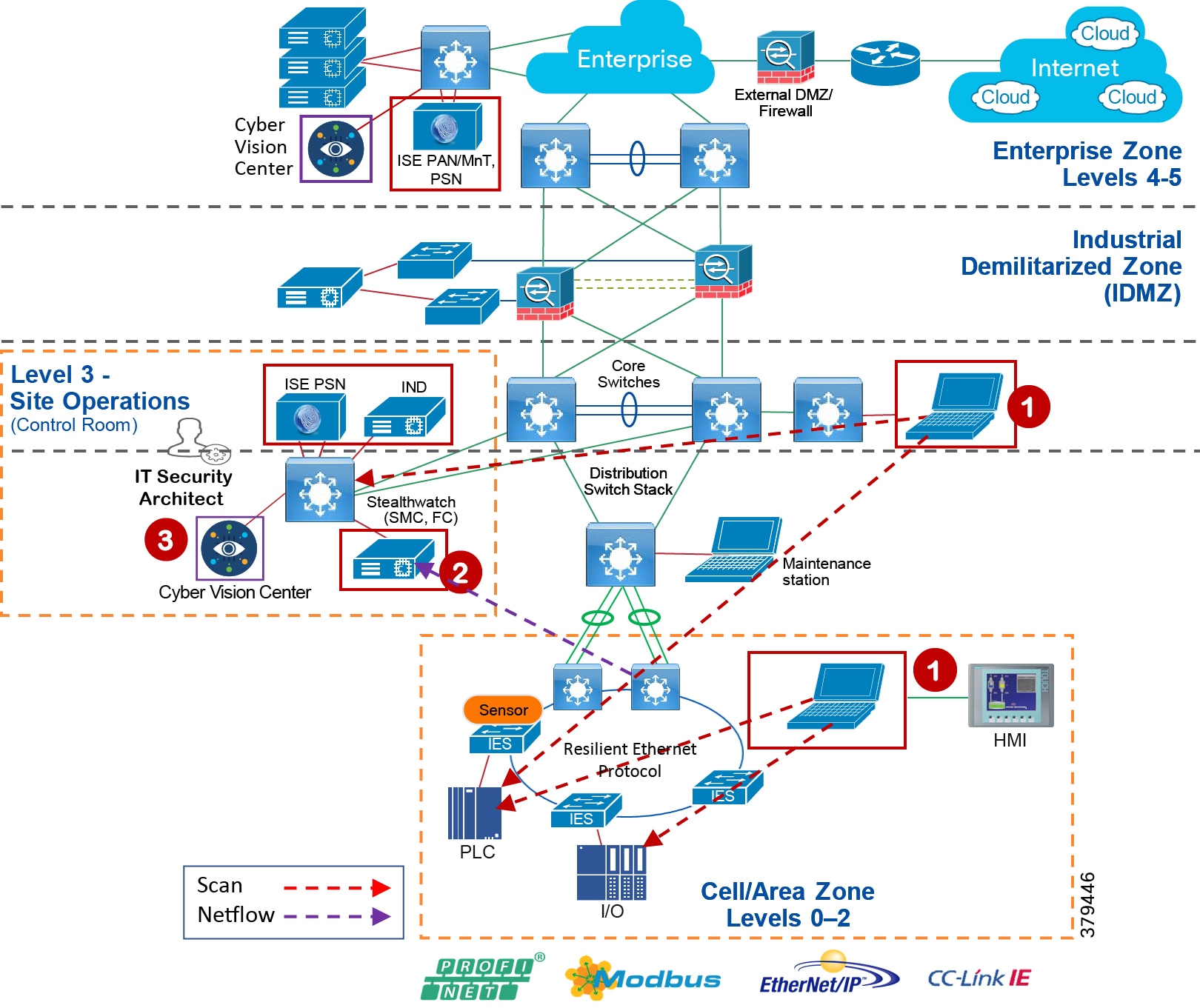

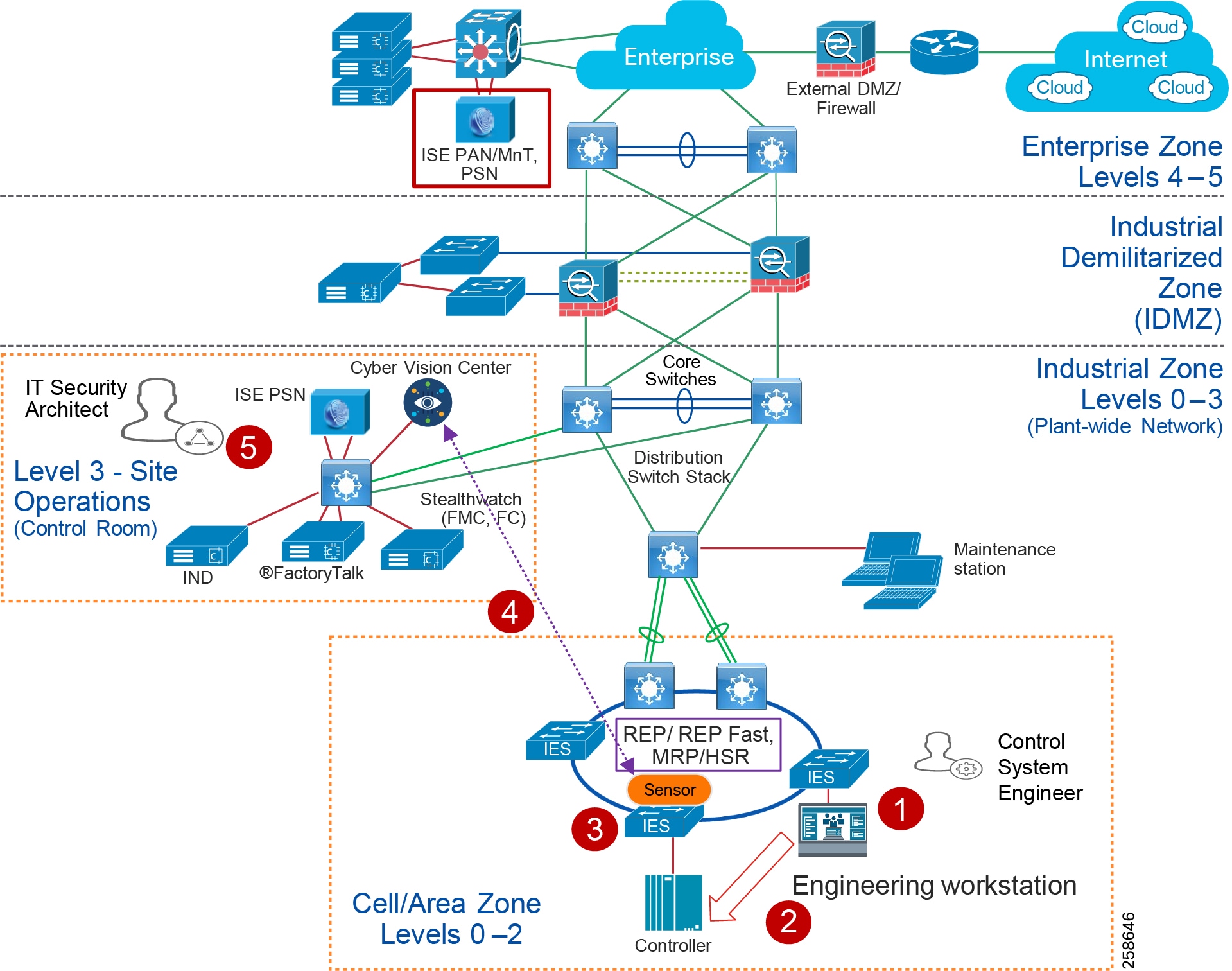

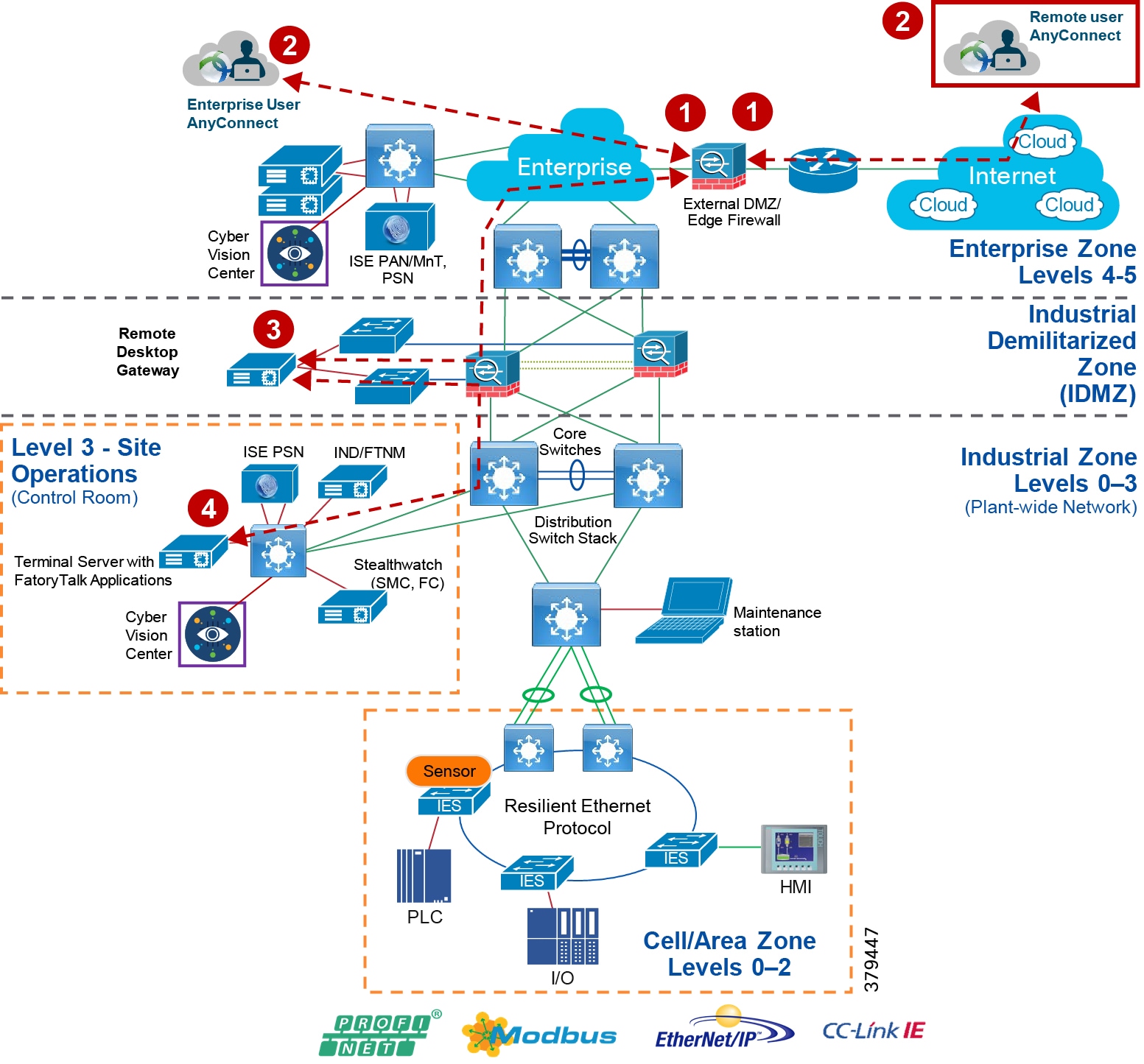

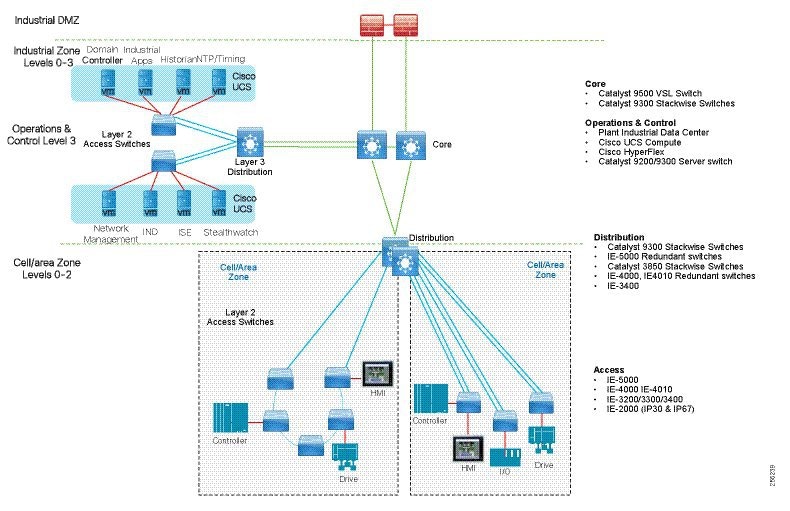

Figure 3 Industrial Automation Reference Architecture

Cross-Industry Applicability

This Industrial Automation solution encompasses networking, security, and data management applied to a wide range of industrial verticals and applications, providing a range of design and implementation alternatives that may be applicable to several industries. Although the size, vendors, applications, and devices may significantly vary among these facilities, many of the core networking and security concepts are applicable. For example, while high availability is a key requirement across all industrial use cases, oil and gas and utilities may have more stringent availability requirements than a manufacturing facility. Nonetheless, the CVD solution best practice guidance is applicable across many industries and industrial customer environments.

Use this reference architecture for the following applications:

■![]() Connectivity of IACS devices, including sensors, actuators, and controllers; key machines; and assets such as robots, CNC machines, tools, process skids, and RTUs

Connectivity of IACS devices, including sensors, actuators, and controllers; key machines; and assets such as robots, CNC machines, tools, process skids, and RTUs

■![]() Provide OT personnel with continuous visibility into and monitoring of the network and security status of the IACS devices and communication.

Provide OT personnel with continuous visibility into and monitoring of the network and security status of the IACS devices and communication.

■![]() Enable remote access to production assets and personnel to improve uptime.

Enable remote access to production assets and personnel to improve uptime.

■![]() Support plant-wide applications such as manufacturing execution systems, Supervisory Control and Data Acquisition (SCADA), historians, and asset management.

Support plant-wide applications such as manufacturing execution systems, Supervisory Control and Data Acquisition (SCADA), historians, and asset management.

■![]() Implement relevant network services, including DNS, DHCP, Sitewide Precise Time Distribution, and authentication.

Implement relevant network services, including DNS, DHCP, Sitewide Precise Time Distribution, and authentication.

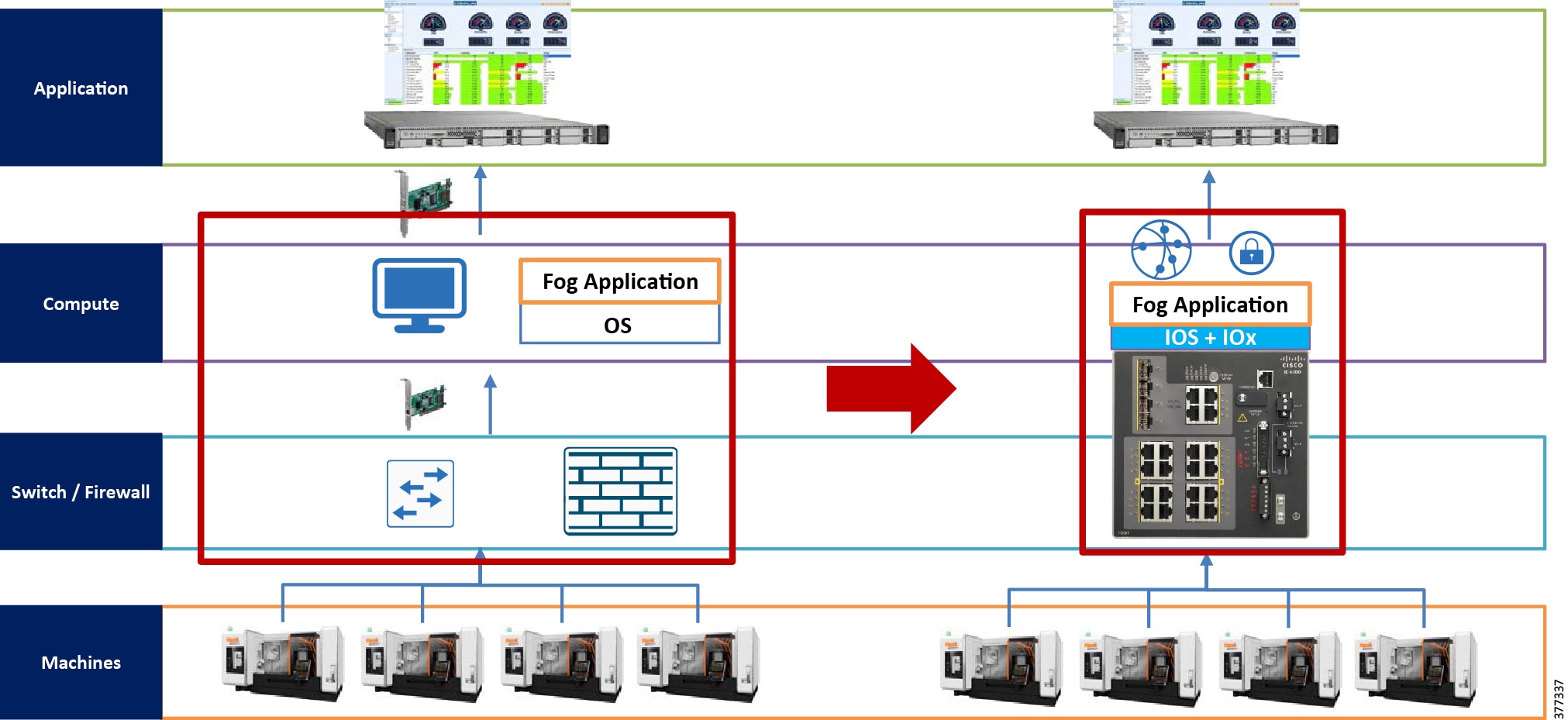

■![]() Enable IoT applications with Edge Compute such as predictive analytics and maintenance, Digital Twin, and machine learning and optimization.

Enable IoT applications with Edge Compute such as predictive analytics and maintenance, Digital Twin, and machine learning and optimization.

| | | | | | |
|---|---|---|---|---|---|
| Customer challenge | Access production assets and data; Security risks; Complex network silos creating downtime, data isolation, and vulnerabilities; Inflexible and high operating costs; Expertise to manage data, networks, and security | Access production assets and data; Security risks; Aging infrastructure; Managing and securing silo proprietary applications and networks is costly; Expertise to manage data, networks, and security | Asset reliability; People and asset optimization; Security risks; Employee safety; Complex network silos creating downtime, data isolation, and vulnerabilities; Inflexible networks and high operating costs; Expertise to manage data, networks, and security | Asset reliability; People and asset optimization; Security risks; Employee safety; Complex network silos creating downtime, data isolation, and vulnerabilities | Rising costs of maintenance, equipment, and supplies; Dealing with leakage issues, especially in drought-impacted countries; Managing facilities within geographic areas with fewer personnel; Remaining compliant with changing regulations |
| Cisco Industrial Automation Solution Features | High availability; One solution that is interoperable across all major industrial control system protocols; End-to-end connectivity for data visibility (not physically segmented); Integrated security at all levels (that works for OT and IT); Real-time, deterministic; Industrial best practice designed; Manageable for repair and uptime; Intent-based ease of use; Flexibility (modularity) | High availability; One solution that is interoperable across all major industrial control system protocols; End-to-end connectivity for data visibility (not physically segmented); Integrated security at all levels (that works for OT and IT); Real-time, deterministic; Industrial best practice designed; Manageable for repair and uptime; Intent-based ease of use; Flexibility (modularity) | High availability; One solution that is interoperable across all major industrial control system protocols; End-to-end connectivity for data visibility (not physically segmented); Integrated security at all levels (that works for OT and IT); Real-time, deterministic; Industrial best practice designed; Manageable for repair and uptime; Intent-based ease of use; Flexibility (modularity) | High availability; One solution that is interoperable across all major industrial control system protocols; End-to-end connectivity for data visibility (not physically segmented); Integrated security at all levels (that works for OT and IT); Real-time, deterministic; Industrial best practice designed; Manageable for repair and uptime; Intent-based ease of use; Flexibility (modularity) | High availability; One solution that is interoperable across all major industrial control system protocols; End-to-end connectivity for data visibility (not physically segmented); Integrated security at all levels (that works for OT and IT); Real-time, deterministic; Industrial best practice designed; Manageable for repair and uptime; Intent-based ease of use; Flexibility (modularity) |
| Customer Benefits | Reliable plant operations: higher uptime and OEE from improved data visibility; Lower costs and scrap through improved real-time process visibility; Lower costs from reduced OpEx: easy to configure, upgrade, replace, and maintain; Secure plant operation; Less downtime | Increased operational reliability; Real-time data visibility; Reduced risk from security attacks; Reliable network for time-sensitive and mission-critical communications; High availability; Real-time visibility | Improve reliability and manage risks; Reduce waste and processing; Increased operational reliability; Shorten turnaround times; Minimize fines and penalties; Improve worker safety and environmental compliance; Real-time visibility; Secure plant operation | OEE and availability; Increased production; Safety and environmental compliance; Reduced OpEx: easy to configure, upgrade, replace, and maintain; Wireless to wireline to securely connect machines and people for agility and improved safety and productivity of staff | Secure utility information management; Reduced risk from security attacks; Remote monitoring; High availability; Reduced OpEx: easy to configure, upgrade, replace, and maintain; Safety and environmental compliance |

Evolving Plant Environment

Over the past decade or so, the pace of change in the Industrial Automation space has clearly accelerated, driven largely by technology improvements epitomized by terms such as Industrial Internet of Things, Fog/Edge computing, Smart Factories, and Industry 4.0—the fourth revolution in industrial capabilities and digitization. This section examines some of these trends and how this solution is aligned to enable them.

Industrial Internet of Things

The Industrial Internet of Things (IIoT) is based on the idea that the growth of connected devices is increasingly driven not by computers and mobile devices used by humans, but by things used in all forms of automation and in the control of the industrial ecosystem. The industrial ecosystem is moving away from fieldbus technologies based on proprietary networks and toward communication protocols that run over standard networking such as Ethernet, 802.11-based Wi-Fi, and the portfolio of IP protocols (for example, TCP and UDP). This focus on open networking standards is foundational: the devices or "things" that make up the industrial ecosystem are capable of communicating on converged, open networks, which significantly improves the accessibility of data and information. The IIoT therefore enables Digital Transformation and the revolution of the industrial ecosystem referred to as Industry 4.0. By driving converged, open networks into these industrial ecosystems, this solution establishes the IIoT for customers who adopt it.

Industry 4.0

Industry 4.0 is based on the idea that manufacturing, utilities, mining, and oil and gas are going through a fourth industrial revolution. It can be seen as the summation of industrial and computing trends. At its heart is the concept that physical devices, machines, and processes can be tightly controlled and operated significantly better by combining them with cyber-systems, IIoT, cloud computing, artificial intelligence, machine learning, and other relevant technologies.

Industry 4.0 outlines four key design principles:

■ Interconnection: The ability of machines, devices, sensors, and people to connect and communicate with each other via the Internet of Things (IoT).

■ Information transparency: The transparency afforded by Industry 4.0 technology provides operators with vast amounts of useful information needed to make appropriate decisions. Interconnectivity allows operators to collect immense amounts of data and information from all points in the manufacturing process, thus aiding functionality and identifying key areas that can benefit from innovation and improvement.

■ Technical assistance: First, the ability to support humans by aggregating and visualizing information comprehensively for making informed decisions and solving urgent problems on short notice. Second, the ability of cyber physical systems to physically support humans by conducting a range of tasks that are unpleasant, too exhausting, or unsafe for their human co-workers.

■ Decentralized decisions: The ability of cyber physical systems to make decisions on their own and to perform their tasks as autonomously as possible. Only in the case of exceptions, interferences, or conflicting goals are tasks delegated to a higher level.

The Cisco Industrial Automation solution is a foundational aspect of an Industry 4.0 approach, focusing heavily on the interconnection and cybersecurity of industrial environments, but also providing information transparency through data management capabilities, technical assistance through remote connectivity and collaboration capabilities, as well as decentralized decisions with the fog/edge computing capabilities.

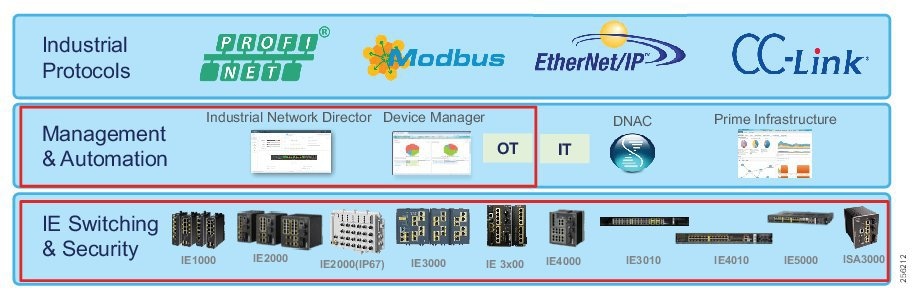

Cisco Industrial Automation Solution Features

This Industrial Automation solution applies IT capabilities and expertise, tuned and aligned with OT requirements and applications, and delivers the following for industrial environments:

■ High Availability for all key industrial communication and services

■ Real-time, deterministic application support with low network latency and jitter for the most challenging applications, such as motion control

■ Deployable in a range of industrial environmental conditions with Industrial-grade as well as commercial-off-the-shelf (COTS) IT equipment

■ Scalable from small (tens to hundreds of IACS devices) to very large (thousands to 10,000s) deployments

■ Intent-based manageability and ease-of-use to facilitate deployment and maintenance especially by OT personnel with limited IT capabilities and knowledge

■ Compatible with industrial vendors, including Rockwell Automation, Schneider Electric, Siemens, Mitsubishi Electric, Emerson, Honeywell, Omron, and SEL

■ Reliance on open standards to ensure vendor choice and protection from proprietary constraints

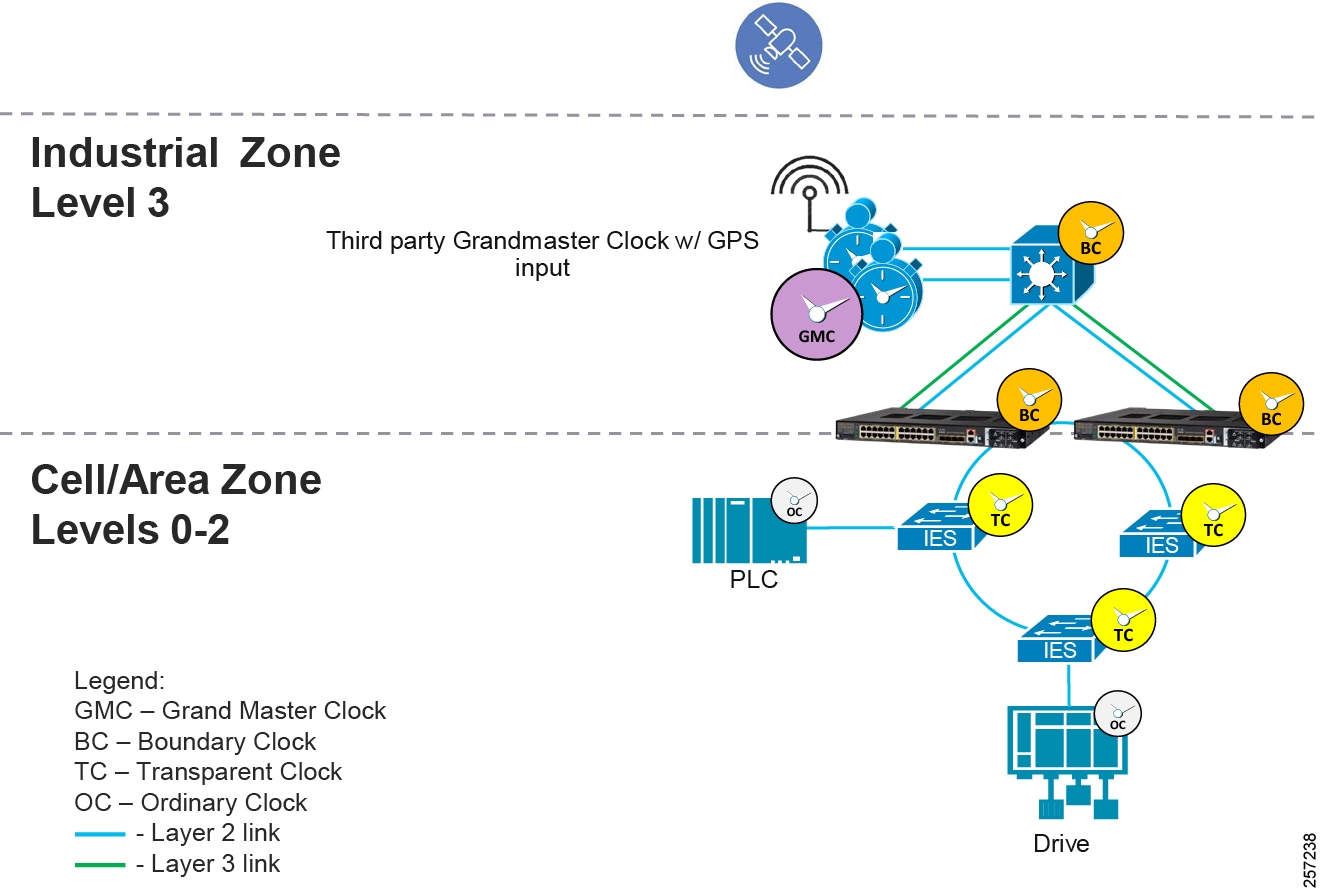

■ Distribution of Precise Time across the site to support motion applications and Sequence of Events data collection

■ Converged network to support communication from sensor to cloud, enabling many Industry 4.0 use cases

■ IT-preferred security architecture that integrates OT context and is applicable to and validated for Industrial applications (achieves best practices for both OT and IT environments)

■ Deployment of IoT applications with support for Edge Compute

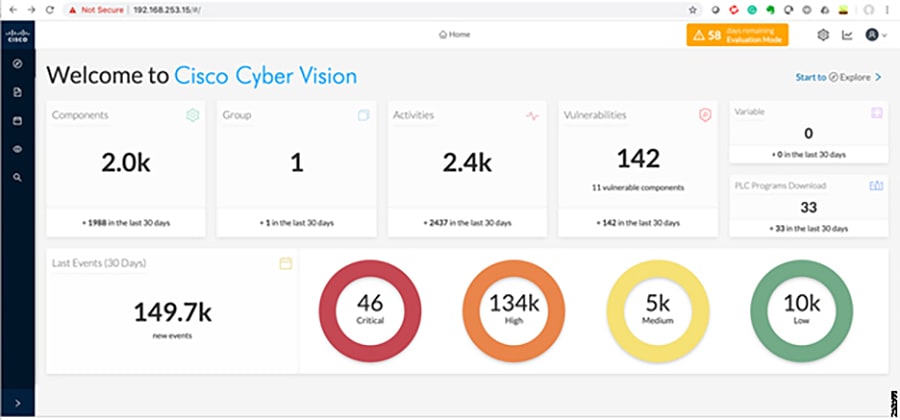

■ OT-focused, continuous cybersecurity monitoring of IACS devices and communications

Solution Benefits for Industrial Environments

Benefits of securely connecting industrial automation systems via deploying this solution and the relevant Cisco technologies include:

■ Reduce risk in the production environment through industry-leading IT- and OT-focused security

■ Improve overall equipment effectiveness (OEE) and asset utilization through increased production availability and increased control system and asset visibility

■ Reduce product defects through early indication of quality-impacting events or conditions

■ Faster deployment of new lines, line modifications, or new plants

■ Faster troubleshooting of equipment (with reduction in connectivity or security-related downtime)
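To make the OEE benefit above concrete, the following is a minimal sketch of the commonly used definition of OEE as the product of availability, performance, and quality. This definition and the field names are assumptions for illustration; they are not taken from this guide, and the shift figures are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShiftCounts:
    """Hypothetical per-shift counters, for illustration only."""
    planned_minutes: float      # scheduled production time
    downtime_minutes: float     # unplanned stops during the shift
    ideal_cycle_seconds: float  # ideal time to produce one unit
    total_units: int            # all units produced, good or bad
    good_units: int             # units that passed quality checks


def oee(c: ShiftCounts) -> float:
    """Return OEE as availability * performance * quality (0.0 to 1.0)."""
    run_minutes = c.planned_minutes - c.downtime_minutes
    if c.planned_minutes <= 0 or run_minutes <= 0 or c.total_units <= 0:
        return 0.0
    availability = run_minutes / c.planned_minutes
    performance = (c.ideal_cycle_seconds * c.total_units) / (run_minutes * 60)
    quality = c.good_units / c.total_units
    return availability * performance * quality


shift = ShiftCounts(planned_minutes=480, downtime_minutes=48,
                    ideal_cycle_seconds=60, total_units=324, good_units=243)
print(round(oee(shift), 5))  # 0.9 * 0.75 * 0.75 = 0.50625
```

In this example, reducing unplanned downtime raises the availability factor directly, which is one way increased production availability shows up in the OEE figure.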

What's New for Industrial Environments in this CVD?

This solution leverages and extends existing documentation and testing, as indicated in the executive summary. This version relies on that body of work and incorporates new products and technologies to further enhance the offer. Solution enhancements include:

■ Oil and Gas: Support for Process Control and Refinery applications, with a focus on wireless support based on the new IW6300 intrinsically safe Wi-Fi access points backhauling wireless sensor traffic from WirelessHART systems. This includes uninterrupted service migration from legacy 1552 APs to IW6300s, including the wireless LAN controllers that manage them.

■![]() Expanded support of Cisco Software-Defined Access (SDA) Ready platforms —The Cisco IE 3200, Cisco IE 3300, and Cisco IE 3400 Rugged Series switches, now including the IP67 rated Cisco IE 3400 H, have expanded support for Profinet, a range of resiliency protocols, and fully participate in the Cell/Area Zone security features.

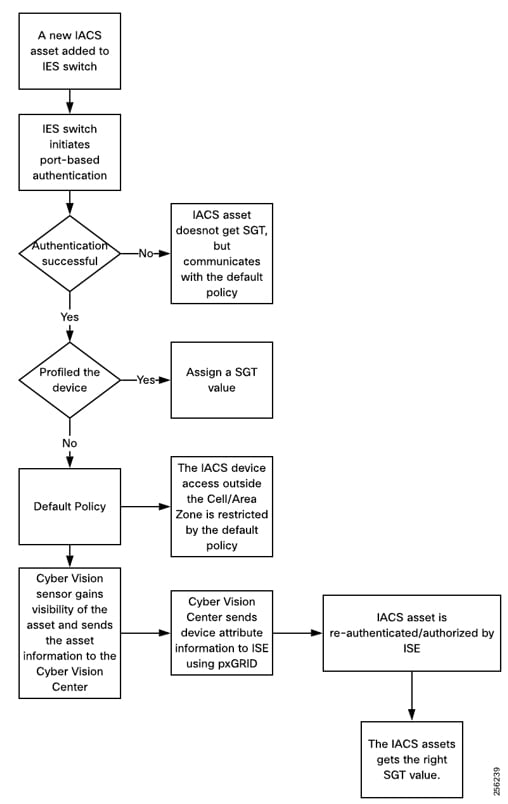

Expanded support of Cisco Software-Defined Access (SDA) Ready platforms —The Cisco IE 3200, Cisco IE 3300, and Cisco IE 3400 Rugged Series switches, now including the IP67 rated Cisco IE 3400 H, have expanded support for Profinet, a range of resiliency protocols, and fully participate in the Cell/Area Zone security features.

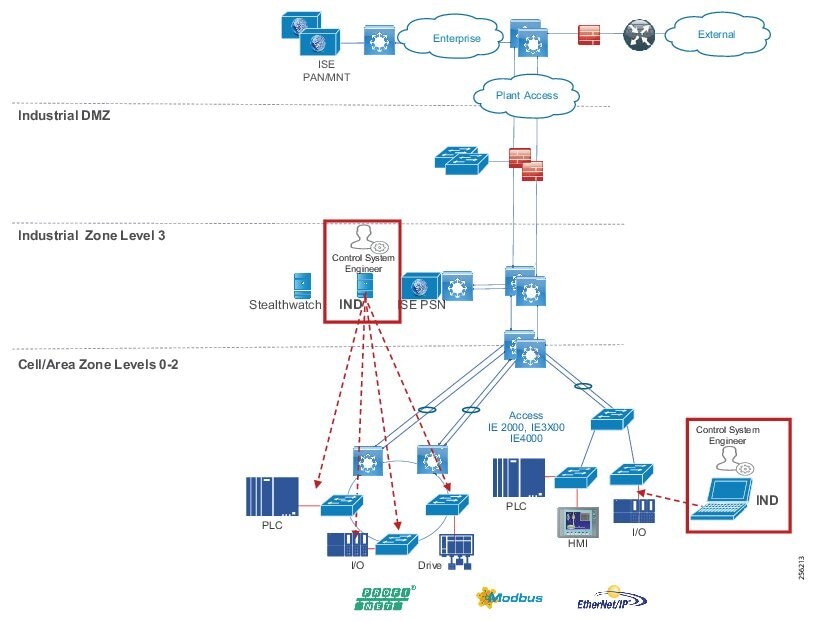

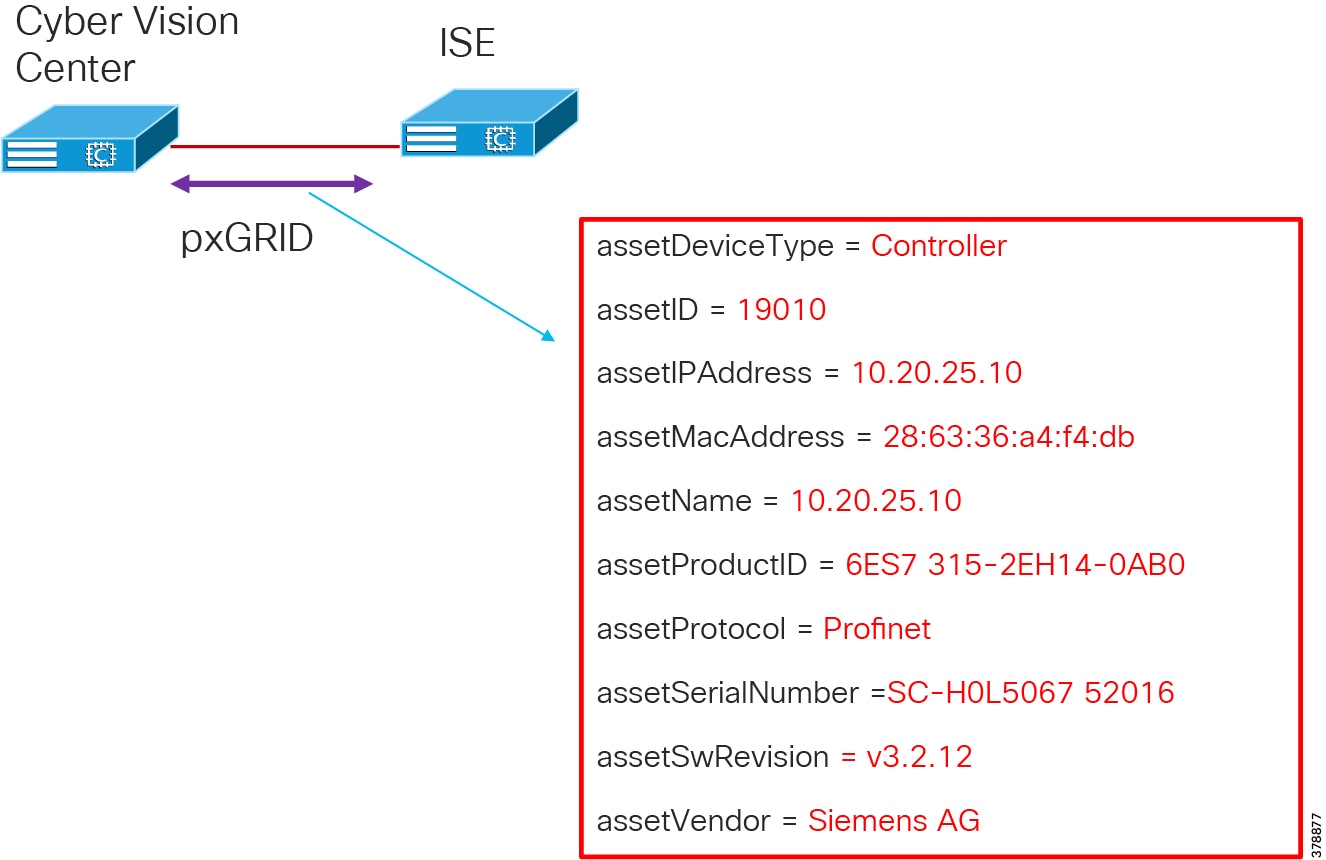

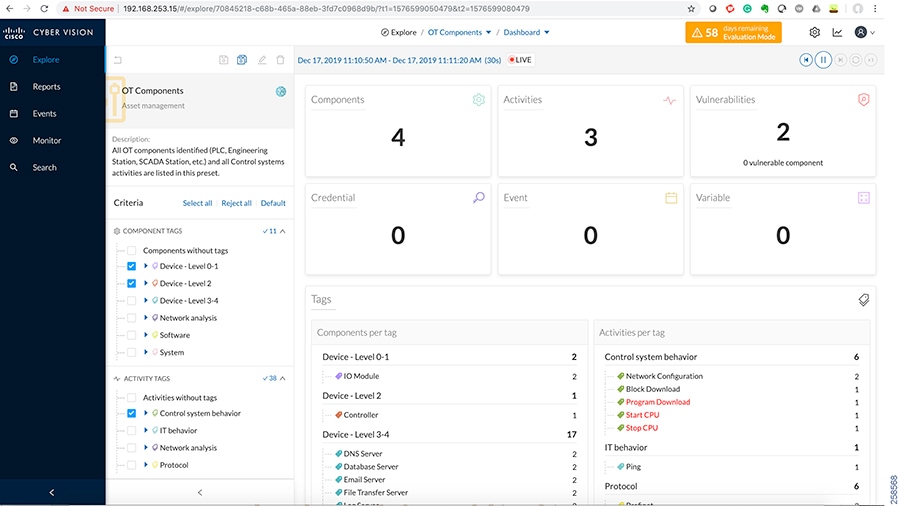

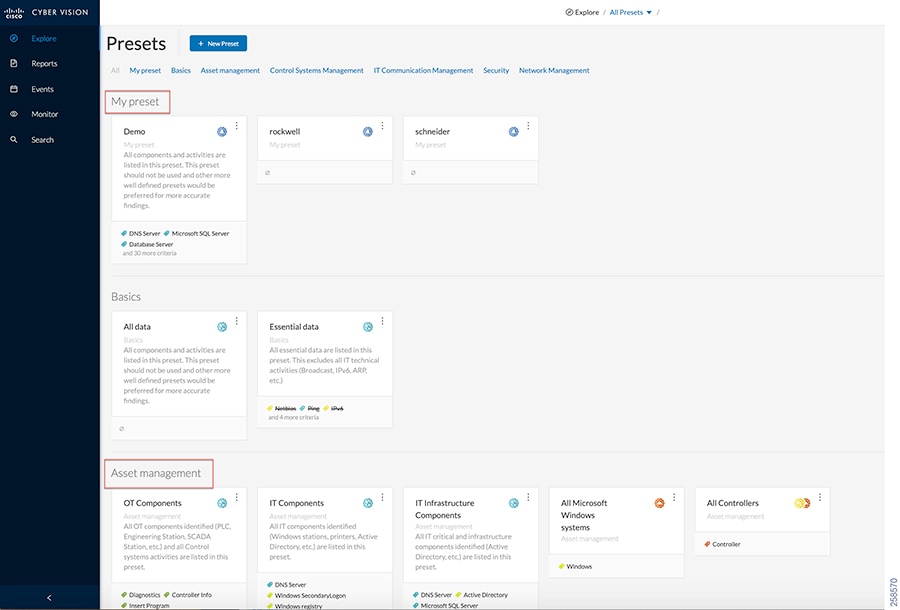

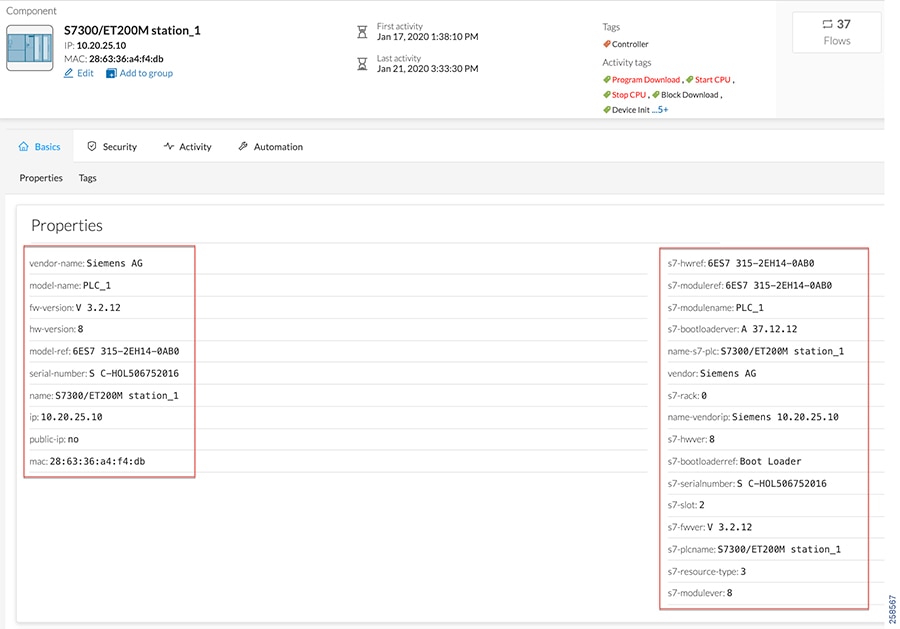

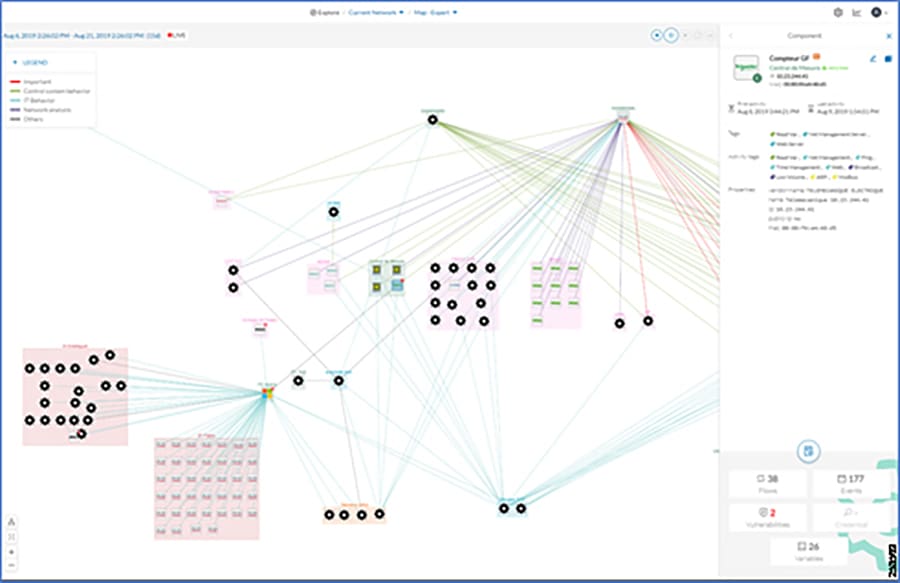

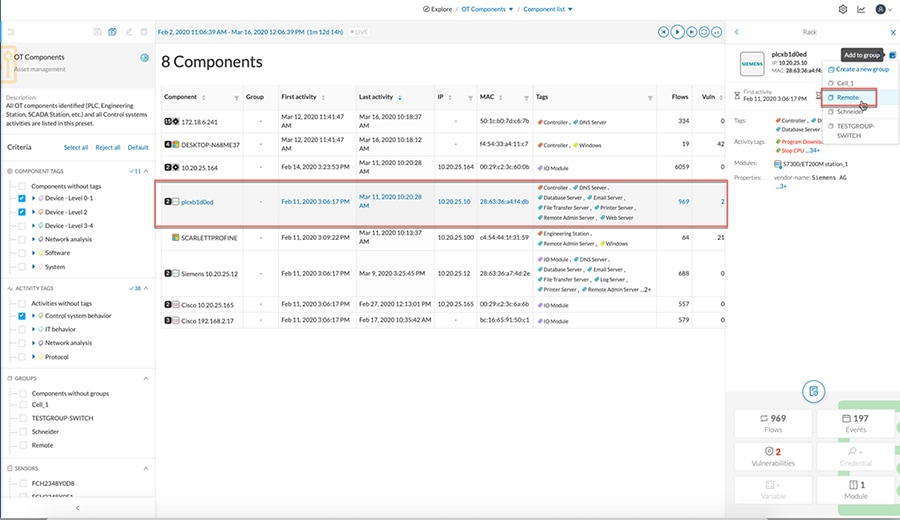

■ Cisco Cyber Vision Industrial Cyber Security: Integrated Cisco Cyber Vision OT-focused industrial cybersecurity visibility and monitoring

Note on SDA-Ready Platforms—The Cisco Catalyst 9300 switch was introduced and validated as the distribution switch for the Cell/Area Zone. The Cisco Catalyst 9300 platform currently supports Software-Defined Access, which provides automated configuration and end-to-end segmentation to separate user, device, and application traffic without redesigning the network. SDA automates user access policy so organizations can make sure the right policies are established for any user or device with any application across the network. Ease of management and intent-driven networking with policy will be valuable additions for the industrial plant environments. Cisco is leveraging SDA in our Cisco IoT Extended Enterprise solutions for non-carpeted spaces (see www.cisco.com/go/iotcvd) where IT manages portions of industrial plants, warehouses, parking lots, roadways/intersections, etc. However, SDA is not yet validated for deployment to support industrial automation and control (the control loop) applications in the Cell/Area Zone in this solution. The new IE platforms are being positioned in the architecture to prepare for when SDA is able to support Cell/Area Zone industrial automation and control application requirements and protocols.

Intended Audience

This CVD is intended for anyone deploying IACS systems. It is intended to be used by both IT and OT personnel to drive secure convergence of industrial systems into Enterprise networks. The solution provides industrial automation network and security design and implementation guidance for vendors, partners, system implementers, customers, and service providers involved in designing, deploying, or operating production systems. This design and implementation guide provides a comprehensive explanation of the Cisco recommended networking and security for IACS. It includes information about the system architecture, possible deployment models, and guidelines for implementation and configuration. This guide also recommends best practices when deploying the validated reference architecture.

Industrial Automation Architecture Considerations

This section provides foundational concepts, building blocks, and considerations for industrial automation environments.

Plant Logical Framework

The 20th century saw a significant increase in the output of industrial processes and verticals, from utilities to process and discrete manufacturing. These developments were largely driven by advancements in automation and control technology, including the invention of programmable logic controllers (PLCs), industrial robots, and computer numerical control (CNC) machine tools. Paired with software-based applications such as SCADA, Manufacturing Execution Systems (MES), and Historian and Asset Management systems, these technologies gave rise to IACS.

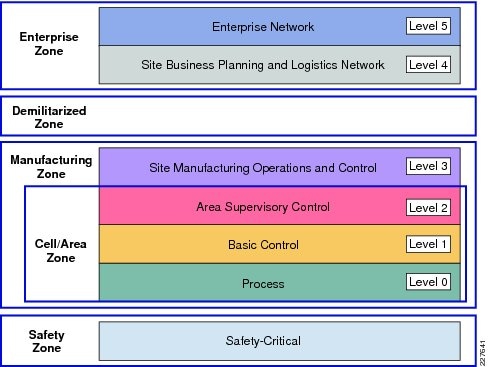

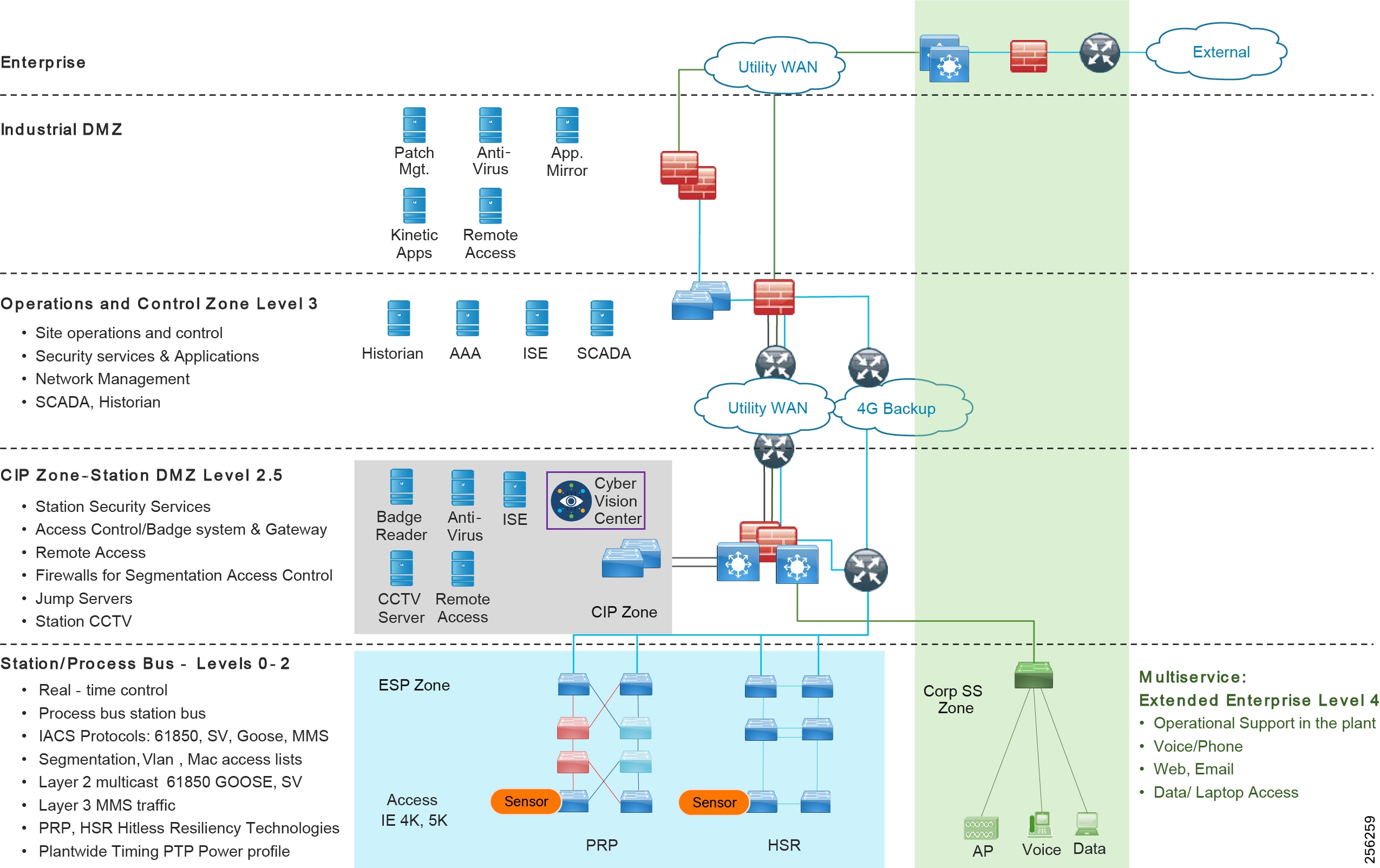

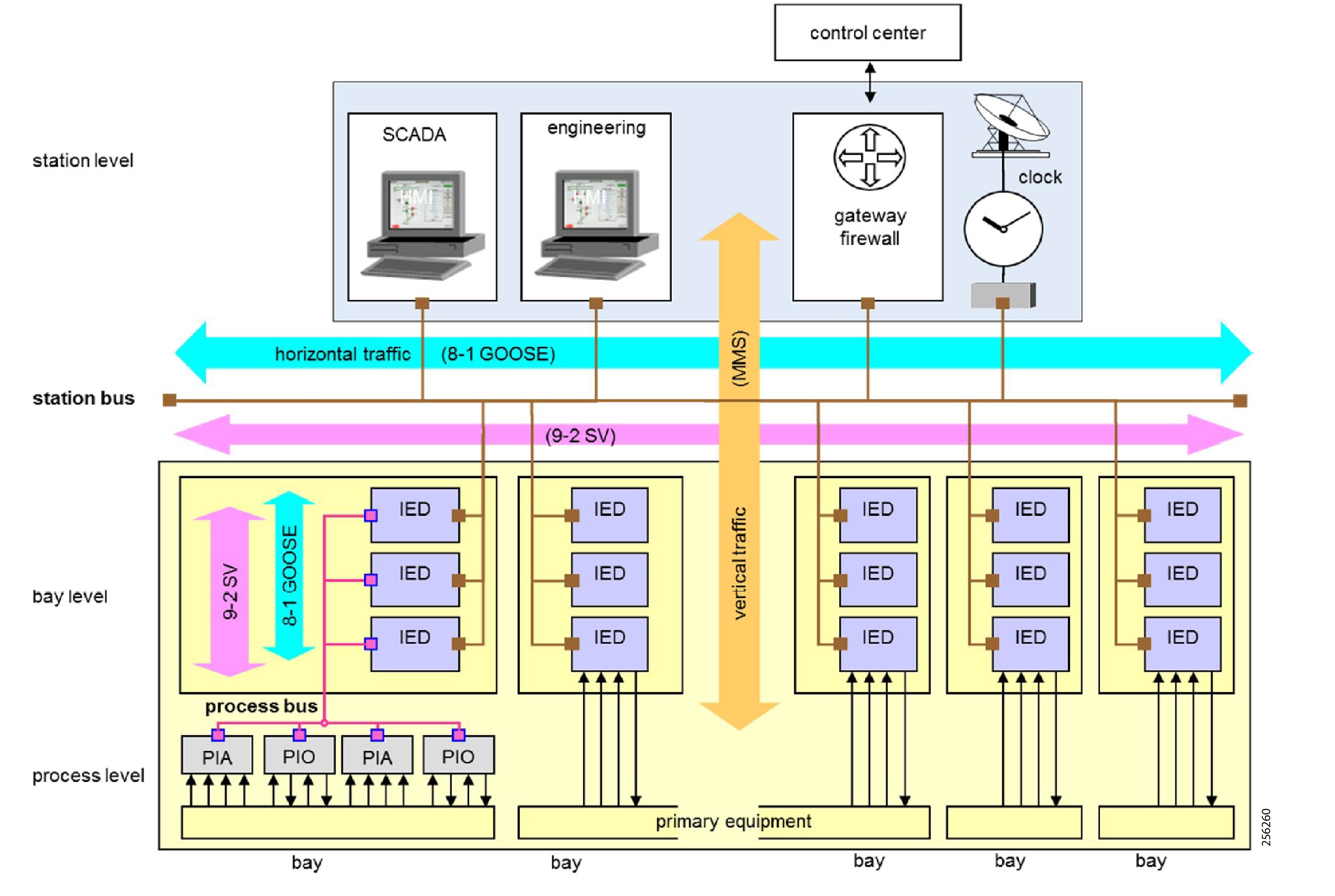

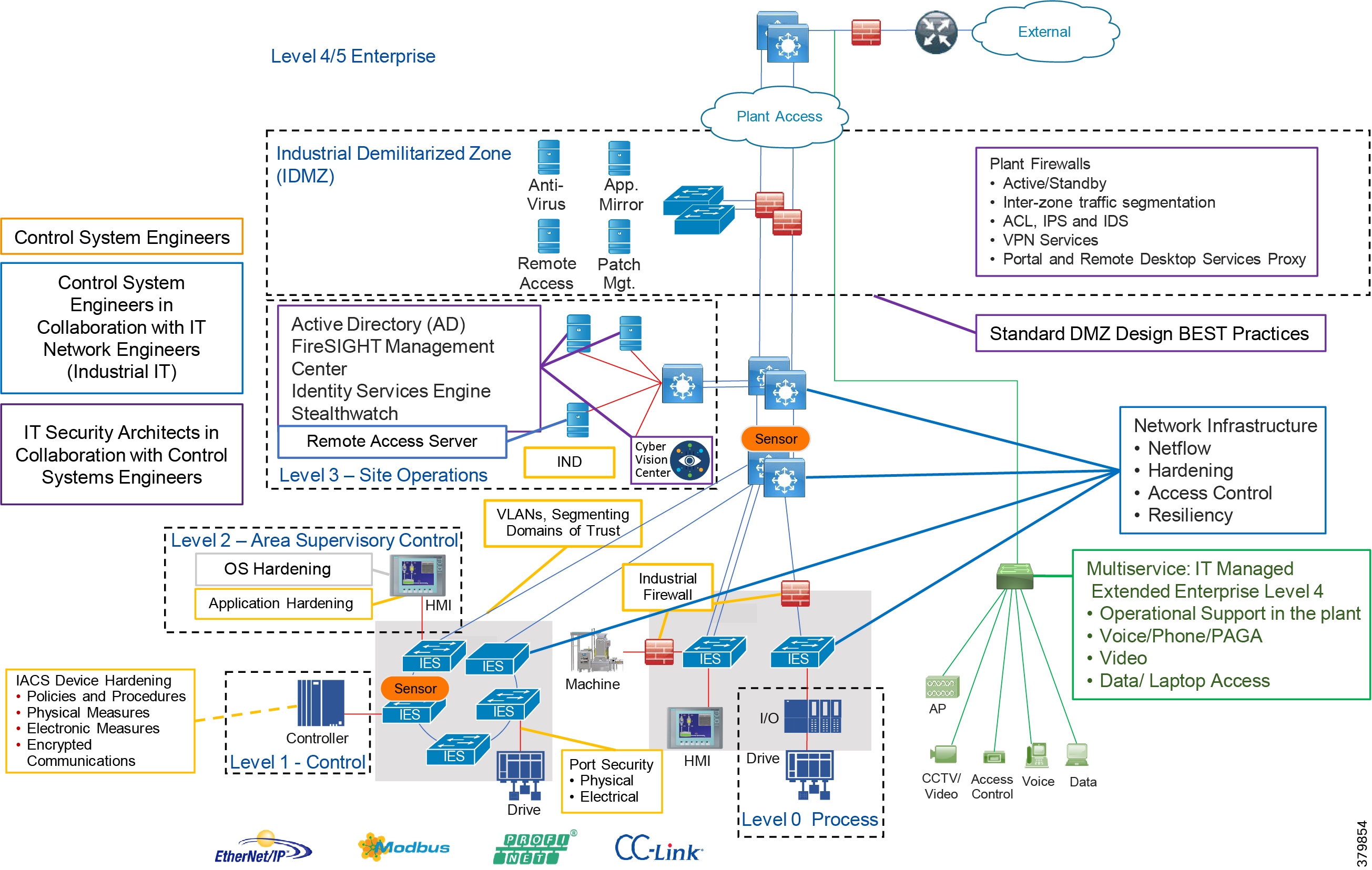

To understand the security and network systems requirements of an IACS, this guide uses a logical framework to describe the basic functions and composition of an industrial system. The Purdue Model for Control Hierarchy (reference ISBN 1-55617-265-6) is a common and well-understood model in the industry that segments devices and equipment into hierarchical functions. Based on this segmentation of IACS technology, the International Society of Automation ISA-99 Committee for Industrial and Control Systems Security and IEC 62443 Industrial Cybersecurity framework have identified the levels and logical framework shown in Figure 4. Each zone and its related levels are described in detail in the section "Industrial Automation and Control System Reference Model" in Chapter 2 of the Converged Plantwide Ethernet (CPwE) Design and Implementation Guide:

https://www.cisco.com/c/dam/en/us/td/docs/solutions/Verticals/CPwE/CPwE-CVD-Sept-2011.pdf

Figure 4 Plant Logical Framework

This model identifies levels of operations and defines each level. In this CVD, levels refer to this concept of levels of operations. The Open Systems Interconnection (OSI) reference model, which defines layers of network communications, is also commonly referred to when discussing network architectures. The OSI model refers to layers of network communication functions. In this CVD, unless specified, layers refer to layers of the OSI model.
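The distinction between levels and layers can be sketched in code as two separate vocabularies. This is only an illustration: Levels 0 and 1 use the names given in this guide, while the names shown for Levels 2 and 3 are assumptions, and the `describe` helper is hypothetical.

```python
from enum import Enum


class OperationsLevel(Enum):
    """Levels of operations in the plant logical framework."""
    PROCESS = 0
    BASIC_CONTROL = 1
    AREA_SUPERVISORY_CONTROL = 2     # name assumed for illustration
    SITE_OPERATIONS_AND_CONTROL = 3  # name assumed for illustration


class OsiLayer(Enum):
    """Layers of network communication in the OSI reference model."""
    PHYSICAL = 1
    DATA_LINK = 2
    NETWORK = 3
    TRANSPORT = 4
    SESSION = 5
    PRESENTATION = 6
    APPLICATION = 7


def describe(term) -> str:
    """Render a term with the right prefix so levels and layers are not confused."""
    name = term.name.replace("_", " ").title()
    if isinstance(term, OperationsLevel):
        return f"Level {term.value} ({name})"
    if isinstance(term, OsiLayer):
        return f"Layer {term.value} ({name})"
    raise TypeError("expected an OperationsLevel or an OsiLayer")


print(describe(OperationsLevel.BASIC_CONTROL))  # Level 1 (Basic Control)
print(describe(OsiLayer.DATA_LINK))             # Layer 2 (Data Link)
```

Keeping the two as distinct types means "Level 2" and "Layer 2" never compare as equal, which mirrors how the terms are kept apart in the text.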

Safety Zone—Safety in the Industrial Automation Control System

The need for safety is imperative in industrial environments. For example, in a manufacturing environment, a robot can cause a fatal impact to personnel if proper safety procedures are not followed. Indeed, even when such procedures are followed, the robot can cause harm if it is under malicious control. Another example is Substation Automation, where the need for safety is crucial in such a high-voltage environment. As with robots in Manufacturing, a malicious actor could easily impact safety by simply engaging relays that were expected to provide isolation.

Safety in the IACS is so important that not only are safety networks isolated from (and overlaid on) the rest of the IACS, they typically have color-coded hardware and are subject to more stringent standards. In addition, Personal Protective Equipment (PPE) and physical barriers are required to promote safety. Industrial automation allows safety devices to coexist and interoperate with standard IACS devices on the same physical infrastructure, which reduces cost and improves operational efficiency.

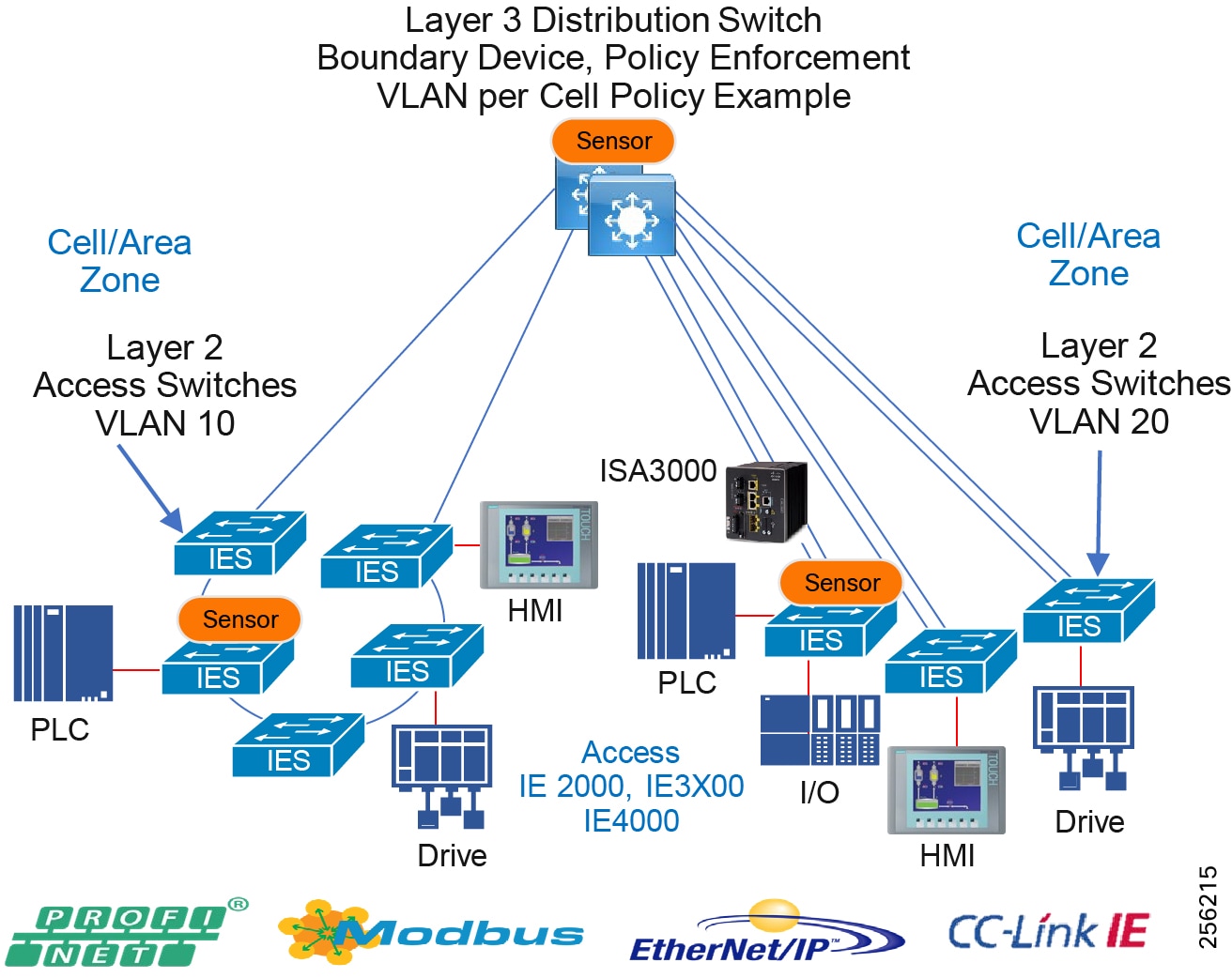

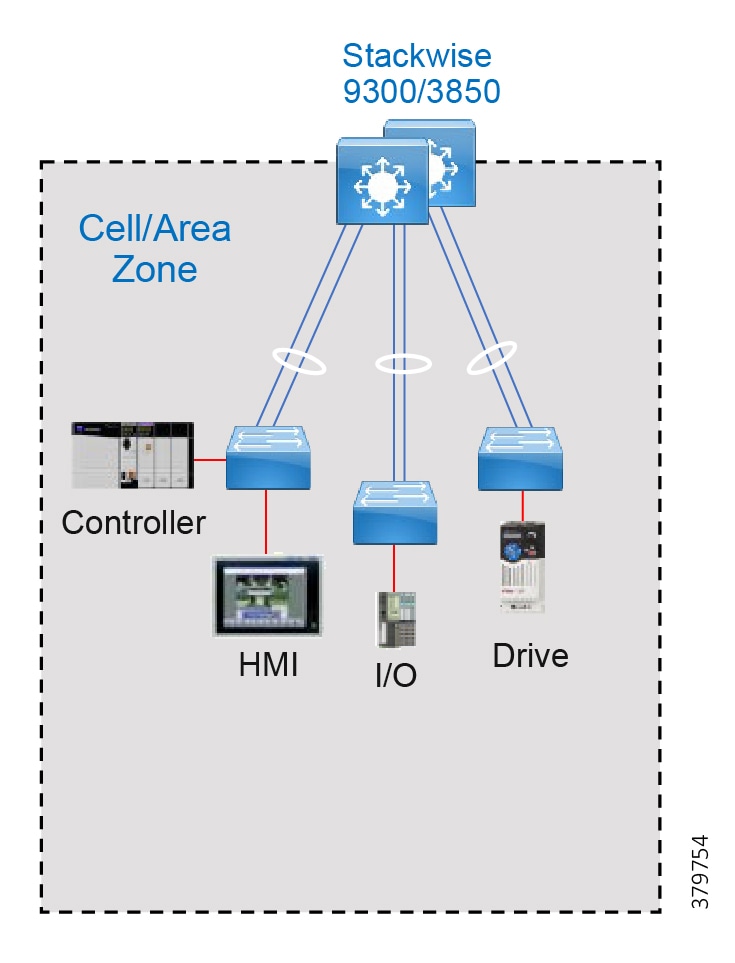

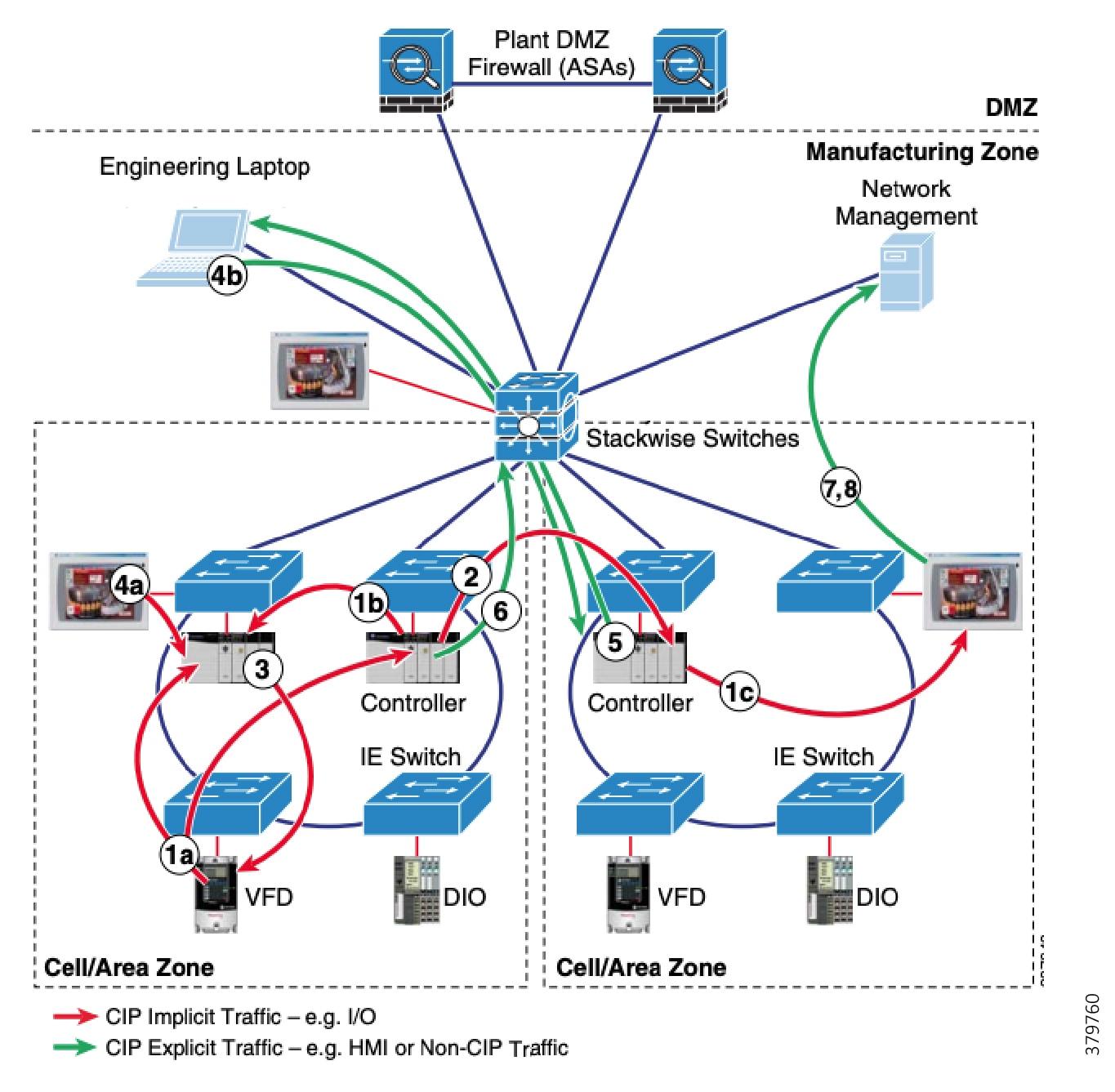

Cell Area/Zones—Access and Control

The Cell/Area Zone is a functional area within a plant facility, and many plants have multiple Cell/Area Zones. Larger plants might have "Zones" designated for fairly broad processes that have smaller subsets of "Cell Areas" within them, where the process is broken down into ever smaller subsets. For example, in an automotive assembly plant, a "Zone" might be a paint shop, where unfinished chassis arrive from the stamping plant, are painted, and then proceed to the rest of assembly. In this case, a "Cell" within that Zone might be a Cell for priming that feeds another Cell for base color and yet another Cell for top coat.

Because most networks in this area carry both non-critical traffic (for example, Historian) and time-critical, deterministic traffic (for control loops), managed switching with strict quality of service (QoS) requirements is necessary. All switches, routers, firewalls, and so on are strictly maintained. Unmanaged switches do exist in the machine networks (routers and firewalls do not), but they are viable only because the traffic there is highly controlled. They are sometimes preferred over fully managed switches, which offer troubleshooting and monitoring capabilities, because of their faster boot times and ease of replacement.
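The idea of serving time-critical control traffic ahead of non-critical traffic can be illustrated with a toy strict-priority queue. This is a conceptual sketch only: the class names, priority values, and `StrictPriorityPort` are hypothetical, and real switches classify, mark, and queue traffic in hardware according to their own QoS configuration.

```python
import heapq
from itertools import count

# Hypothetical priority values; a lower number is served first.
PRIORITY = {"control": 0, "historian": 1}


class StrictPriorityPort:
    """Toy egress port that always sends control frames before historian frames."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker that keeps FIFO order within a class

    def enqueue(self, traffic_class, payload):
        entry = (PRIORITY[traffic_class], next(self._arrival), traffic_class, payload)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        """Return (traffic_class, payload) for the next frame, or None if empty."""
        if not self._heap:
            return None
        _, _, traffic_class, payload = heapq.heappop(self._heap)
        return traffic_class, payload


port = StrictPriorityPort()
port.enqueue("historian", "h1")
port.enqueue("control", "c1")
port.enqueue("historian", "h2")
port.enqueue("control", "c2")
sent = [port.dequeue()[1] for _ in range(4)]
print(sent)  # ['c1', 'c2', 'h1', 'h2']
```

Both control frames leave before any historian frame even though a historian frame arrived first, which is the behavior that keeps control-loop latency and jitter low when the two traffic types share a link.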

This zone has essentially three levels of activity occurring, as described in the following subsections.

Level 0—Process

Level 0 consists of a wide variety of sensors and actuators involved in the basic industrial process. These devices perform the basic functions of the IACS, such as driving a motor, measuring variables, setting an output, and performing key functions such as painting, welding, bending, and so on. These functions can range from very simple (a temperature gauge) to highly complex (a moving robot).

These devices take direction from and communicate status to the control devices in Level 1 of the logical model. In addition, other IACS devices or applications may need to directly access Level 0 devices to perform maintenance or resolve problems on the devices. The main attributes of Level 0 devices are:

■ Drive the real-time, deterministic communication requirements

■ Measure the process variables and control process outputs

■ Exist in challenging physical environments that drive topology constraints

■ Vary according to the size of the IACS network from a small (10s) to a large (1000s) number of devices

■ Once designed and installed, are not replaced altogether until the plant line is overhauled or replaced, which is typically five or more years

Level 1—Basic Control

Level 1 consists of controllers that direct and manipulate the manufacturing process, primarily interfacing with the Level 0 devices (for example, I/O, sensors and actuators). In discrete environments, the controller is typically a PLC, whereas in process environments, the controller is referred to as a distributed control system (DCS). For the purposes of this solution architecture, "controllers" refers to multidiscipline controllers used across industries.

IACS controllers run industry-specific operating systems that are programmed and configured from engineering workstations. IACS controllers are modular computers that consist of some or all of the following:

■ A controller that computes all the data and executes programs loaded onto it

■ I/O or network modules that communicate with Level 0 devices, Level 2 human-machine interfaces (HMIs), or other Level 1 controllers

■ Integrated or separate power modules that deliver power to the rest of the controller and potentially other devices

IACS controllers are the intelligence of the IACS, making the basic decisions based on feedback from the devices found at Level 0. Controllers act alone or in conjunction with other controllers to manage the devices and thereby the industrial process. Controllers also communicate with other functions in the IACS (for example, Historian, asset manager, and manufacturing execution system) in Levels 2 and 3. The controller performs a director function in the Industrial zone, translating high-level parameters (for example, recipes) into executable orders, consolidating the I/O traffic from devices, and passing the I/O data on to the upper-level plant floor functions.

Thus, controllers produce IACS network traffic in three directions from a level perspective:

■ Downward to the devices in Level 0 that they control and manage

■ Peer-to-peer to other controllers to manage the IACS for a Cell/Area Zone

■ Upward to HMIs and information management systems in Levels 2 and 3
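The three traffic directions listed above can be illustrated with a minimal Python sketch. The enum and function names are hypothetical and are not part of the solution; the only input assumed is the Purdue level of the destination of traffic sent by a Level 1 controller.

```python
from enum import Enum


class Direction(Enum):
    DOWNWARD = "downward"
    PEER_TO_PEER = "peer-to-peer"
    UPWARD = "upward"


def controller_traffic_direction(dst_level: int) -> Direction:
    """Classify traffic sent by a Level 1 controller by destination level.

    Hypothetical helper: only Levels 0 to 3 are accepted, because the text
    describes controller traffic inside the Industrial zone.
    """
    if dst_level not in (0, 1, 2, 3):
        raise ValueError(f"unexpected destination level: {dst_level}")
    if dst_level == 0:
        return Direction.DOWNWARD      # to the devices it controls
    if dst_level == 1:
        return Direction.PEER_TO_PEER  # to other controllers
    return Direction.UPWARD            # to HMIs and information systems
```

For example, `controller_traffic_direction(2)` returns `Direction.UPWARD`, matching traffic from a controller to an HMI.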

Level 2—Area Supervisory Control

Level 2 represents the applications and functions associated with the Cell/Area Zone runtime supervision and operation, which include:

■ Operator interfaces or HMIs

■ Alarms or alerting systems

■ Control room workstations

Depending on the size or structure of a plant, these functions may exist at the site level (Level 3). These applications communicate with the controllers in Level 1 and interface or share data with the site level (Level 3) or enterprise (Levels 4 to 5) systems and applications through the demilitarized zone (DMZ). These applications can be implemented on dedicated IACS vendor operator interface terminals or on standard computing equipment and operating systems such as Microsoft Windows. These applications are more likely to communicate with standard Ethernet and IP networking protocols and are typically implemented and maintained by the industrial organization.

Industrial Zone

The Industrial zone comprises the Cell/Area zones (Levels 0 to 2) and site-level (Level 3) activities. The Industrial zone is important because all the IACS applications, devices, and controllers critical to monitoring and controlling the plant floor IACS operations are in this zone. To preserve smooth plant operations and functioning of the IACS applications and IACS network in alignment with standards such as IEC 62443, this zone requires clear logical segmentation and protection from Levels 4 and 5.

Level 3—Site Operations and Control

Level 3, the site level, represents the highest level of the IACS. This space is generally "carpeted space," meaning an HVAC-conditioned environment with commercial-grade, 19-inch rack-mounted equipment arranged in hot/cold aisles.

As the name implies, this is where applications related to operating the site reside, where operating the site means the applications and services that directly drive production. Generally not included at this level are the more enterprise-centric applications such as Enterprise Resource Planning (ERP) systems or Manufacturing Execution Systems (MES), as those tend to be business management applications and are therefore more closely aligned and integrated with enterprise applications. Examples of services at this level are Historians, control applications, network and IACS management software, and network security services. Control applications vary greatly with the specifics of the plant. An example from an automotive assembly plant is a Paint Coordination application that might directly control how chassis coming from the Stamping Plant are fed to Robotic Paint Controllers in the Paint zone. The Level 1 controllers (the Robotic Controller in this example) would often be ruggedized, require determinism for their control loops, and be at or near the actual operations. In contrast, the Paint Coordination applications at the Site level are not true control loop applications and can reside in carpeted space.

The systems and applications that exist at this level manage plantwide IACS functions. Levels 0 through 3 are considered critical to site operations. The applications and functions that exist at this level include the following:

■ Level 3 IACS network

■ Reporting (for example: cycle times, quality index, predictive maintenance)

■ Plant historian

■ Detailed production scheduling

■ Site-level operations management

■ Asset and material management

■ Control room workstations

■ Patch launch server

■ File server

■ Other domain services, for example Active Directory (AD), DHCP, Domain Name System (DNS), Windows Internet Naming Service (WINS), Network Time Protocol (NTP), Precision Time Protocol Grandmaster Clock, and so on

■ Terminal server for remote access support

■ Staging area

■ Administration and control applications

The Level 3 IACS network may communicate with Level 1 controllers and Level 0 devices, function as a staging area for changes into the Industrial zone, and share data with the enterprise (Levels 4 and 5) systems and applications through the DMZ. These applications are primarily based on standard computing equipment and operating systems (Unix-based or Microsoft Windows). For this reason, these systems are more likely to communicate with standard Ethernet and IP networking protocols.

Additionally, because these systems tend to be more aligned with standard IT technologies, they may also be implemented and supported by personnel with IT skill sets.

Enterprise Zone

Level 4—Site Business Planning and Logistics

Level 4 is where the functions and systems that need standard access to services provided by the enterprise network reside. This level is viewed as an extension of the enterprise network. The basic business administration tasks are performed here and rely on standard IT services. These functions and systems include wired and wireless access to enterprise network services such as the following:

■ Access to the Internet and email (hosted in data centers)

■ Non-critical plant systems such as manufacturing execution systems and overall plant reporting such as inventory, performance, and so on

■ Access to enterprise applications such as SAP and Oracle (hosted in data centers)

Although important, these services are not viewed as critical to the IACS and thus the plant floor operations. Because of the more open nature of the systems and applications within the enterprise network, this level is often viewed as a source of threats and disruptions to the IACS network.

The users and systems in Level 4 often require summarized data and information from the lower levels of the IACS network. The network traffic and patterns here are typical of a branch or campus network found in general enterprises where approximately 90 percent of the network traffic goes to the Internet or to data center applications.

This level is typically under the management and control of the IT organization.

Level 5—Enterprise

Level 5 is where the centralized IT systems and functions exist. Enterprise resource management, business-to-business, and business-to-customer services typically reside at this level. Often the external partner or guest access systems exist here, although it is not uncommon to find them in lower levels (Level 3) of the framework to gain flexibility that may be difficult to achieve at the enterprise level. However, this approach may lead to significant security risks if not implemented within IT security policy and standards.

The IACS must communicate with the enterprise applications to exchange manufacturing and resource data. Direct access to the IACS is typically not required. One exception to this would be remote access for management of the IACS by employees or partners such as system integrators and machine builders. Access to data and the IACS network must be managed and controlled through the DMZ to maintain the security, availability, and stability of the IACS.

The services, systems, and applications at this level are directly managed and operated by the IT organization.

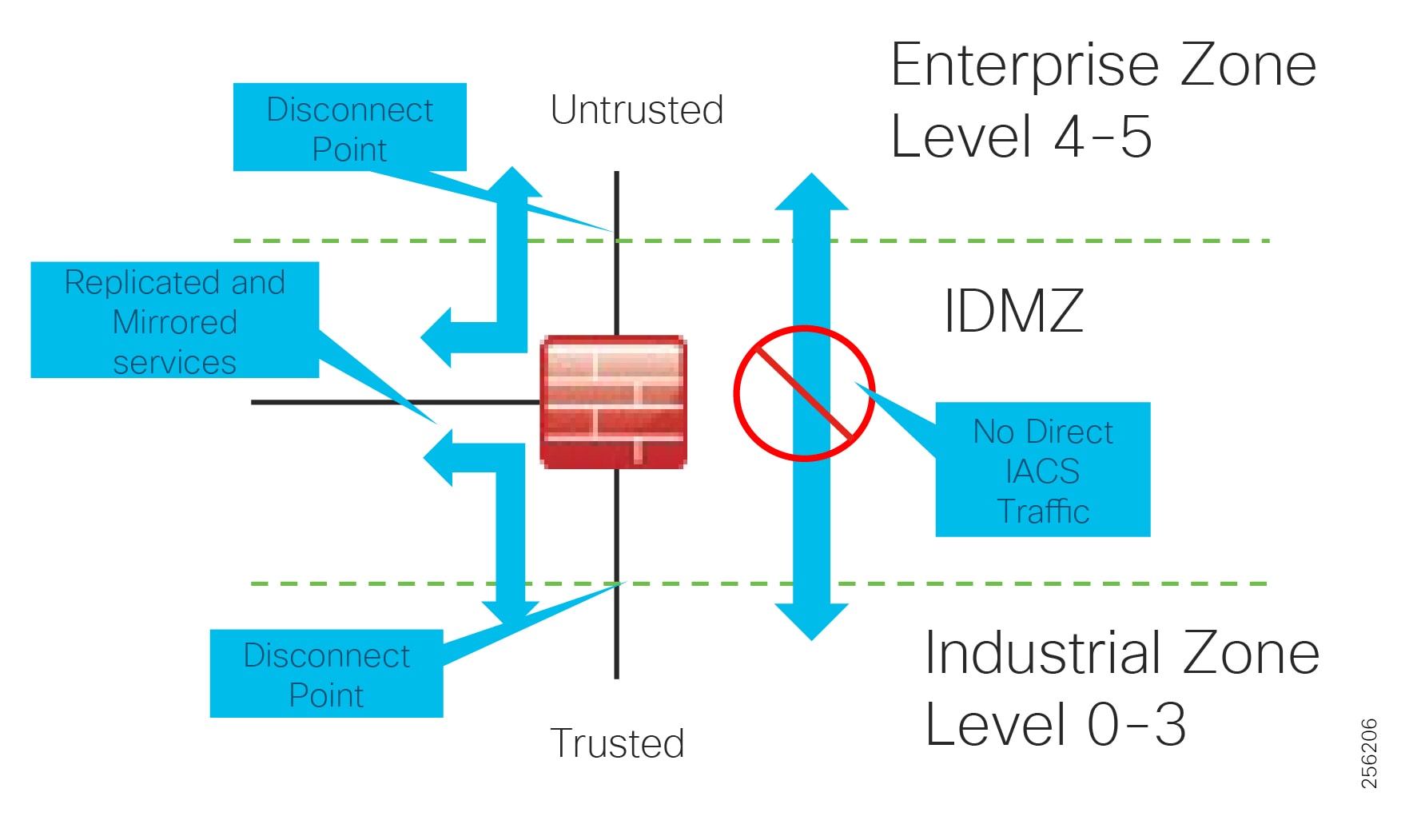

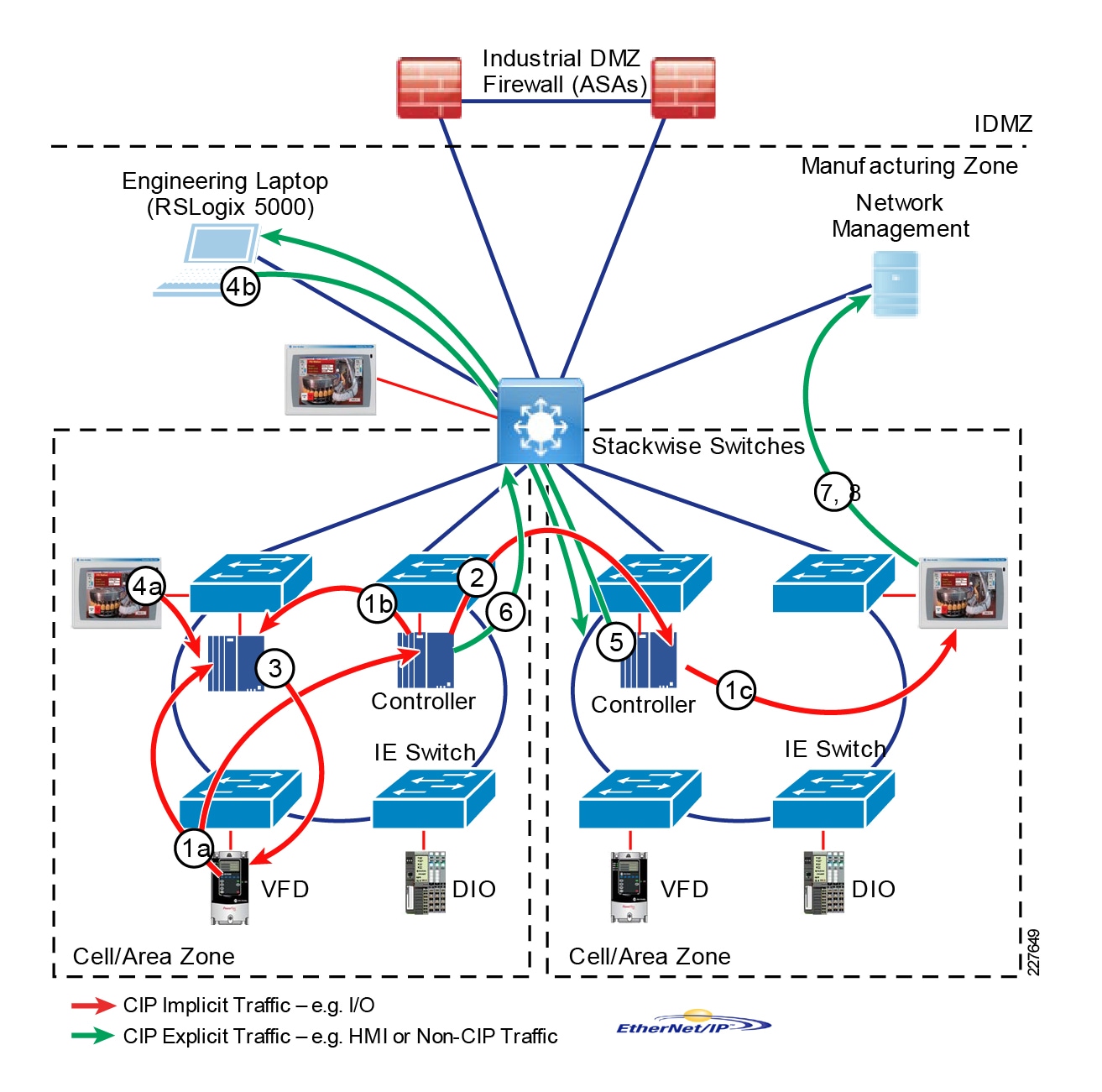

Industrial DMZ

Although not part of the Purdue reference model, the industrial automation solution includes a DMZ between the Industrial and Enterprise zones. The industrial DMZ is deployed within plant environments to separate the enterprise networks from the operational domain of the plant environment. Downtime in the IACS network can be costly and have a severe impact on revenue, so the operational zone must be protected from outside influences. Network access is not permitted directly between the enterprise and the plant. However, data and services must still be shared between the zones, so the industrial DMZ provides an architecture for the secure transport of data. Typical services deployed in the DMZ include remote access servers and mirrored services. Further details on the design recommendations for the industrial DMZ can be found later in this guide.

As with IT network DMZs, the industrial DMZ is primarily a buffer between the plant floor and the enterprise or the Internet, placing the most vulnerable services, such as email, web, and DNS servers, in this isolated network. The industrial DMZ isolates the factory not only from the outside world, but also from its own enterprise networks. The primary reason this additional isolation is recommended is that, unlike enterprise services, the plant floor contains the most critical services of the company: the services that produce the very product the company sells. Often applications on the plant floor are antiquated, running on vulnerable operating systems such as Windows 95. The industrial DMZ provides another level of security for these vulnerable systems.

Another key use of the industrial DMZ is for remote access, aiding troubleshooting of production equipment affecting the company product and therefore revenue. The risk of external access compounded with antiquated equipment underscores the need for some additional security measures. The details as to how the industrial DMZ is used for such services are described in later sections.
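The zone boundary described above can be sketched in a few lines of Python. This is a simplified illustration, not the solution's enforcement mechanism: the function names are hypothetical, the level-to-zone mapping follows the text (Levels 0 to 3 in the Industrial zone, Levels 4 and 5 in the Enterprise zone), and policy inside a single zone is deliberately ignored.

```python
INDUSTRIAL_LEVELS = frozenset({0, 1, 2, 3})
ENTERPRISE_LEVELS = frozenset({4, 5})


def zone_of(level: int) -> str:
    """Map a Purdue level to its zone, as described in the text."""
    if level in INDUSTRIAL_LEVELS:
        return "industrial"
    if level in ENTERPRISE_LEVELS:
        return "enterprise"
    raise ValueError(f"unknown Purdue level: {level}")


def flow_permitted(src_level: int, dst_level: int, via_dmz: bool = False) -> bool:
    """Return True if a flow respects the industrial DMZ boundary.

    Assumption: flows that stay inside one zone are left to that zone's
    own policy and are reported as permitted here; flows that cross
    between the Enterprise and Industrial zones must go through the DMZ.
    """
    same_zone = zone_of(src_level) == zone_of(dst_level)
    return same_zone or via_dmz
```

Under these assumptions, `flow_permitted(4, 3)` is `False` (direct enterprise-to-plant access), while `flow_permitted(4, 3, via_dmz=True)` is `True`.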

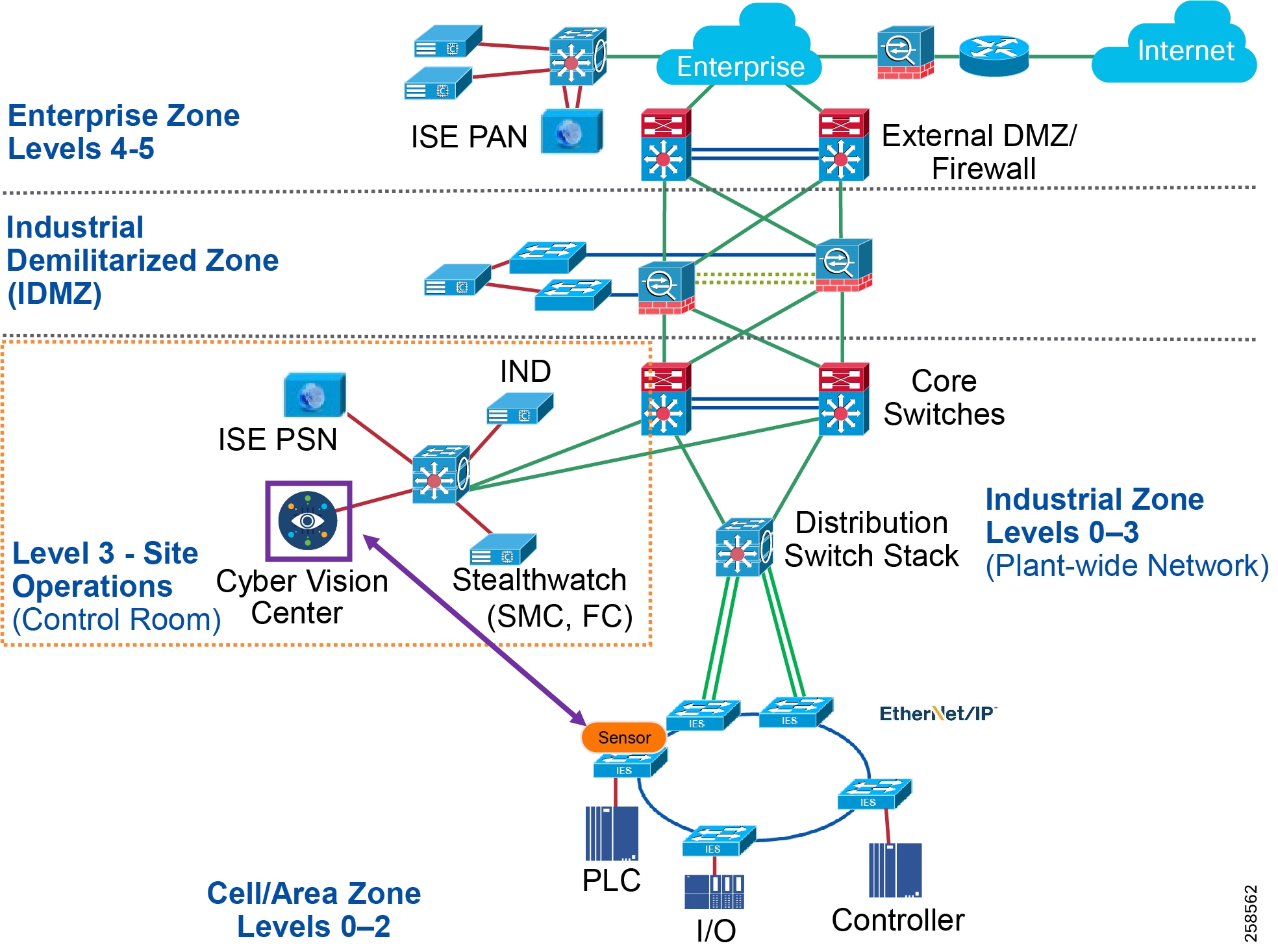

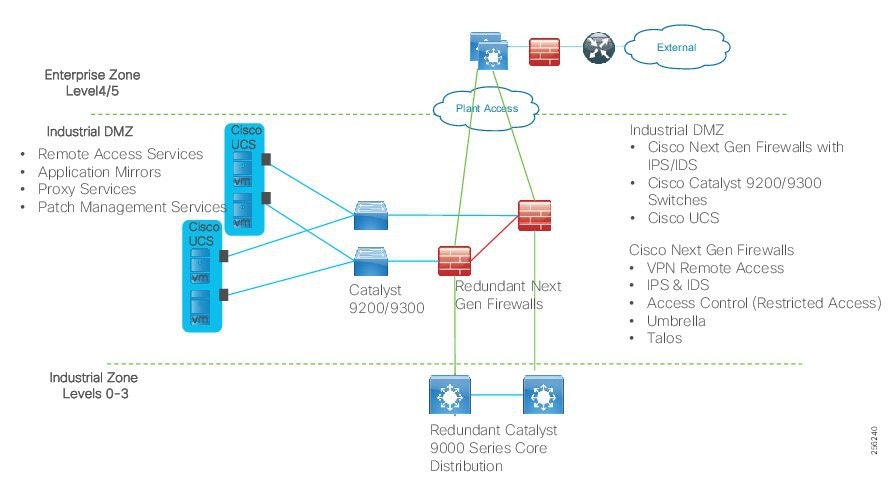

Figure 5 Industrial Plant Reference Architecture with IDMZ

IACS Requirements and Considerations

Operational Technology Application Requirements

OT applications at their core are focused on maintaining stability, continuity, and integrity of industrial processes. Central to this is a loop of sensors, controllers, and actuators that must be maintained to properly operate the industrial processes. Additionally, a number of other applications need to gather information to display status, maintain history, and optimize the industrial process operations. From this standpoint, this solution outlines how to achieve a set of key requirements to support the OT applications, including:

■ High availability as applications often have to run 24 hours a day, 365 days a year

■ Focus on local, real-time communication between IACS devices requiring low latency and jitter in the communication to maintain the control loop integrity

■ Ability to access diagnostic and telemetry information from IACS devices for IoT-based applications

■ Difficulty updating or changing devices, software, or configurations, as the processes often run for extended periods of time

■ Deployable in a range of industrial environmental conditions with industrial-grade as well as COTS IT equipment when applicable

■ Scalable from small (tens to hundreds of IACS devices) to very large (thousands to 10,000s) deployments

■ Access to precise time for challenging applications such as Motion Control and Sequence of Events

■ Simple, easy-to-use management tools to facilitate deployment and maintenance, especially by OT personnel with limited IT capabilities and knowledge

■ Use of open standards to ensure vendor choice and protection from proprietary constraints

Ruggedization and Environmental Requirements

Typical enterprise network devices reside in controlled environments. This is a key differentiator of the IACS: its end devices and network infrastructure are often located in harsh environments that require compliance with environmental specifications such as IEC 60529 (formerly IEC 529, ingress protection) or National Electrical Manufacturers Association (NEMA) specifications. The IACS end devices and network infrastructure may be located in physically disparate locations and in non-controlled or even harsh environmental conditions such as extreme temperature, humidity, vibration, noise, explosive atmospheres, or electronic interference.

Because of these environmental considerations, the IACS devices and network infrastructure must withstand these harsh conditions. In addition, a DIN rail-compliant form factor is well suited to industrial environments, in contrast to enterprise equipment, which typically resides in 19-inch racks.

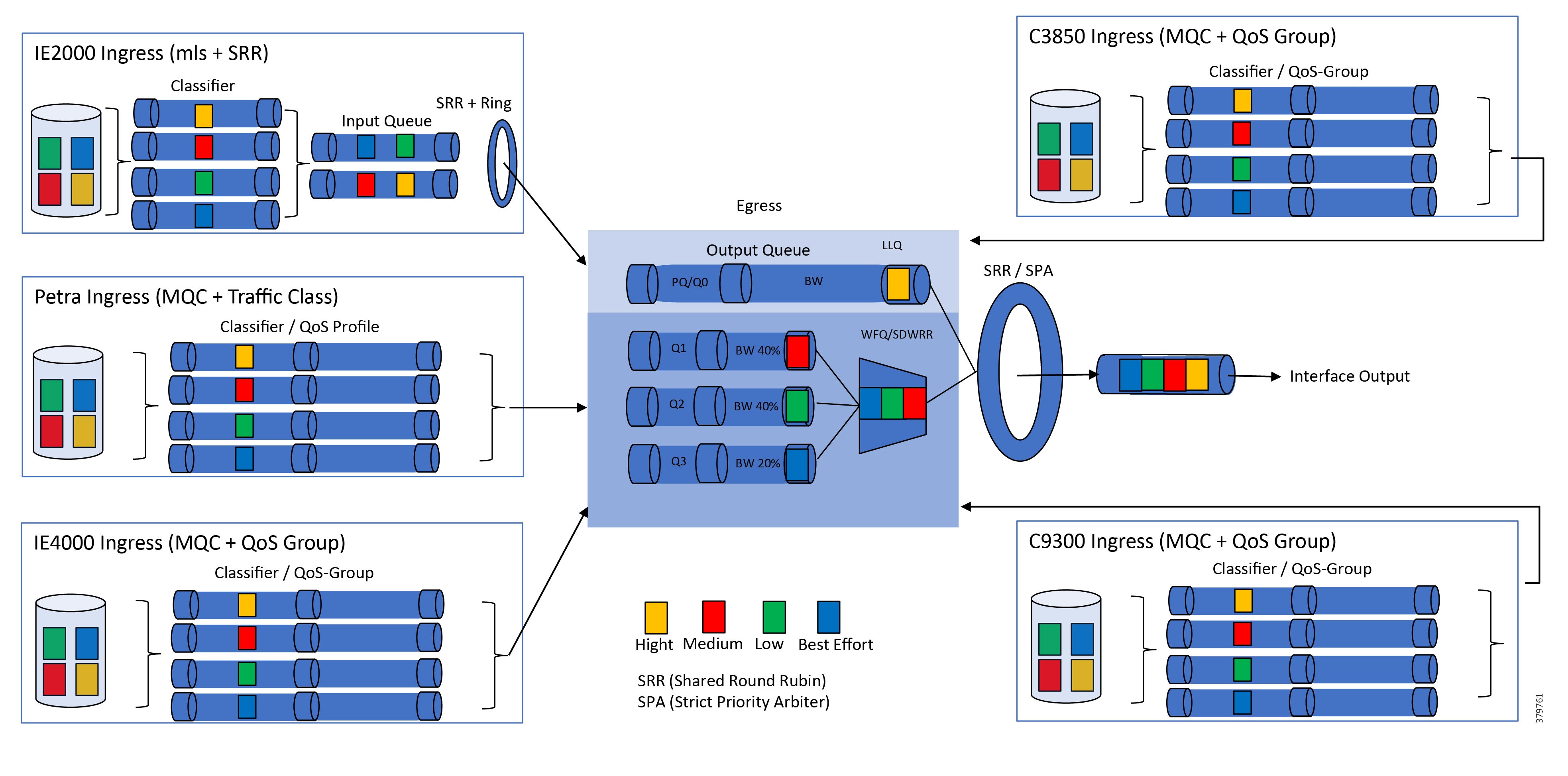

Performance in the Industrial Automation Control System

Performance is an important consideration for design of the network. Networking engineers in general have dealt with both latency and jitter for many years, especially in VoIP networks. However, in industrial automation networks, especially as the networking gets closer to the lower Purdue levels (ANSI/ISA-95/Purdue Levels 0 to 1: machines, relays), the requirements for both latency and jitter become orders of magnitude more stringent than in VoIP networks. Industrial automation network equipment is very demanding and some of these devices have limited software and processing capabilities, which makes them susceptible to network-related disruptions or extraneous communication. In addition, a very quickly changing manufacturing process (for example, a paper mill) or complex automation (for example, a multi-axis robot) demands very high levels of determinism, that is, predictable inter-packet delay, in the IACS. A lack of determinism in the network can cause an industrial process to fail and shut down, resulting in downtime that impacts the business. To support the level of determinism needed for industrial automation networks, the following must be considered:

■ Marking the applications that need real-time traffic with high priority

■ Creating a QoS policy that guarantees an appropriate bandwidth for the high priority traffic

■ Planning an appropriate bandwidth on the network links
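Determinism as described above, meaning predictable inter-packet delay, can be quantified from packet arrival timestamps. The following Python sketch is illustrative only: the function names, the millisecond unit, and the choice of "largest deviation from the expected interval" as the jitter metric are assumptions, not definitions taken from this guide.

```python
from statistics import mean


def inter_packet_delays(timestamps_ms):
    """Return the delay between each pair of consecutive arrivals."""
    return [later - earlier
            for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def jitter_report(timestamps_ms, expected_interval_ms):
    """Summarize how far observed delays stray from the expected interval.

    Assumption: jitter is reported as the largest absolute deviation of
    any inter-packet delay from the expected (configured) interval.
    """
    delays = inter_packet_delays(timestamps_ms)
    if not delays:
        raise ValueError("at least two timestamps are required")
    deviations = [abs(delay - expected_interval_ms) for delay in delays]
    return {
        "mean_delay_ms": mean(delays),
        "max_jitter_ms": max(deviations),
    }


# Hypothetical capture: a device expected to send every 10 ms.
report = jitter_report([0.0, 10.0, 20.5, 30.0], expected_interval_ms=10.0)
```

In this hypothetical capture the mean delay is 10.0 ms and the worst deviation is 0.5 ms. Whether that is acceptable depends on the tolerance of the specific IACS application.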

Availability is the most important aspect of IACS networks. This highlights another key difference from non-industrial networking: while most IT networks have become more and more "business critical" over the years, they are generally not at the core of the business, but rather part of a service organization. In contrast, OT networking is business critical in that it is part of what the company actually does; when critical parts of the OT network go down, production stops and revenue is impacted. Note that some parts of the IACS network are more critical than others and therefore have higher availability requirements. Such criticality is ultimately translated into availability or Service Level Agreement (SLA) requirements, which are addressed in various parts of this CVD, most notably in the sections describing ways of increasing availability, such as resiliency protocols.

To achieve availability:

■ No single point of failure for IACS network infrastructure. For example: redundant links, switches, Layer 3 devices, and firewalls.

■ Implement network resiliency and convergence protocols which meet the IACS application requirements.

■ Quick and easy zero-touch replacement of network devices in industrial environments.
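The effect of removing single points of failure can be estimated with the standard series and parallel availability formulas. The Python sketch below is a rough planning aid under stated assumptions: component failures are independent, the 0.99 figure is a hypothetical example rather than a value from this guide, and the function names are illustrative.

```python
from math import prod

HOURS_PER_YEAR = 8760


def series_availability(availabilities):
    """Availability of a chain in which every component must be up."""
    return prod(availabilities)


def parallel_availability(availability, copies):
    """Availability of redundant copies where one working copy suffices.

    Assumes the copies fail independently of each other.
    """
    return 1 - (1 - availability) ** copies


def downtime_hours_per_year(availability):
    """Expected hours of downtime per year for a given availability."""
    return (1 - availability) * HOURS_PER_YEAR


single_switch = 0.99                               # hypothetical figure
redundant_pair = parallel_availability(single_switch, 2)
```

With these hypothetical numbers, a single switch implies about 87.6 hours of downtime per year, while a redundant pair implies under one hour. Real deployments also depend on how quickly the resiliency protocol converges after a failure.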

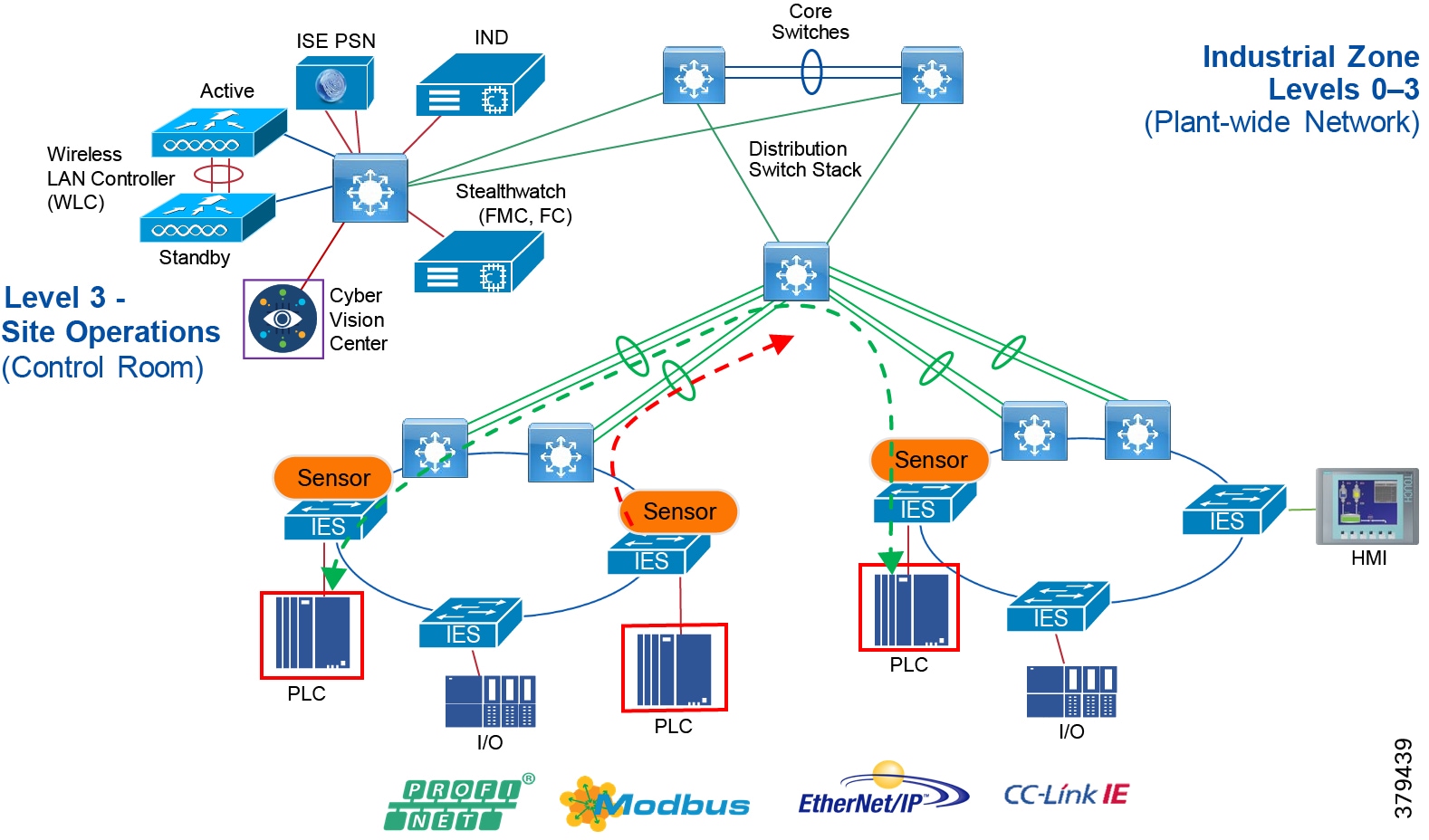

Security

The traditional approach to security in industrial automation is "security by obscurity", which is to have a very closed air-gap environment and implement proprietary protocols with no public access. The need for physical security can easily be seen in not only the examples above, but in real world examples such as Stuxnet (https://en.wikipedia.org/wiki/Stuxnet). Stuxnet demonstrated that "security by obscurity" and even "air-gapping" are insufficient security measures (more on those topics later). In addition to the Stuxnet example, the need for physical security can be seen with more generic "man-in-the-middle" attacks or simple "network taps", where physical devices are inserted into the network. This intersection of physical and cybersecurity is generally a component of all industrial automation networks. The proprietary protocols were seen as being difficult to compromise and security incidents were more likely to be accidental. However, over the course of the last few years the industrial ecosystem has moved away from the use of proprietary network technologies to the use of open, standard networking such as Ethernet, Wi-Fi, IP, and so on.

This approach of adding obscurity as a way of securing the networks does not meet the requirements of the current trends in industrial automation:

■ Proliferation of many devices on the plant floor: First, plant networks are increasingly using COTS technology products to perform operational tasks, replacing devices that were built from the ground up specifically for the process control environment. Second, many sensors are added to the plant floor as part of big data and analytics, primarily to collect data from the machines that can be used to enhance the productivity of the plant floor and to perform preventive maintenance on the equipment. These new devices support standard protocols and may also need access to certain resources such as the cloud and the Internet.

■ Convergence of IT and OT: Organizationally, IT and OT teams and tools, which were historically separate, have begun to converge, leading to more traditionally IT-centric solutions being introduced to support operational activities. As the borders between traditionally separate OT and IT domains blur, they must align strategies and work more closely together to ensure end-to-end security.

Security Characteristics

With these trends in the manufacturer networks, the following fundamental principles must be adopted by the plant network operator to ensure secure systems:

■ Visibility of all devices in the plant network: Traditionally, enterprise devices such as laptops, mobile phones, printers, and scanners were identified by the enterprise management systems when these devices accessed the network. This visibility must be extended to all devices on the plant floor.

■ Segmentation and zoning of the network: Segmentation is the process of bounding the reachability of a device, and zoning is defining a layer in which all the members of that zone have identical security functions. Providing zones in a network provides an organized way of managing access within and across the zones. Segmenting the devices further reduces the risk of an infection spreading when a device is infected with malware.

■ Identification and restricted data flow: All the devices on the plant floor, both enterprise (IT-managed) and operations (OT-managed), must be identified, authenticated, and authorized, and the network must enforce a policy when the users and IACS assets attach to the network.

■ Network anomalies: Any unusual behavior in network activity must be detected and examined to determine if the change is intended or due to a malfunction of the device. Detecting network anomalies as soon as possible gives plant operations the means to remediate an abnormality in the network sooner, which can help to reduce possible downtimes.

■ Malware detection and mitigation: The unusual behavior displayed by an infected device must be detected immediately and the security tools should allow remediation of the infected device.

■ Traditional firewalls are not typically built for industrial environments. There is a need for an industrial firewall which can perform deep packet inspection on industrial protocols to identify anomalies in IACS traffic flow.

■ Hardening of the networking assets and infrastructure on the plant floor is a critical consideration. This includes securing key management and control protocols such as Simple Network Management Protocol (SNMP), among others.

■ Automation and Control protocols: It is also important to monitor the IACS protocols themselves for anomalies and abuse.

■ Adhering to the security standards: In the 1990s, the Purdue Reference Model and ISA 95 created a strong emphasis on architecture using segmented levels between various parts of the control system. This was further developed in ISA99 and IEC 62443, which brought focus to risk assessment and process. The security risk assessment will identify which PMSs are defined as critical control systems, non-critical control systems, and non-control systems.
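The principles of restricted data flow and anomaly detection listed above can be illustrated with a baseline comparison. The Python sketch below is a toy model, not a description of any product feature: the `Flow` type, the device names, and the protocol labels are all hypothetical, and a real deployment would learn its baseline from observed traffic and inspect far more than three fields.

```python
from __future__ import annotations

from typing import Iterable, NamedTuple


class Flow(NamedTuple):
    src: str        # hypothetical device identifier
    dst: str        # hypothetical device identifier
    protocol: str   # hypothetical protocol label


def find_anomalies(baseline: Iterable[Flow], observed: Iterable[Flow]) -> list[Flow]:
    """Return observed flows absent from the baseline, in first-seen order."""
    allowed = set(baseline)
    reported: set[Flow] = set()
    anomalies: list[Flow] = []
    for flow in observed:
        if flow not in allowed and flow not in reported:
            reported.add(flow)
            anomalies.append(flow)
    return anomalies


# Hypothetical baseline: the HMI and the controller talk to each other.
baseline = [Flow("hmi-1", "plc-1", "cip"), Flow("plc-1", "hmi-1", "cip")]
observed = [
    Flow("hmi-1", "plc-1", "cip"),
    Flow("laptop-7", "plc-1", "http"),   # not in the baseline
    Flow("laptop-7", "plc-1", "http"),   # repeat, reported only once
]
unexpected = find_anomalies(baseline, observed)
```

Here `unexpected` contains the single flow from `laptop-7`, which an operator would then examine to decide whether the change was intended.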

Information Technology and Operational Technology Convergence

Historically, production environments and the IACS in them have been the sole responsibility of the operational organizations within enterprises. Enterprise applications and networks have been the sole responsibility of IT organizations. But as OT has started adopting standard networking, there has been a need to not only interconnect these environments, but to converge organizational capabilities and drive collaboration between vendors and suppliers.

Decisions impacting IACS networks are typically driven by plant managers and control engineers, rather than the IT department. Additionally, the IACS vendor and support supply chain are different than those typically used by the IT department. That being said, the IT departments of manufacturers are increasingly engaging with plant managers and control engineers to leverage the knowledge and expertise in standard networking technologies for the benefit of plant operations.

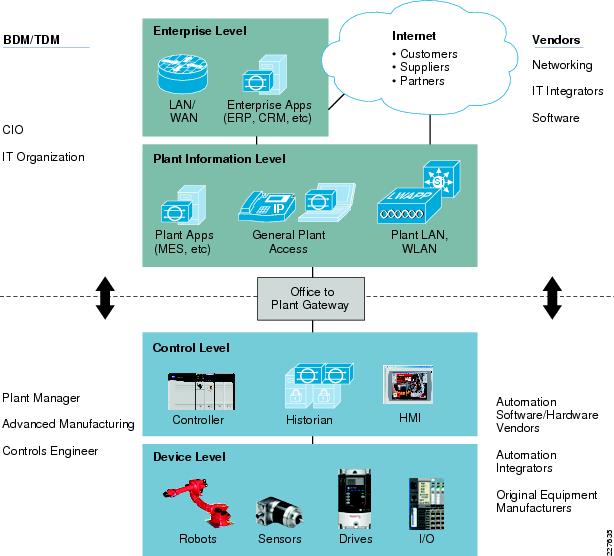

Figure 6 Business and Technical Decision Makers—IT and OT

Cross-Industry Industrial Networking Requirements

Table 2 summarizes cross-industry industrial network requirements, challenges, and Industrial Automation Solution features to help customers achieve various business outcomes.

Industry Standards and Regulations

Standards and guidelines are an essential foundation, but they do not prescribe how to secure and design specific systems. As all systems are different, standards and guidelines should be leveraged as a best practice framework and specifically tailored to business needs. In this section, a few of the industry standards are briefly described and limited to those that are both generally applicable and generally applied.

ISA-95/PERA (Purdue)

ISA-95 and PERA provide a general architecture for all types of IACS, providing not only common nomenclature but also common building blocks. More details can be found at:

■ ISA-95 web site: https://isa-95.com/

■ PERA web site: http://www.pera.net/

IEC 62443/ISA-99

The IEC 62443 series builds on established standards for the security of general-purpose IT systems (for example, the ISO/IEC 27000 series), identifying and addressing the important differences present in an industrial control system (ICS). Many of these differences are based on the reality that cybersecurity risks within an ICS may have Health, Safety, or Environment (HSE) implications and that the response should be integrated with other existing risk management practices addressing these risks.

NIST Cybersecurity Framework

The National Institute of Standards and Technology (NIST) Cybersecurity Framework (https://www.nist.gov/cyberframework) is a best practices guideline, not a requirements standard. It originated in the 2014 changes to the National Institute of Standards and Technology Act, which was amended to add "...on an ongoing basis, facilitate and support the development of a voluntary, consensus-based, industry-led set of standards, guidelines, best practices, methodologies, procedures, and processes to cost-effectively reduce cyber risks to critical infrastructure."

NIST 800 Series

The NIST 800 Series, as it is commonly called, is a set of documents from NIST covering U.S. government security policies, procedures, and guidelines. Although NIST is a U.S. government unit (under the Commerce Department), these guidelines are referenced and indeed mandated by not only the U.S. but many governments and corporations around the world, even those not directly involved in the public sector. For this CVD and the associated CVDs, NIST SP 800-82, "Guide to Industrial Control Systems Security," is of particular importance as it is specifically targeted at the IACS space. The purpose of this document is to provide guidance for securing ICS, including SCADA systems, DCS, and other systems performing control functions. The document provides a notional overview of ICS, reviews typical system topologies and architectures, identifies known threats and vulnerabilities to these systems, and provides recommended security countermeasures to mitigate the associated risks. Additionally, it presents an ICS-tailored security control overlay, based on NIST SP 800-53 Rev. 4 [22], to provide a customization of controls as they apply to the unique characteristics of the ICS domain.

NERC CIP

NERC CIP, or more properly the "North American Electric Reliability Corporation (NERC) Critical Infrastructure Protection (CIP)", is utility specific in origin, as the name implies; however, it is widely referenced and adopted outside of the utility space. Also as its name implies, it targets "Critical Infrastructure Protection", which is a widely used term and the subject of many standards, guidelines, and best practices (see https://en.wikipedia.org/wiki/Critical_infrastructure_protection for more details). NERC CIP is used in this CVD and the associated CVDs primarily because it was developed around the particulars of the IACS infrastructure; it is therefore highly relevant to the subject at hand and uses much of the same terminology.

IEEE 1588 Precision Time Protocol

Defined in IEEE 1588 as a Precision Clock Synchronization Protocol for Networked Measurement and Control Systems, the Precision Time Protocol (PTP) was developed to synchronize the clocks in packet-based networks that include distributed device clocks of varying precision and stability. PTP is designed specifically for industrial, networked measurement and control systems, and is well suited to distributed systems because it requires minimal bandwidth and little processing overhead. PTP facilitates services that require extremely precise time accuracy and stability, such as peak-hour billing, virtual power generators, and outage monitoring and management.
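As a rough illustration of how PTP derives a clock correction, the sketch below applies the delay request-response arithmetic from IEEE 1588 to the four timestamps of one message exchange. It assumes a symmetric network path (equal delay in each direction); the class name, function names, and sample numbers are illustrative and are not taken from any particular PTP implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PtpExchange:
    """Timestamps (in nanoseconds) from one Sync / Delay_Req exchange."""
    t1: int  # master clock: Sync message sent
    t2: int  # slave clock: Sync message received
    t3: int  # slave clock: Delay_Req message sent
    t4: int  # master clock: Delay_Req message received


def mean_path_delay(x: PtpExchange) -> float:
    """One-way delay, assuming the path is symmetric."""
    return ((x.t2 - x.t1) + (x.t4 - x.t3)) / 2


def offset_from_master(x: PtpExchange) -> float:
    """How far the slave clock is ahead (+) or behind (-) the master."""
    return ((x.t2 - x.t1) - (x.t4 - x.t3)) / 2


# Hypothetical numbers: the slave runs 5000 ns ahead and the
# one-way delay is 2000 ns.
exchange = PtpExchange(t1=100_000, t2=107_000, t3=120_000, t4=117_000)
print(mean_path_delay(exchange))     # 2000.0
print(offset_from_master(exchange))  # 5000.0
```

The slave would subtract the computed offset from its clock. Any asymmetry between the two directions shows up directly as an error in that offset, which is one reason the network path itself matters for time distribution.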

PTP was originally developed in 2002. It was enhanced in 2008 (IEEE 1588-2008), and that version is referred to as PTPv2. This version establishes the basic concepts and algorithms for distribution of precise time. These basics have been adopted into "profiles", which are specific definitions for distribution of time designed for particular use cases. The PTP profiles are as follows:

■ Default Profile: This profile was defined by the IEEE 1588 working group. It has been adopted in many industrial applications, including the ODVA, Inc. Common Industrial Protocol (CIP) as CIP Sync services. This solution supports the default profile in the Sitewide Precise Time Distribution feature. The Rockwell Automation and Cisco Converged Plantwide Ethernet (CPwE) solution also supports the default profile, as described in Deploying Scalable Time Distribution within a Converged Plantwide Ethernet Architecture (https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/CPwE/5-1/STD/DIG/CPwE-5-1-STD-DIG.html).

■ Power Profile: This profile was defined by the International Electrotechnical Commission (IEC) standard 62439. The power profile is used in the IEC 61850 standard for communication protocols for substation automation. This profile is supported in the Cisco Substation Automation Local Area Network and Security CVD (https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/Utilities/SA/2-3-2/CU-2-3-2-DIG.html).

■ Telecom Profile: The International Telecommunication Union's Telecommunications Standards (ITU-T) group has established a set of PTP profiles for the telecoms industries. A variety of Cisco products support these profiles, but they are not commonly used in industrial automation. This profile is not supported in this solution.

■ IEEE 802.1AS profile: The IEEE created this profile, Timing and Synchronization for Time-Sensitive Applications, as part of the Audio Video Bridging (AVB) set of technical standards. It is being enhanced under the IEEE 802.1AS-Rev working group for the Time-Sensitive Networking (TSN) set of technical standards, driven by the industrial ecosystem. Some Cisco products support 802.1AS for AVB and TSN applications. This solution does not support 802.1AS at this time.
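When planning time distribution, it can help to record the support statements above as data and check device requirements against them. The sketch below is one hypothetical way to do that; the dictionary keys and the function name are illustrative, and the Power Profile is marked as unknown because this section only states that it is covered in a separate CVD.

```python
from typing import Optional

# True  = supported by this solution
# False = not supported by this solution
# None  = not stated in this section (covered by a separate CVD)
PROFILE_SUPPORT: dict[str, Optional[bool]] = {
    "default": True,
    "power": None,
    "telecom": False,
    "802.1AS": False,
}


def profiles_needing_review(required: list[str]) -> list[str]:
    """Return the required profiles that are not confirmed as supported."""
    return [p for p in required if PROFILE_SUPPORT.get(p) is not True]


# Hypothetical plant requirement list.
print(profiles_needing_review(["default", "telecom", "power"]))
# ['telecom', 'power']
```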

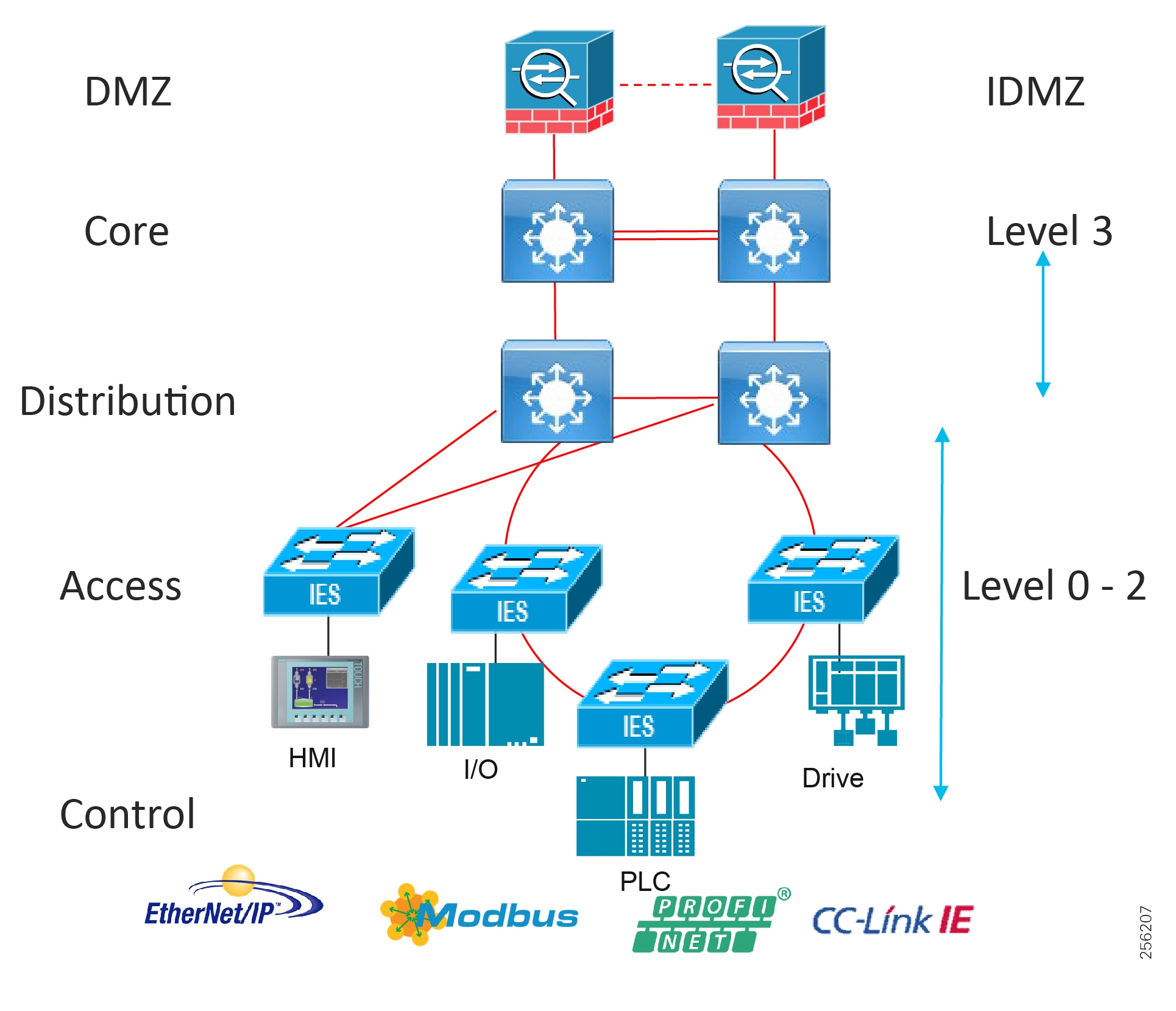

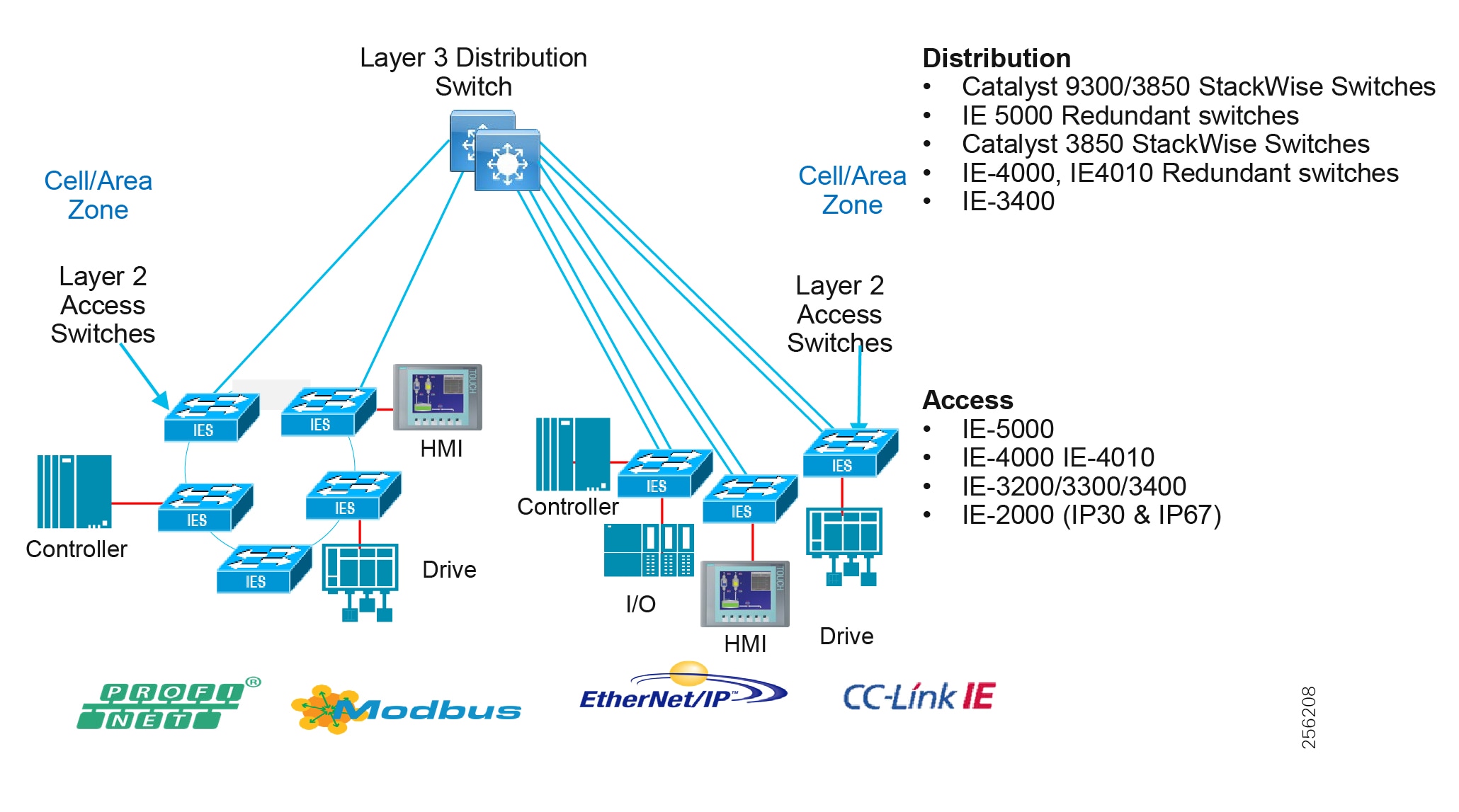

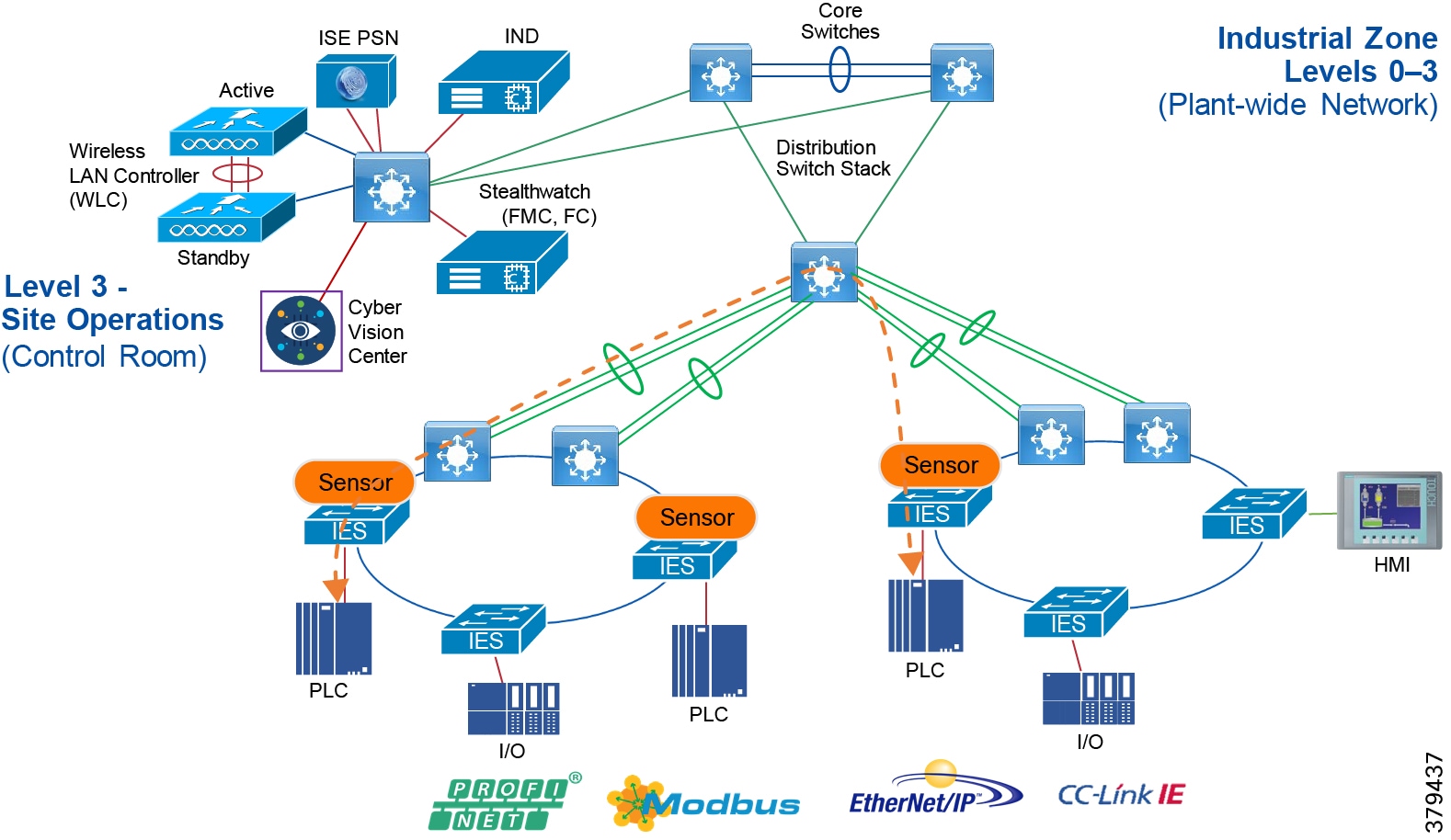

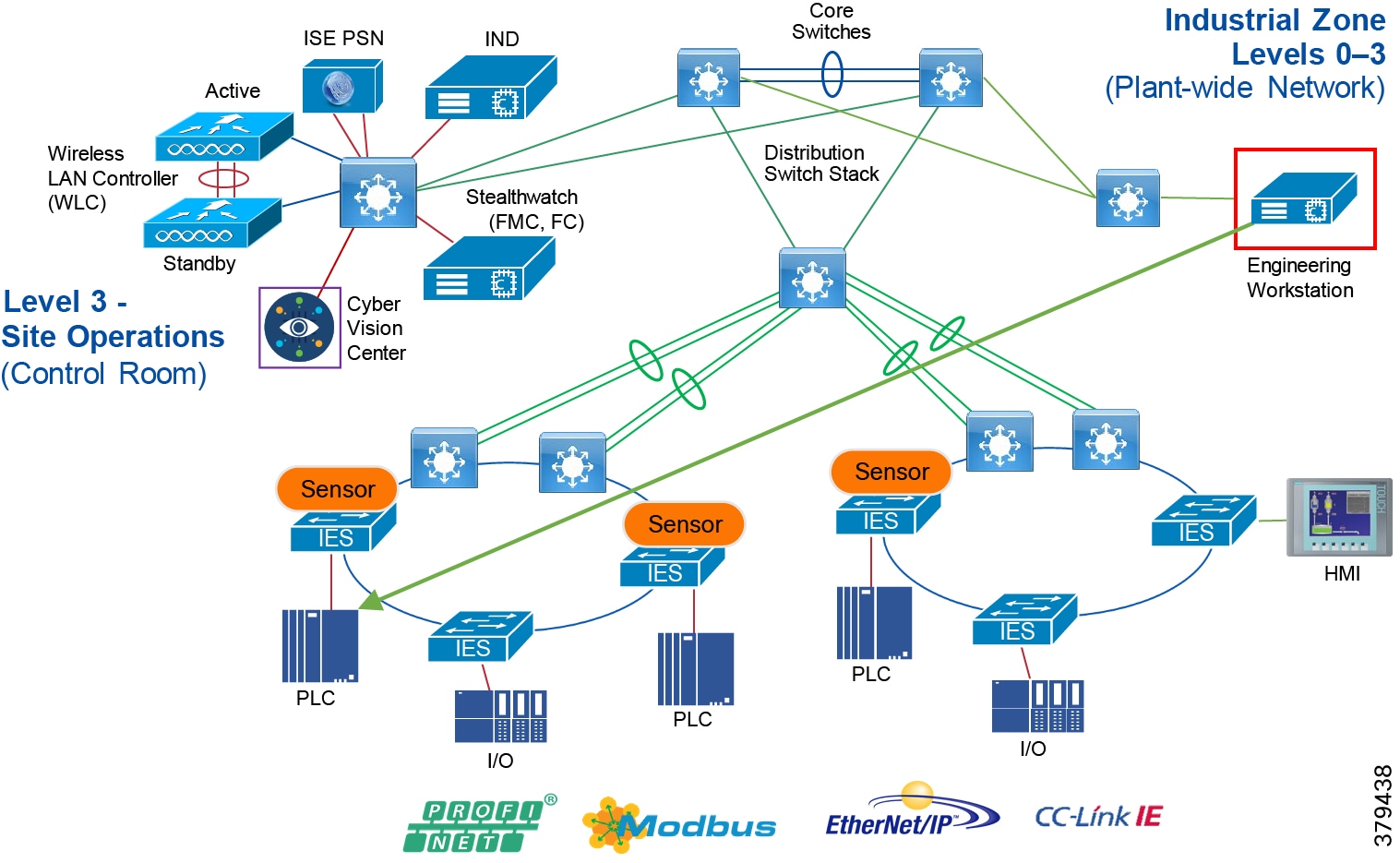

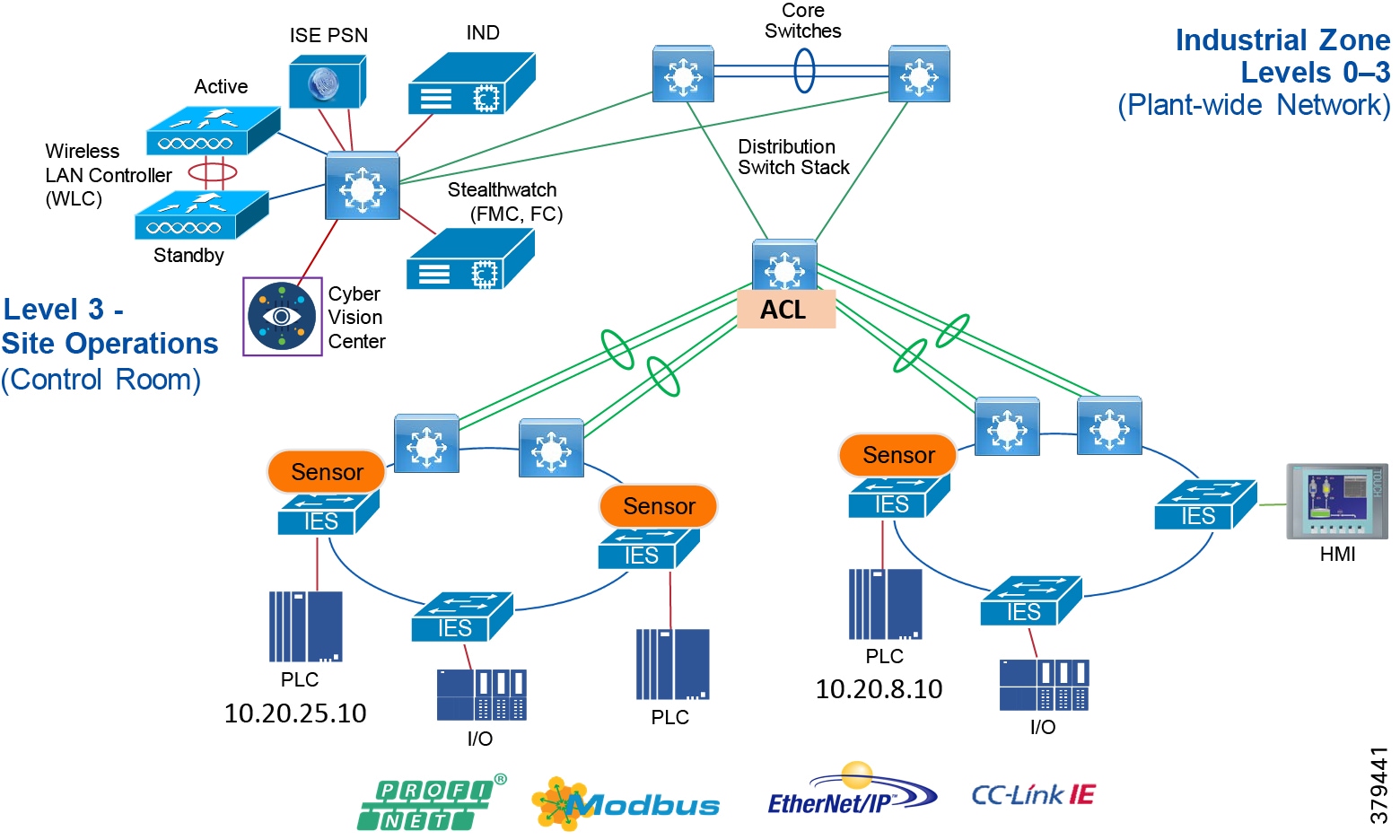

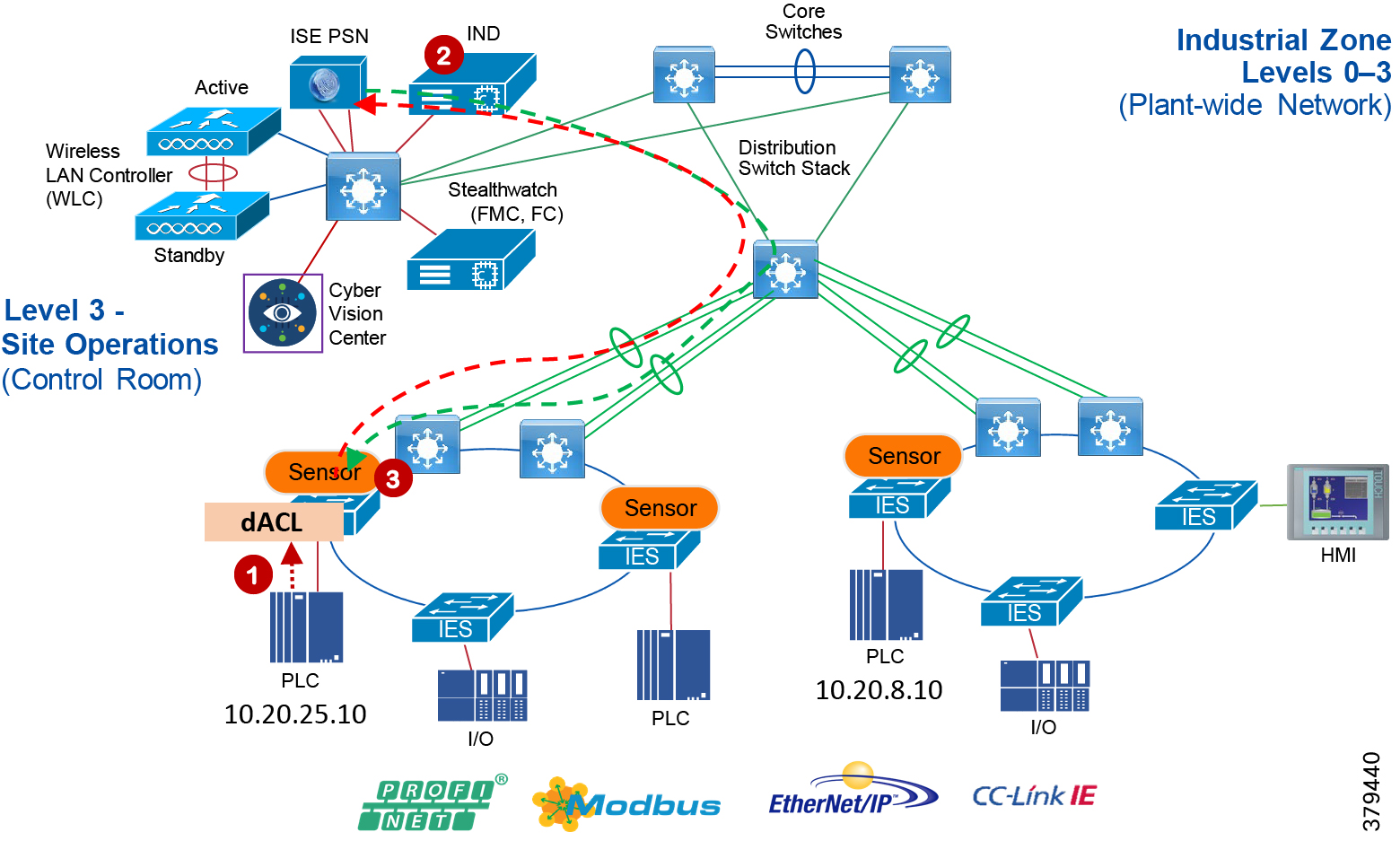

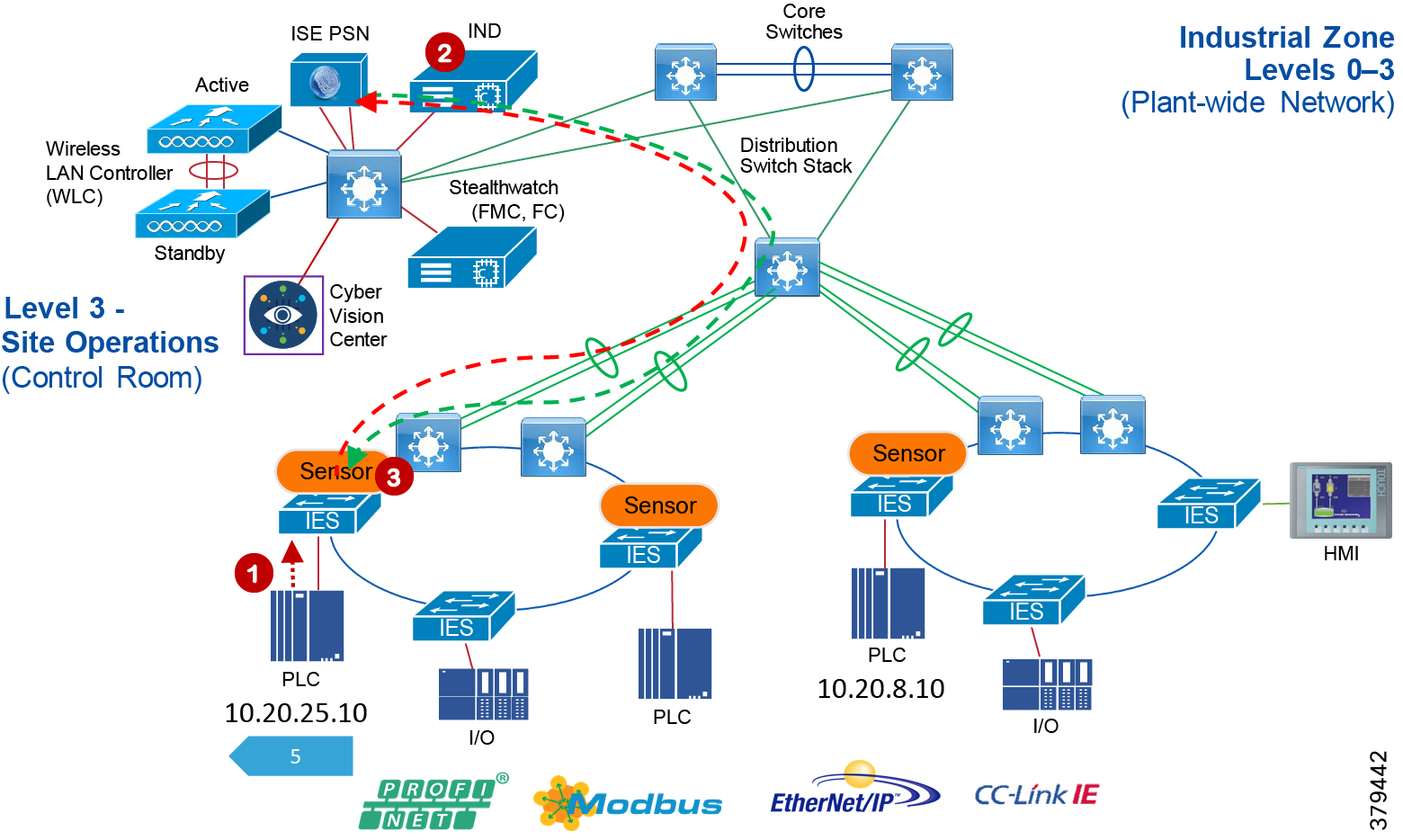

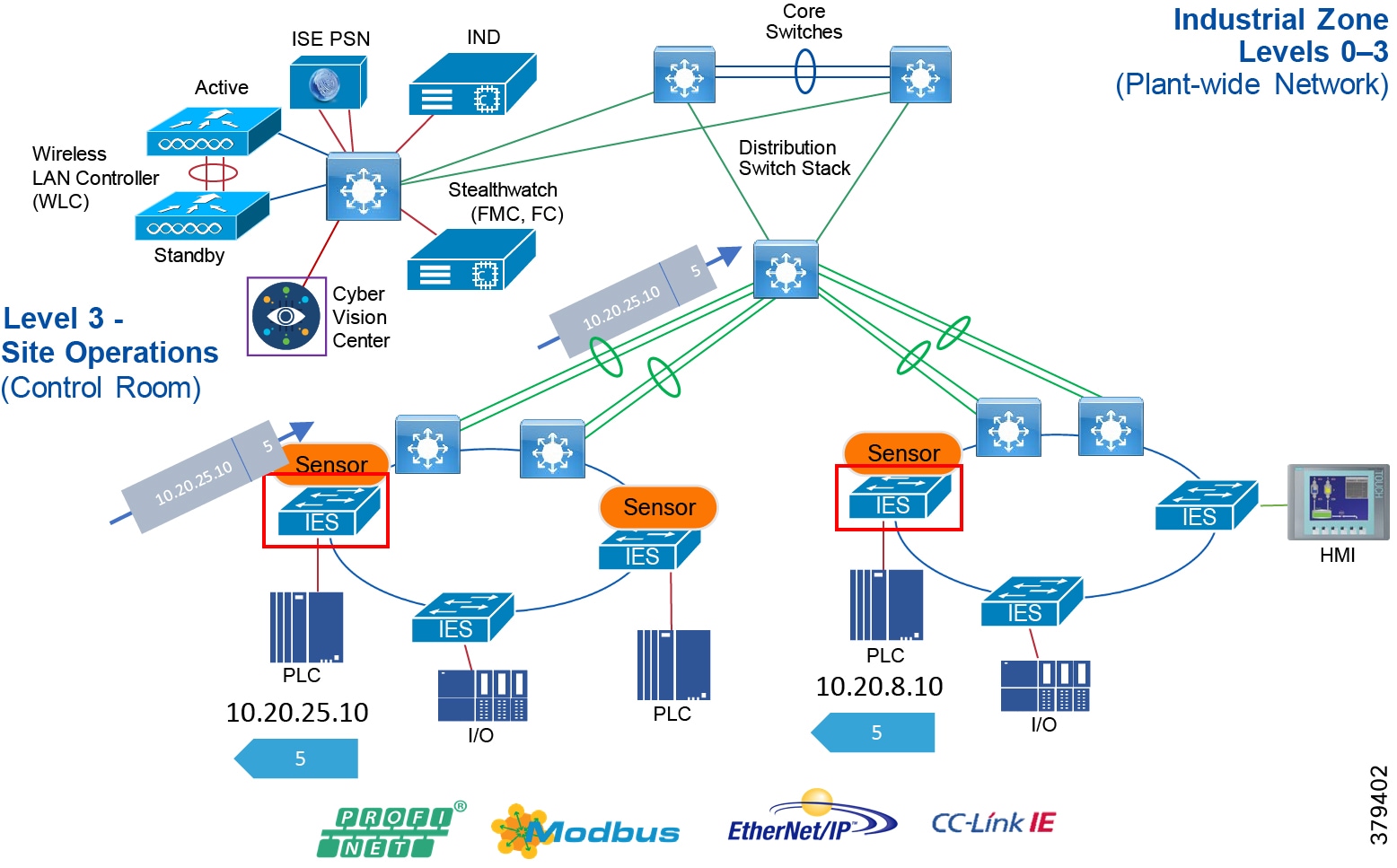

Industrial Automation Network Model and IACS Reference Architecture

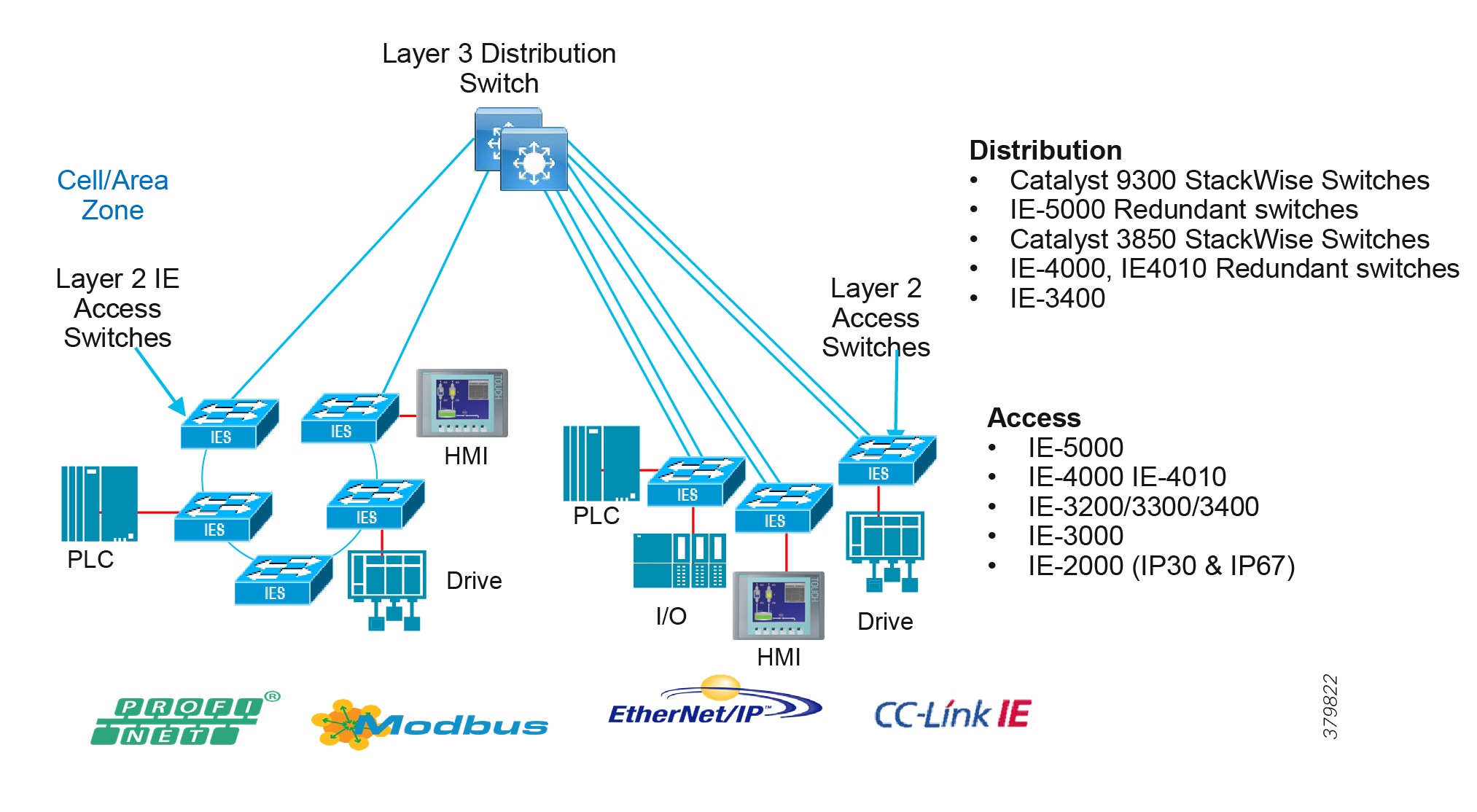

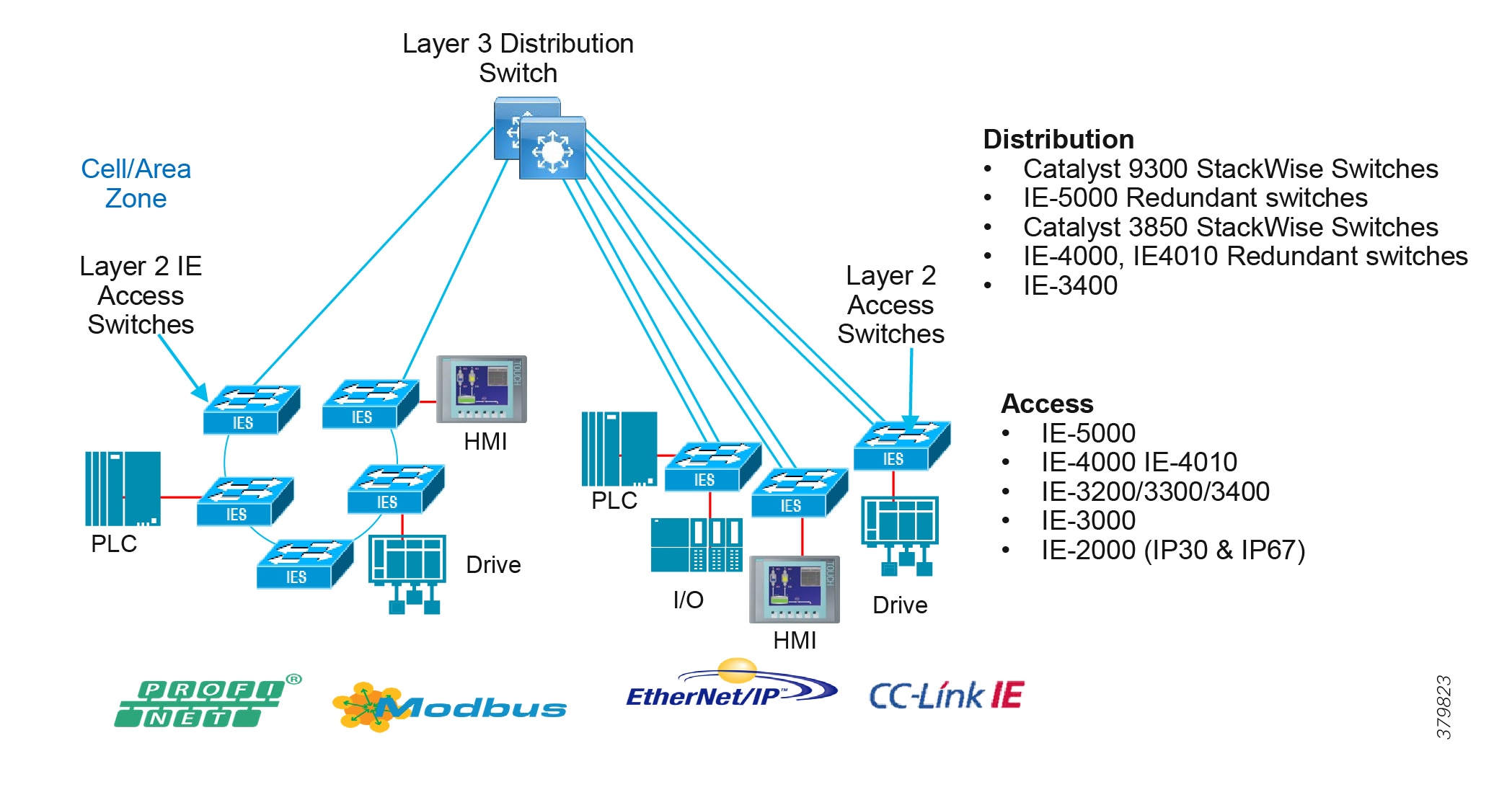

The typical enterprise campus network design is ideal for providing resilient, highly scalable, and secure connectivity for all network assets. The campus model is a proven hierarchical design that consists of three main layers: core, distribution, and access. The DMZ layer is added to provide a security interface outside of the operational plant domain. The following section maps the enterprise campus model to the IACS reference model.

Aligning the Cisco Enterprise Networking Model for the Industrial Plant

DMZ and Industrial DMZ—Level 3.5

The DMZ in the campus model typically provides an interface to, and restricts access into, the enterprise's network assets and services from the internet. The Industrial DMZ is deployed within plant environments to separate the enterprise networks from the operational domain of the plant. Downtime in the IACS network can be costly and have a severe impact on revenue, so the operational zone must not be impacted by outside influences; availability of the IACS assets and processes is paramount. For this reason, network access is not permitted directly between the enterprise and the plant. However, data and services must still be shared between the operational domain and the enterprise, so a secure industrial DMZ architecture is required to provide secure traversal of data between the zones. Typical services deployed in the DMZ include remote access servers and mirrored services. Further details on the design recommendations for the industrial DMZ can be found later in this guide.

Figure 7 Industrial DMZ Functional Model
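The traversal rule described above can be sketched as a simple zone check: traffic may terminate in the industrial DMZ from either side, but never passes directly between the enterprise and the plant. This is a minimal illustration only; the zone names and the function are hypothetical, and a real deployment enforces the rule with firewalls and per-service policy rather than a single boolean.

```python
from enum import Enum


class Zone(Enum):
    ENTERPRISE = "enterprise"
    INDUSTRIAL_DMZ = "industrial_dmz"
    INDUSTRIAL = "industrial"


def is_direct_flow_permitted(src: Zone, dst: Zone) -> bool:
    """Deny any flow that would cross directly between enterprise and plant."""
    return {src, dst} != {Zone.ENTERPRISE, Zone.INDUSTRIAL}


print(is_direct_flow_permitted(Zone.ENTERPRISE, Zone.INDUSTRIAL))      # False
print(is_direct_flow_permitted(Zone.ENTERPRISE, Zone.INDUSTRIAL_DMZ))  # True
print(is_direct_flow_permitted(Zone.INDUSTRIAL_DMZ, Zone.INDUSTRIAL))  # True
```

Under this model, a historian in the plant would publish to a mirrored service in the DMZ, and enterprise users would read from that mirror instead of reaching into the plant.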

Core Network

The core is designed to be highly reliable and stable. It aggregates all the elements in the operational plant and typically consists of Layer 3 devices with high-speed connectivity, redundant links, and redundant hardware. Within the context of the plant architecture, it aggregates all of the Cell/Area Zones and provides access to the industrial DMZ and centralized services.

For industrial automation, services required across the plant include production control, historians, domain controllers, and network security platforms such as Cisco Identity Services Engine (ISE) and Cisco Stealthwatch. The core aligns with the plant operations and control zone, which resides at Level 3 of the Purdue model.

Summary

■ Provides reliable connectivity between distribution layers for large sites, focusing on scale and availability

■ Enables site-wide redundancy

■ Allows non-disruptive in-service upgrades

Figure 8 Enterprise Model with Industrial Zone

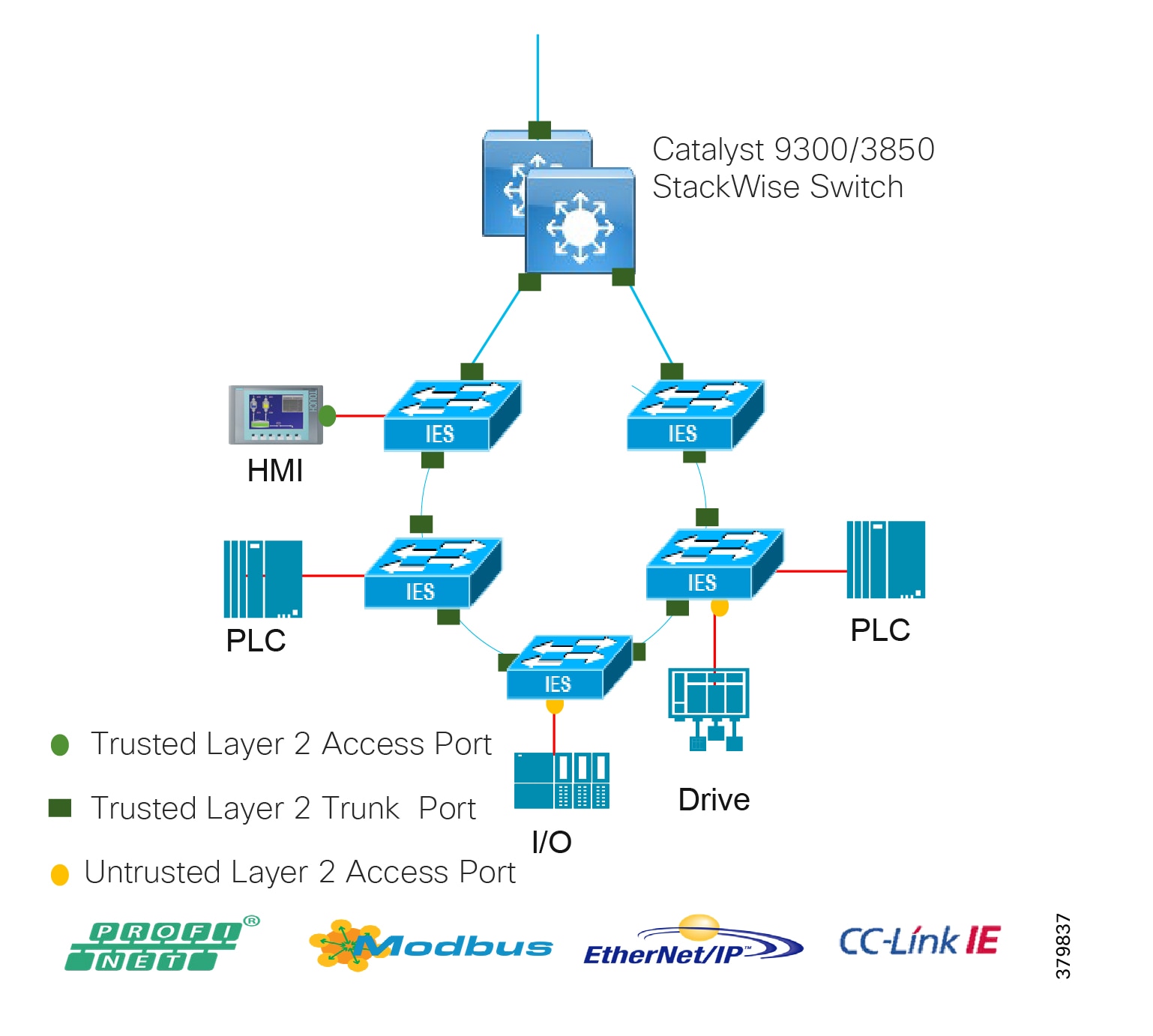

Distribution Network

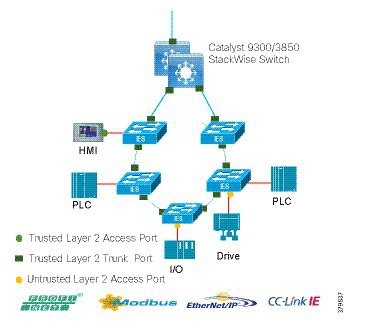

The distribution layer in its simplest form provides policy-based connectivity and demarcation between the access layer and the core layer. In the Purdue model, it is part of the Cell/Area Zone, where it provides aggregation and policy control and acts as a demarcation point between the Cell/Area Zone and the rest of the IACS network.

Summary

■ Provides Layer 3 connectivity to the core layer and Layer 2 connectivity into the access layer

■ Aggregates access layers and provides connectivity services

■ Provides connectivity and policy services within the access-distribution network

■ Provides distribution, policy control, and isolation/demarcation points between the Cell/Area Zones and the rest of the network

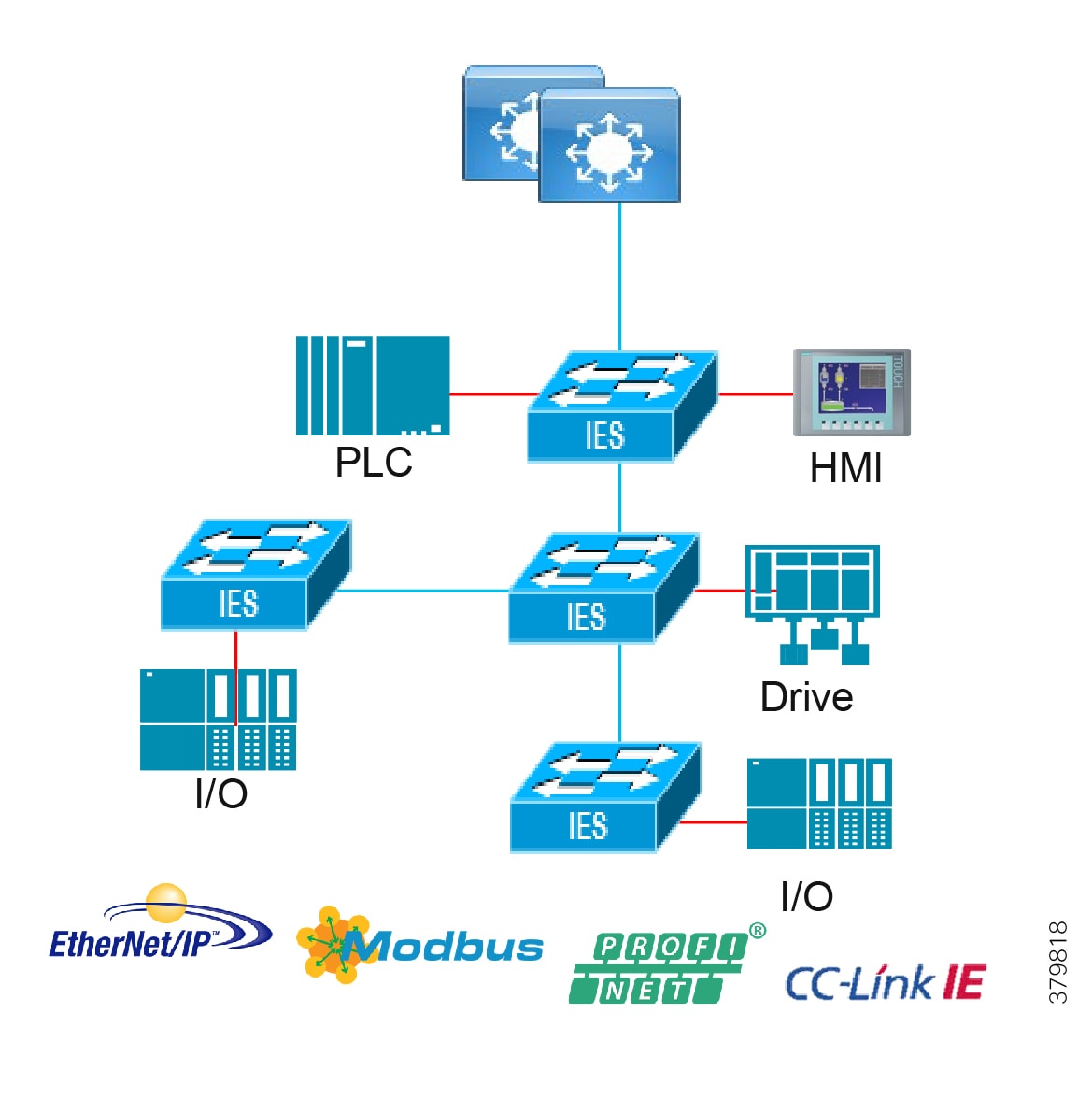

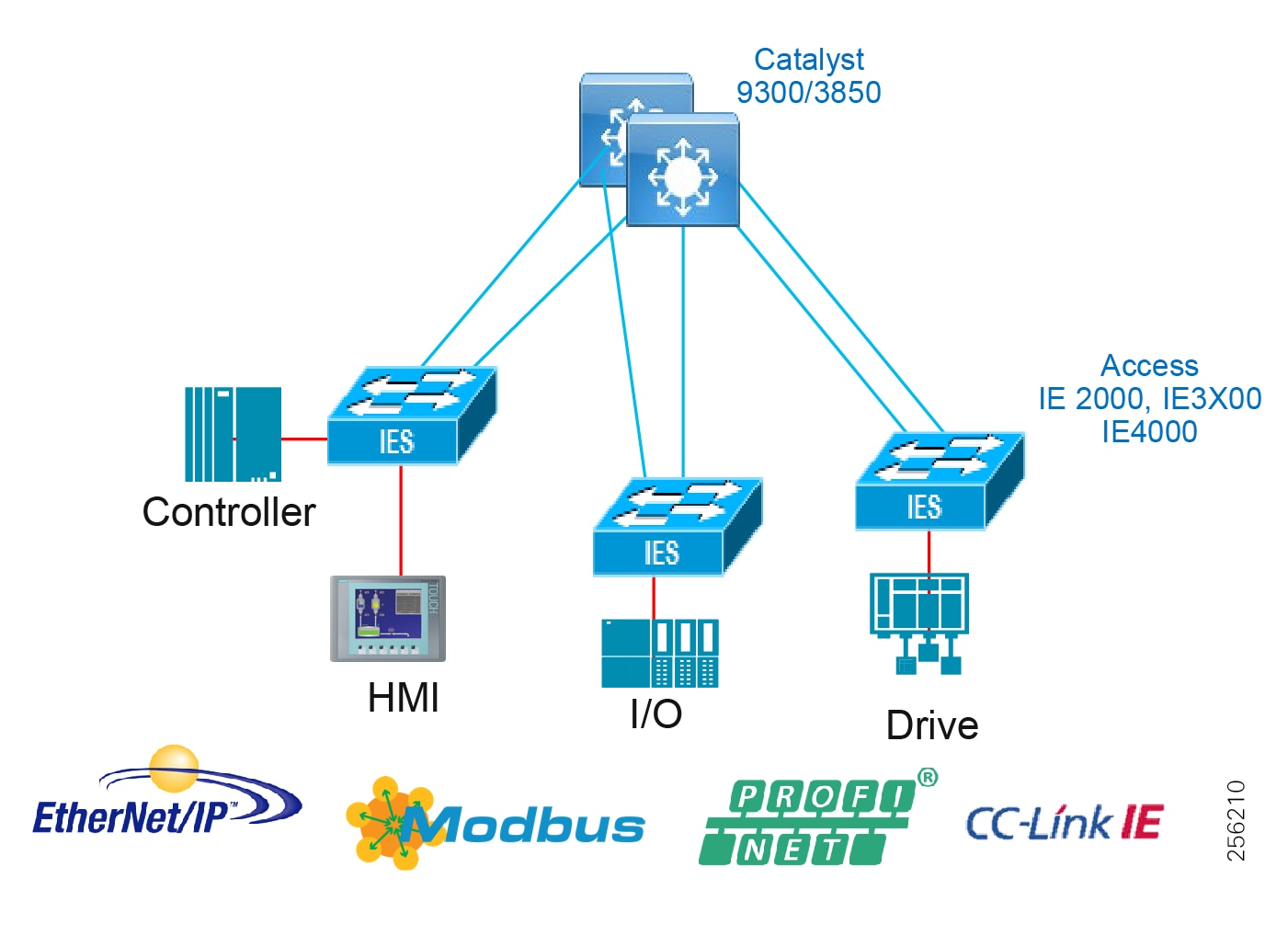

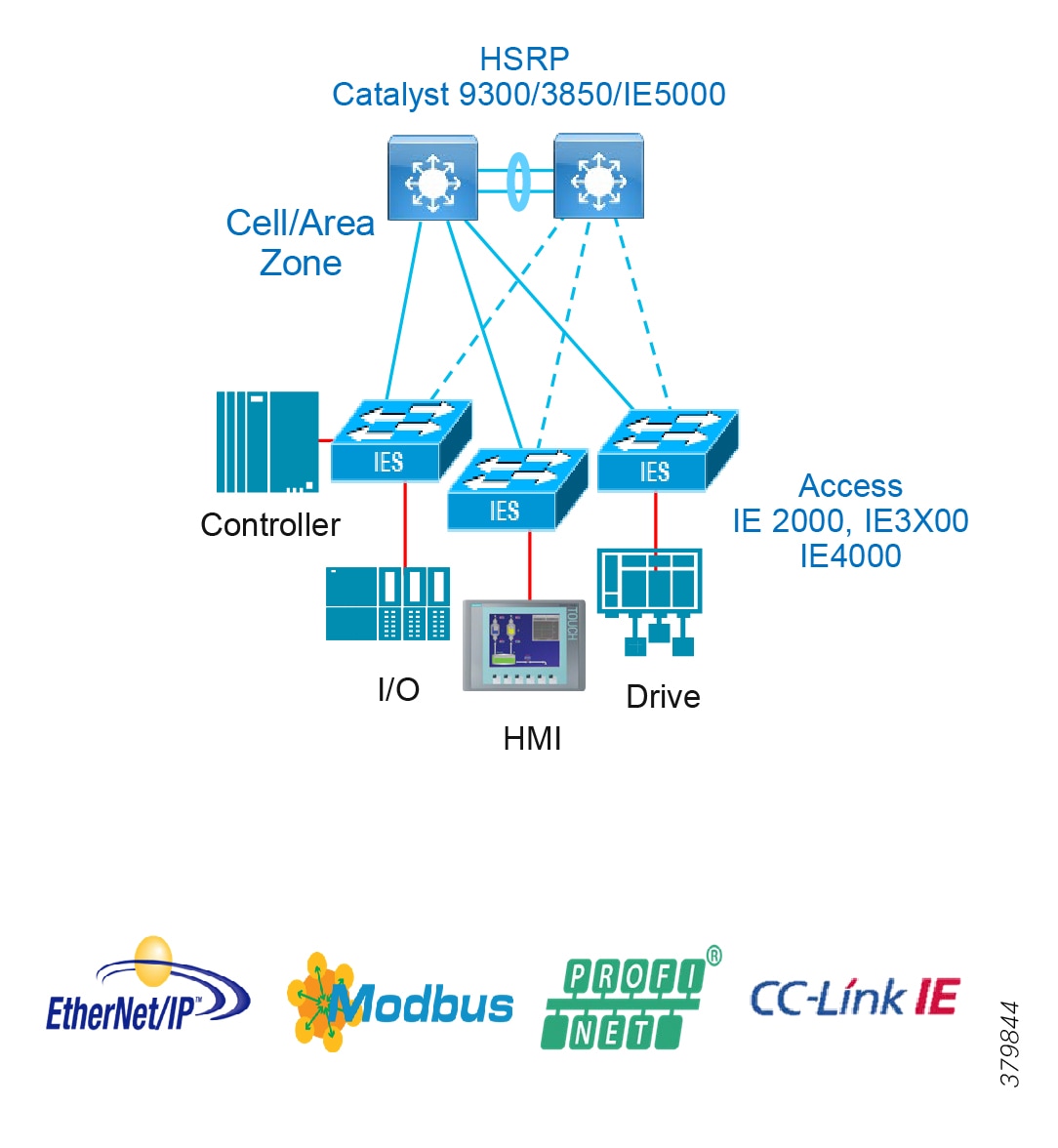

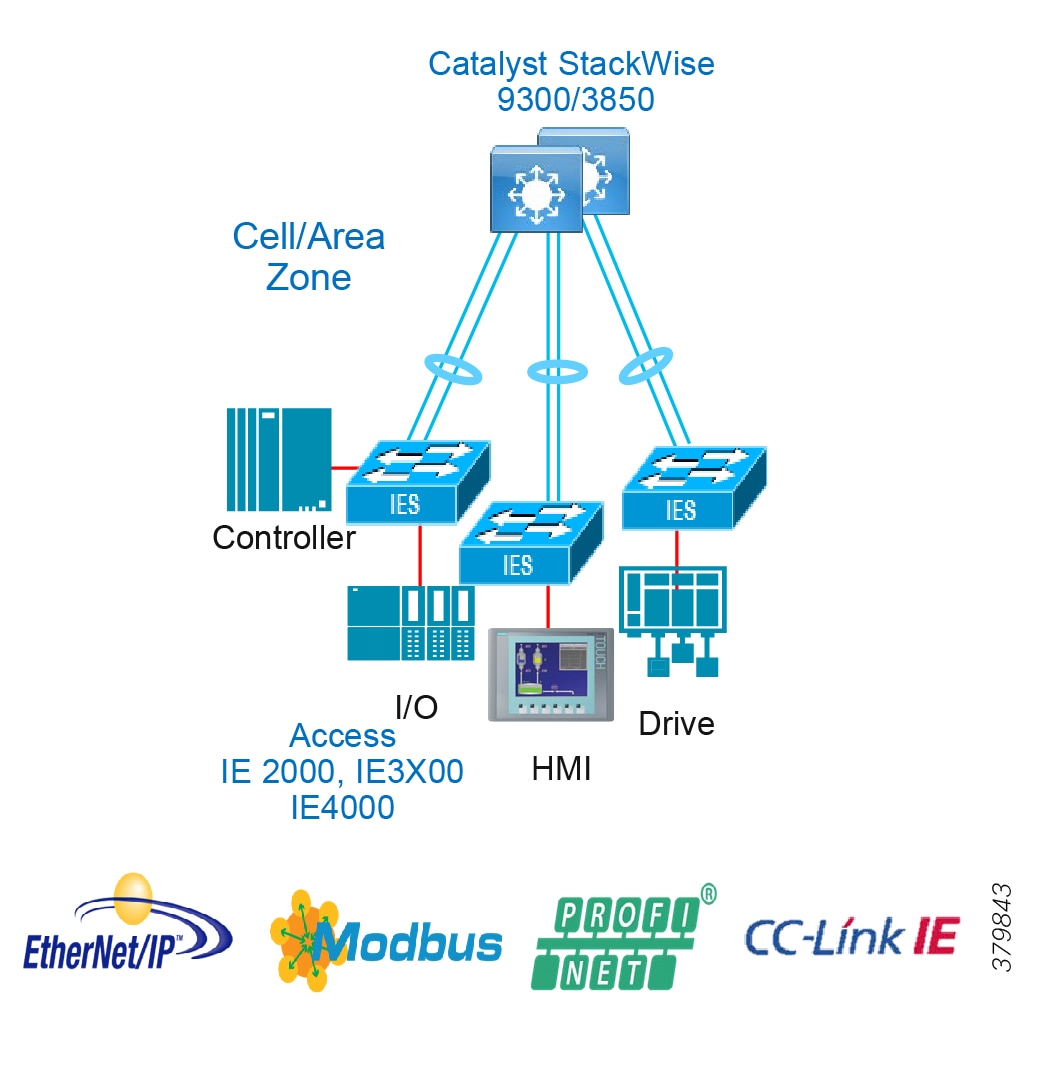

Collapsed Core Distribution Network

In small-to-medium plants, it is possible to collapse the core into the distribution switches as shown in Figure 9. However, for large plants with a large number of Cell/Area Zones, collapsing the hierarchy in this way is not recommended and a traditional three-tier design is deployed.

Figure 9 Collapsed Core/Distribution

Access Network

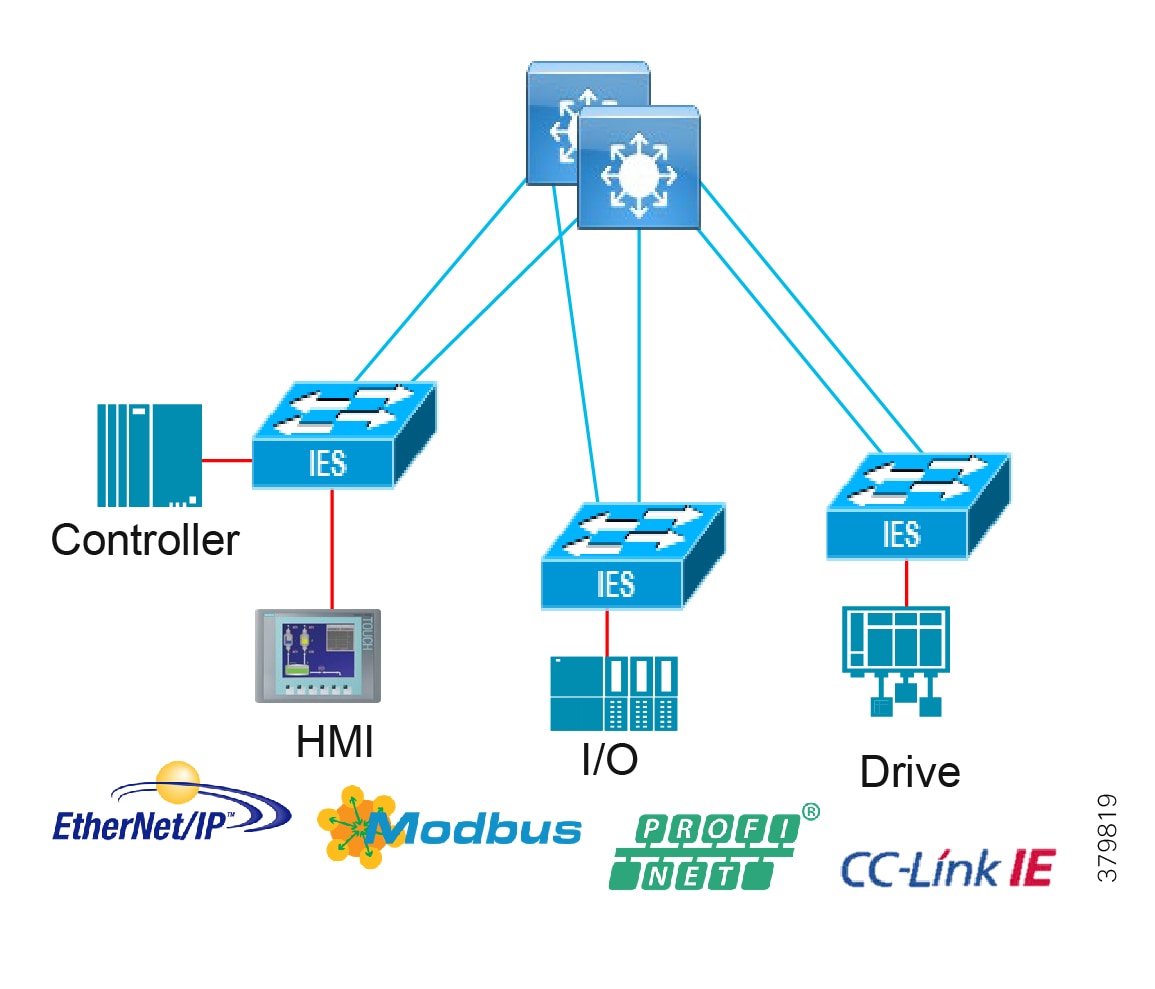

The access layer provides the demarcation between the network infrastructure and the devices that leverage that infrastructure. As such, it provides a security, QoS, and policy trust boundary. When looking at the overall IACS network design, the access switch provides the majority of these access layer services and is a key element in enabling multiple IACS network services.

The Cell/Area Zone can be considered an access layer network that is specialized and optimized for IACS networks.

Summary

■ Provides endpoints (PCs, controllers, I/O devices, drives, cameras, and so on) and users with access to the network

■ Enforces security, segmentation, QoS, and policy trust

■ Labels packets to enforce segmentation

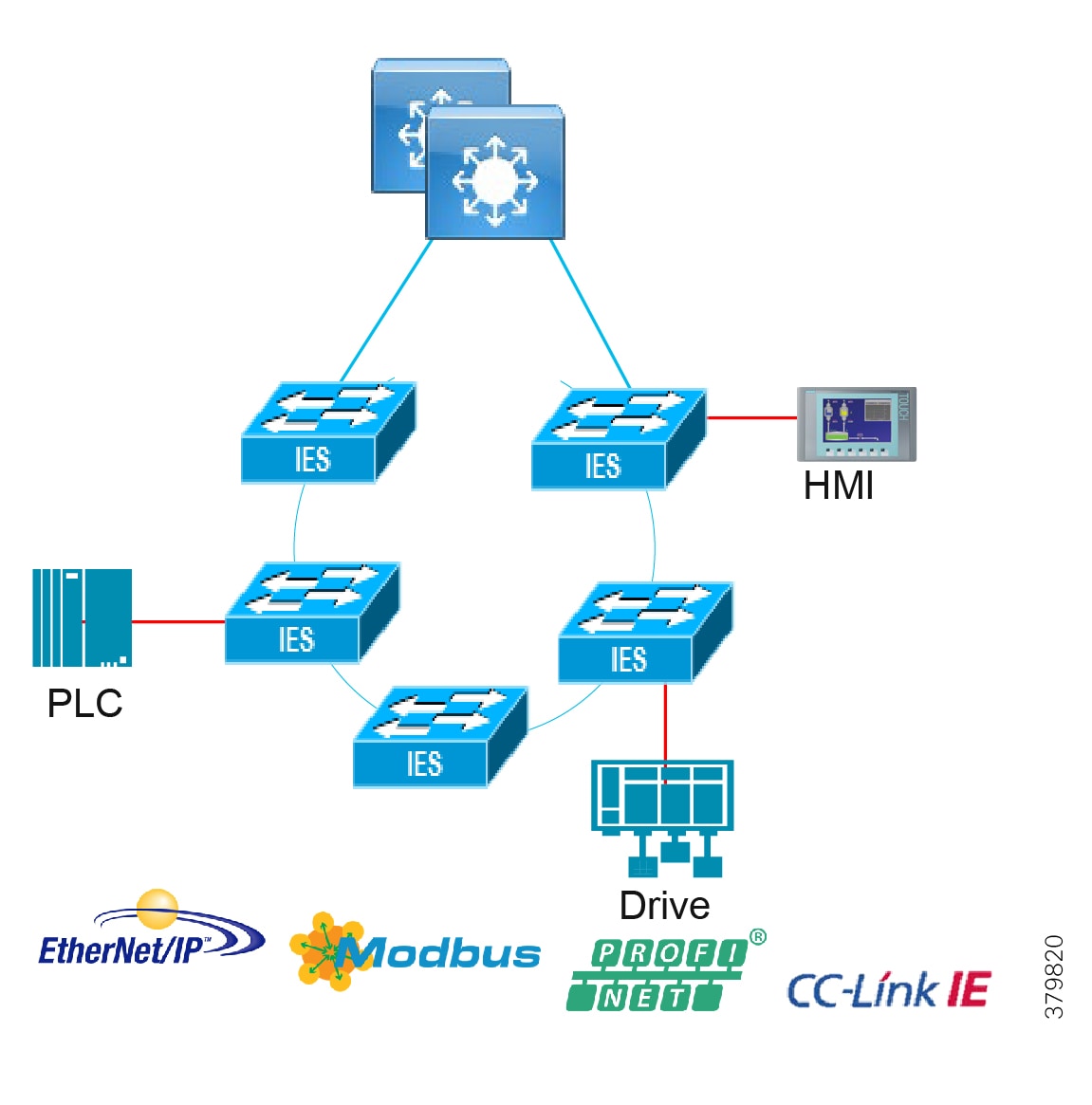

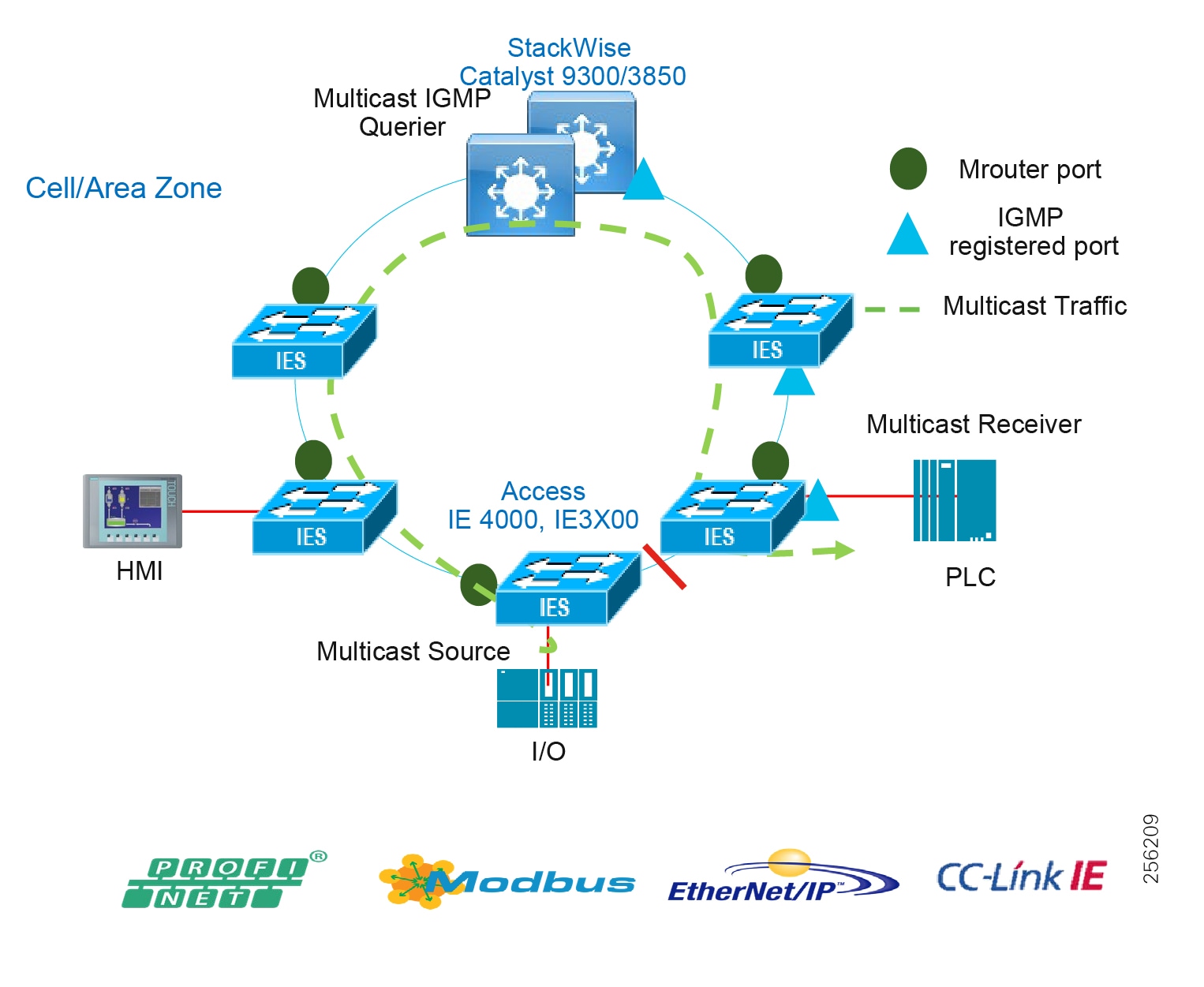

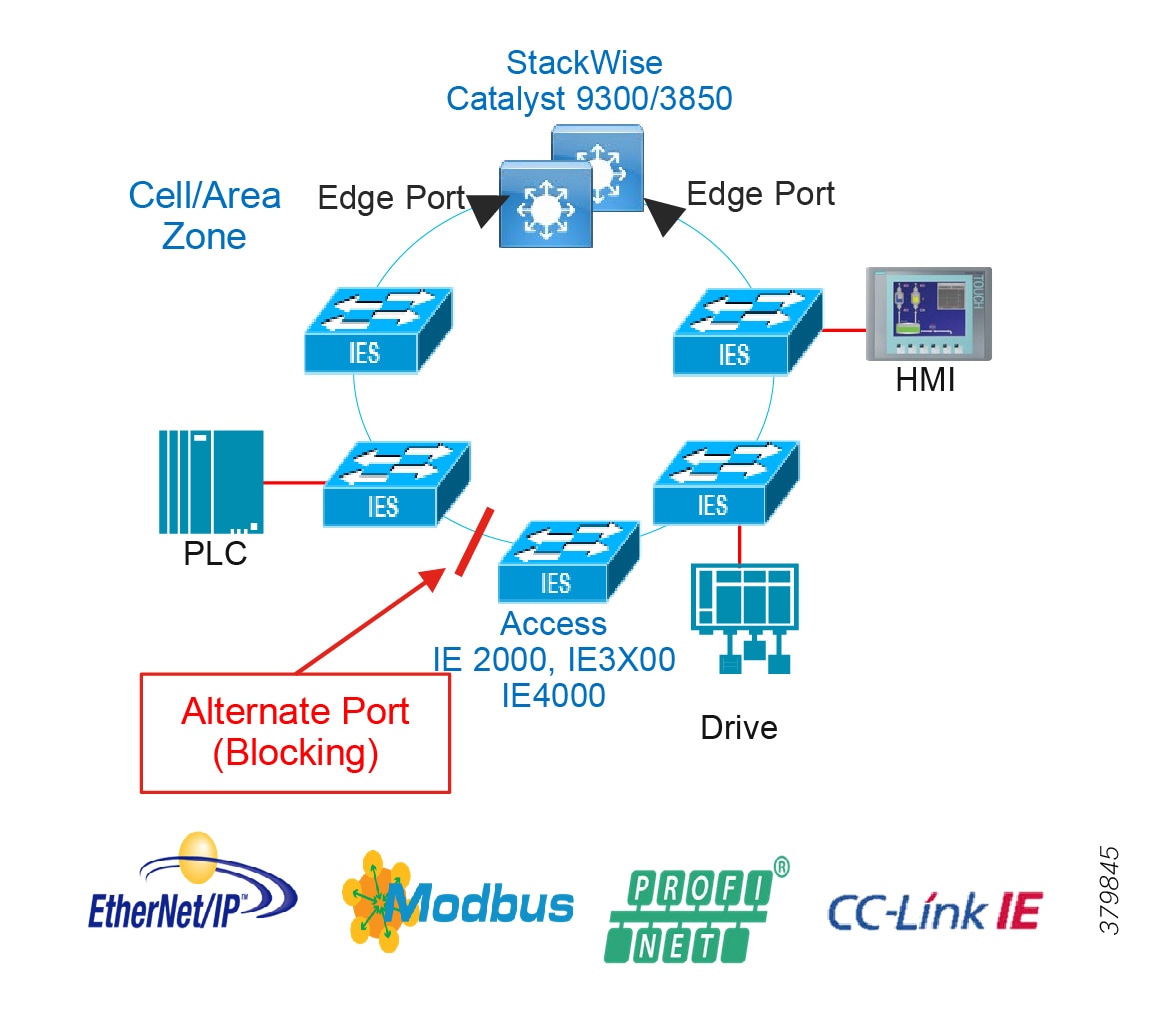

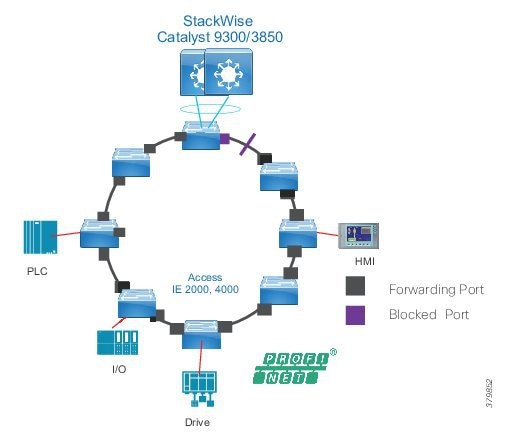

■ Comprises rapidly converging ring topologies or parallel access network topologies

■ Contains potentially multicast-rich local traffic flows

■ Provides Network Address Translation (NAT) options
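The alignment between the campus layers and the IACS reference model described in the preceding sections can be summarized as a small lookup. This is only a sketch of the mapping as stated in this guide; the dictionary keys and the helper name are illustrative.

```python
# Campus layer -> placement in the IACS reference model, as described above.
CAMPUS_TO_IACS = {
    "industrial_dmz": "Level 3.5 (Industrial DMZ)",
    "core": "Level 3 (plant operations and control zone)",
    "distribution": "Cell/Area Zone (aggregation, policy control, demarcation)",
    "access": "Cell/Area Zone (endpoint connectivity and trust boundary)",
}


def layers_in_cell_area_zone() -> list[str]:
    """Return the campus layers that this guide places in the Cell/Area Zone."""
    return [layer for layer, placement in CAMPUS_TO_IACS.items()
            if placement.startswith("Cell/Area Zone")]


print(CAMPUS_TO_IACS["core"])      # Level 3 (plant operations and control zone)
print(layers_in_cell_area_zone())  # ['distribution', 'access']
```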

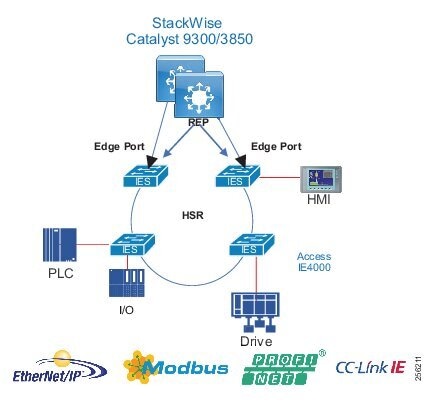

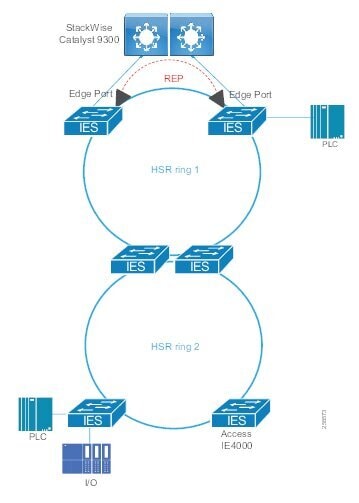

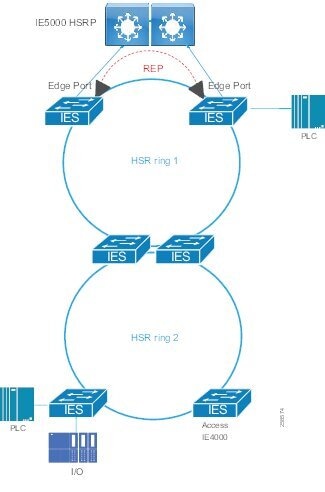

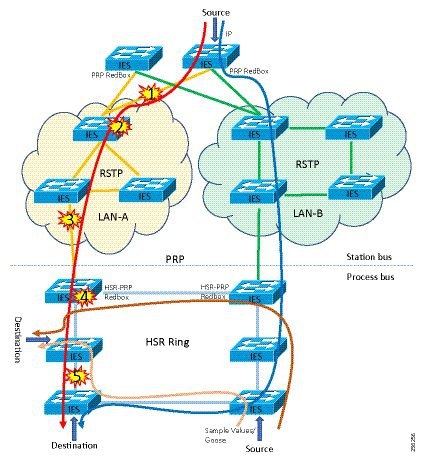

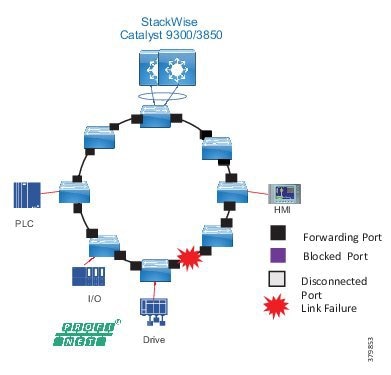

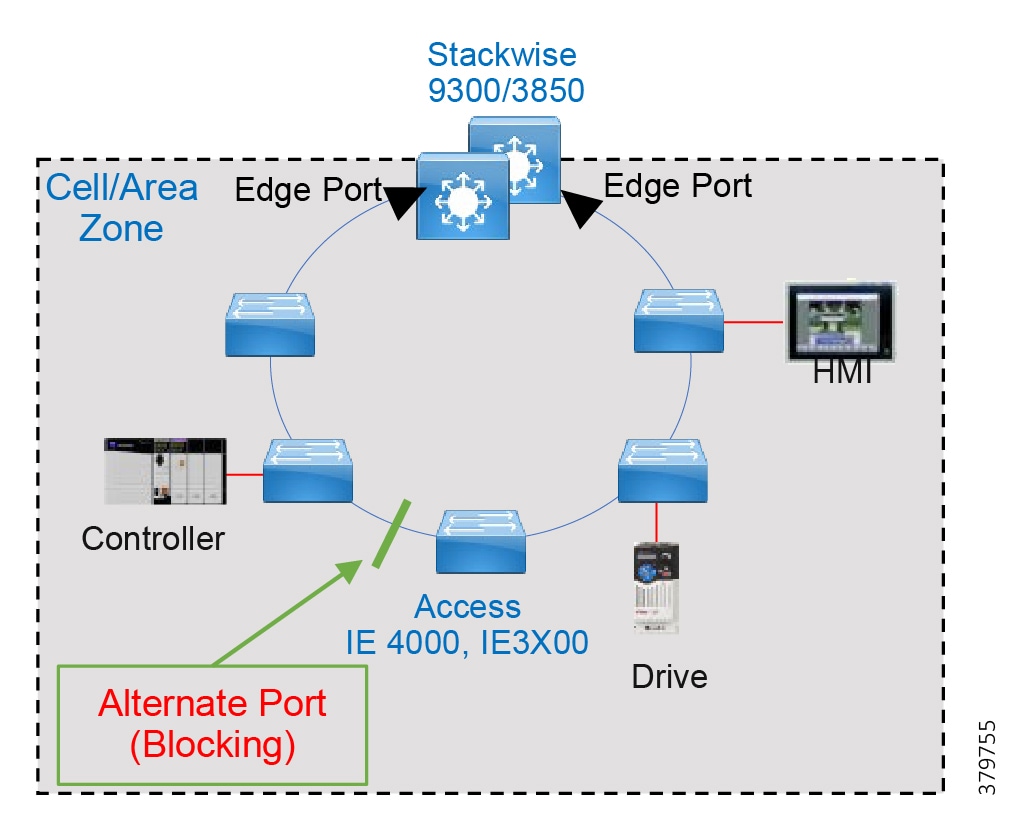

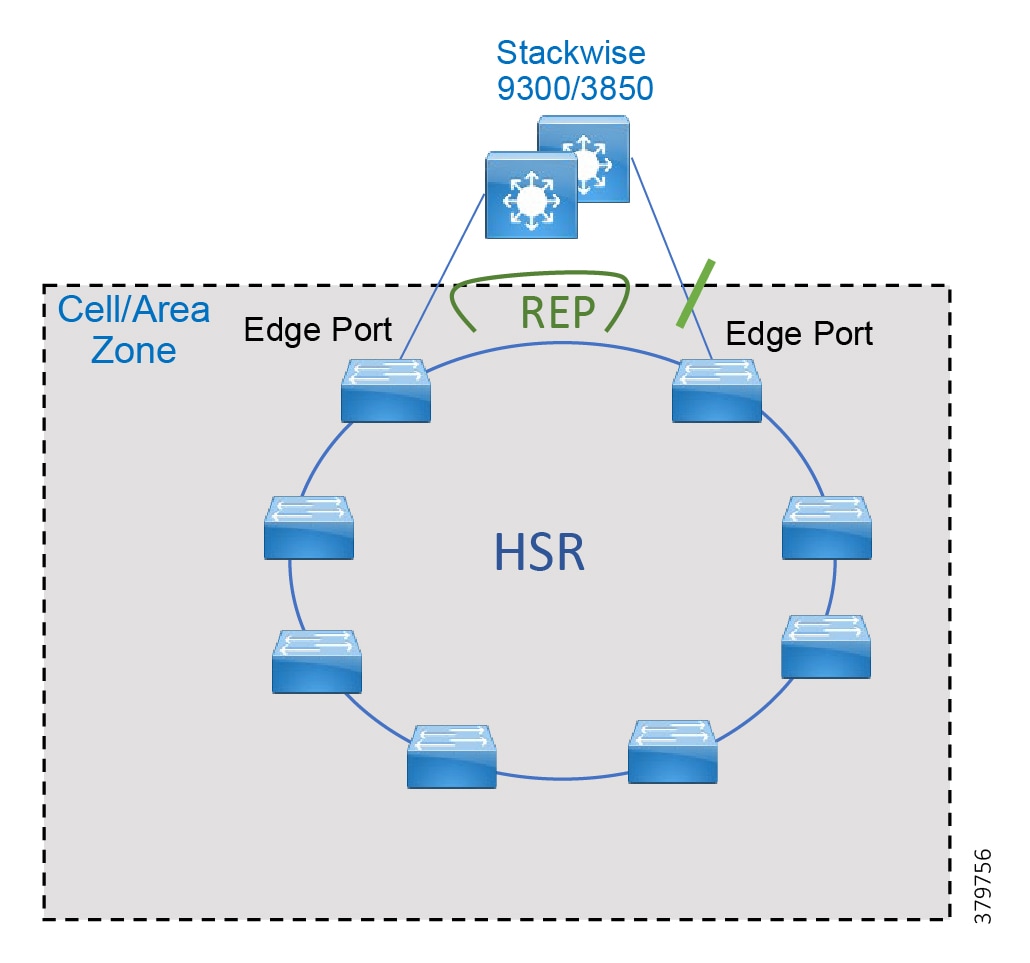

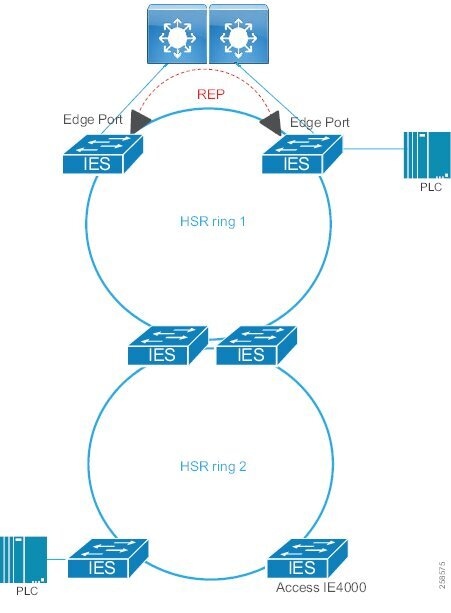

Access Network Topologies for the Industrial Plant Environments

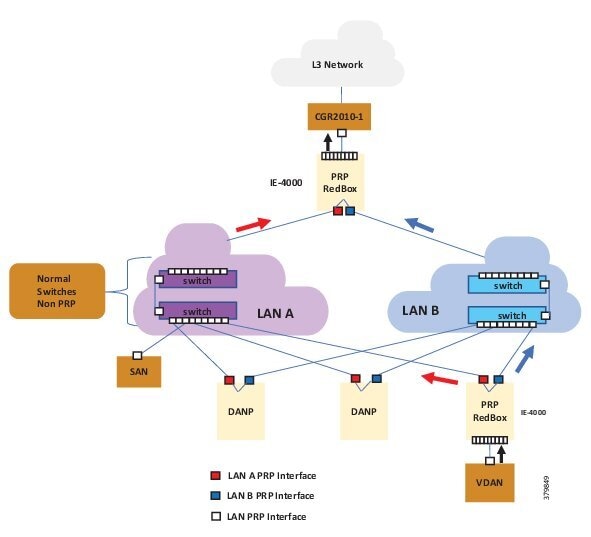

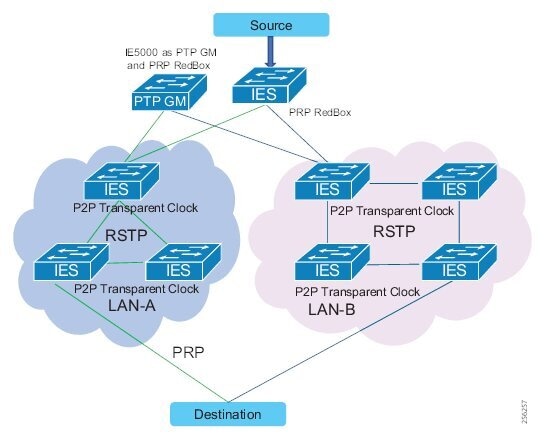

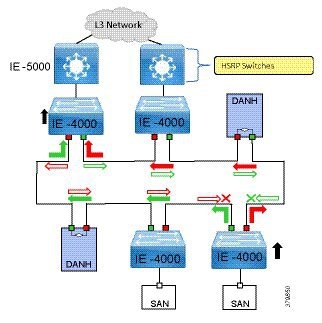

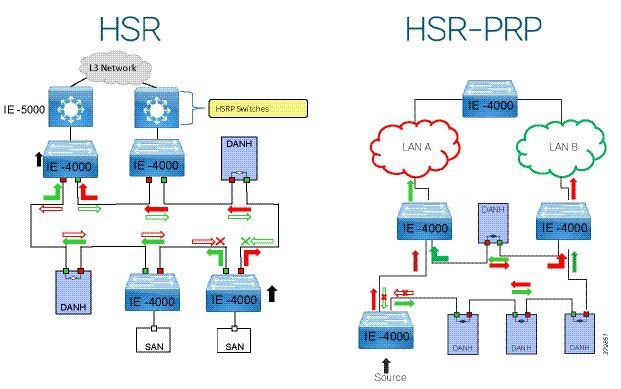

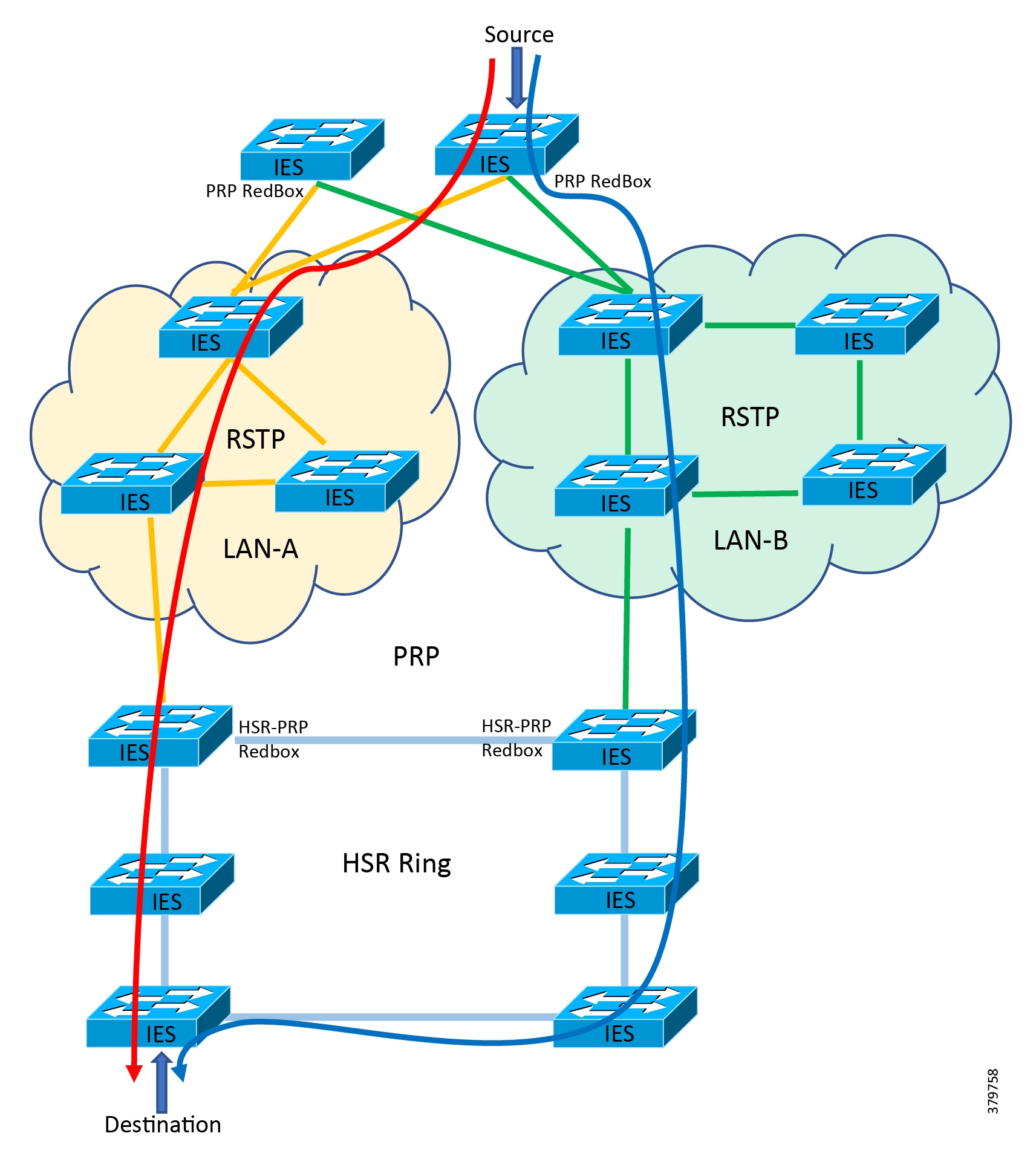

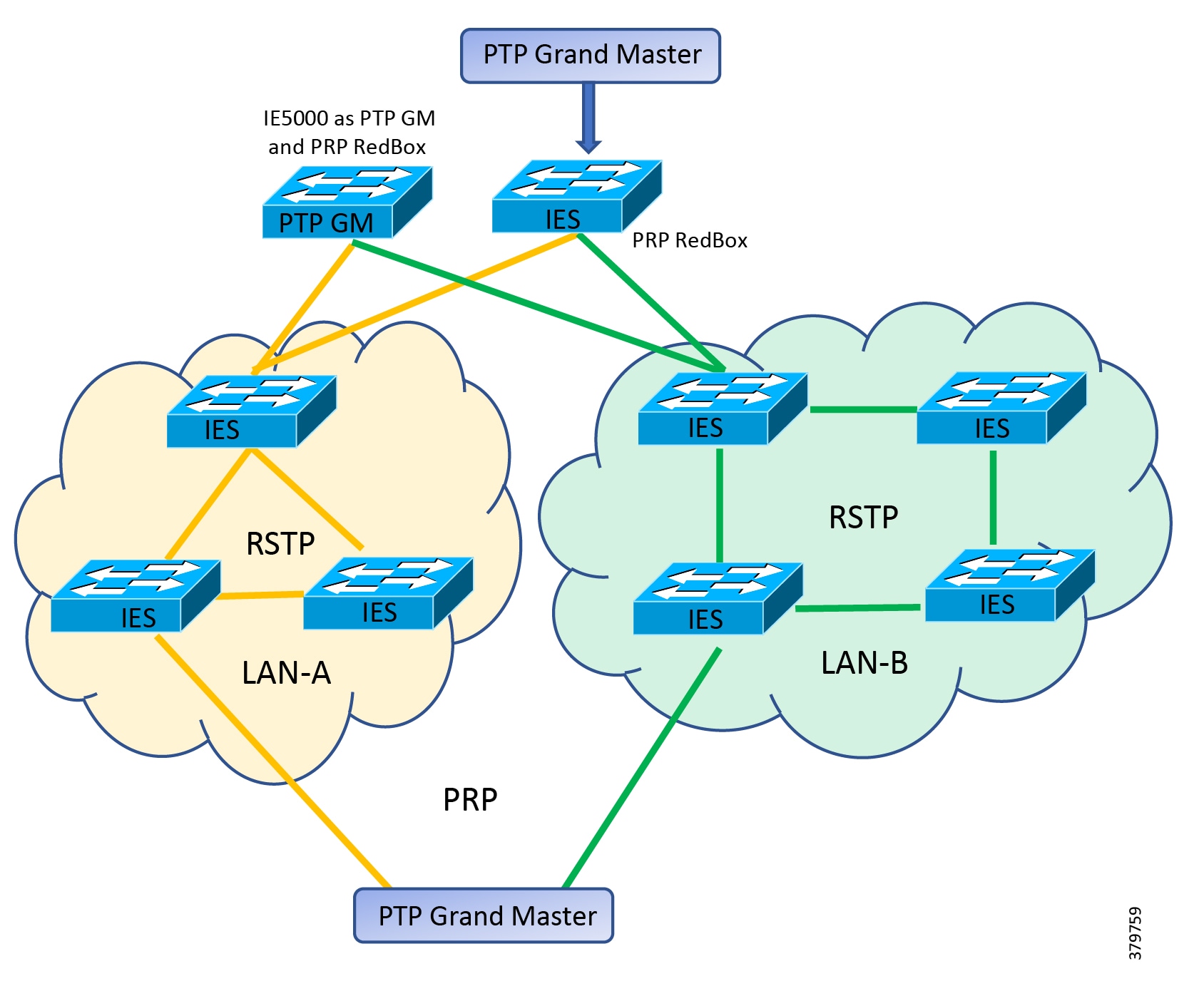

Traditional enterprise IT networks are modeled predominantly on redundant star topologies because these tend to provide better performance and resiliency. Within IACS networks, however, a number of factors define the layout of the access network. The physical layout of the plant, the cost of cabling, and the desired availability are three important factors. For example, ring or linear topologies are more cost effective for long production lines: the cost of cabling these lines in a redundant star topology is prohibitive, and if availability is required a ring topology may be preferred. Newer technologies, such as PRP and HSR, can provide improved resiliency and availability for the IACS plant. HSR provides lossless redundancy over a ring topology, and PRP provides lossless redundancy over two diverse, parallel LANs (LAN-A and LAN-B), which could be two separate rings.
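To make the cabling argument concrete, the sketch below compares total cable length for a long production line under three layouts. It rests on simplifying assumptions that are not from this guide: access switches are evenly spaced along a straight line, the distribution switches sit together at one end, and every cable run follows the line. The function names and sample numbers are hypothetical.

```python
def redundant_star_cable_m(switches: int, spacing_m: float) -> float:
    """Two uplinks from every access switch back to the distribution pair."""
    return 2 * sum(i * spacing_m for i in range(1, switches + 1))


def ring_cable_m(switches: int, spacing_m: float) -> float:
    """Hops down the line plus one return run that closes the ring."""
    # distribution -> sw1 -> ... -> swN covers the line once; the return
    # run from swN back to the distribution switch covers it again.
    return 2 * switches * spacing_m


def linear_cable_m(switches: int, spacing_m: float) -> float:
    """Hops down the line only, with no redundant return path."""
    return switches * spacing_m


# Hypothetical line: 10 access switches, 50 m apart.
print(redundant_star_cable_m(10, 50))  # 5500
print(ring_cable_m(10, 50))            # 1000
print(linear_cable_m(10, 50))          # 500
```

Under these assumptions the star layout grows with the square of the number of switches while the ring grows linearly, which is why the gap widens as the line gets longer.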

The following are key considerations in determining the topology in an IACS environment:

■ Physical Layout: The physical layout of the process facility or the production line influences the networking topology. Installing cabling can be expensive in industrial environments, with costs significantly higher than in the enterprise. Star topologies may be cost prohibitive in long production lines; if real-time communication and availability requirements permit, a ring network topology can reduce cost.

■ Availability: Availability is a key performance metric that contributes to a plant's OEE. The design of the network should maximize uptime. Deploying resilient network topologies allows the network to continue to function after the loss of a link or the failure of a switch. Although some of these events may still lead to downtime of the industrial automation and control systems, a resilient network topology may reduce that chance and should improve the recovery time.

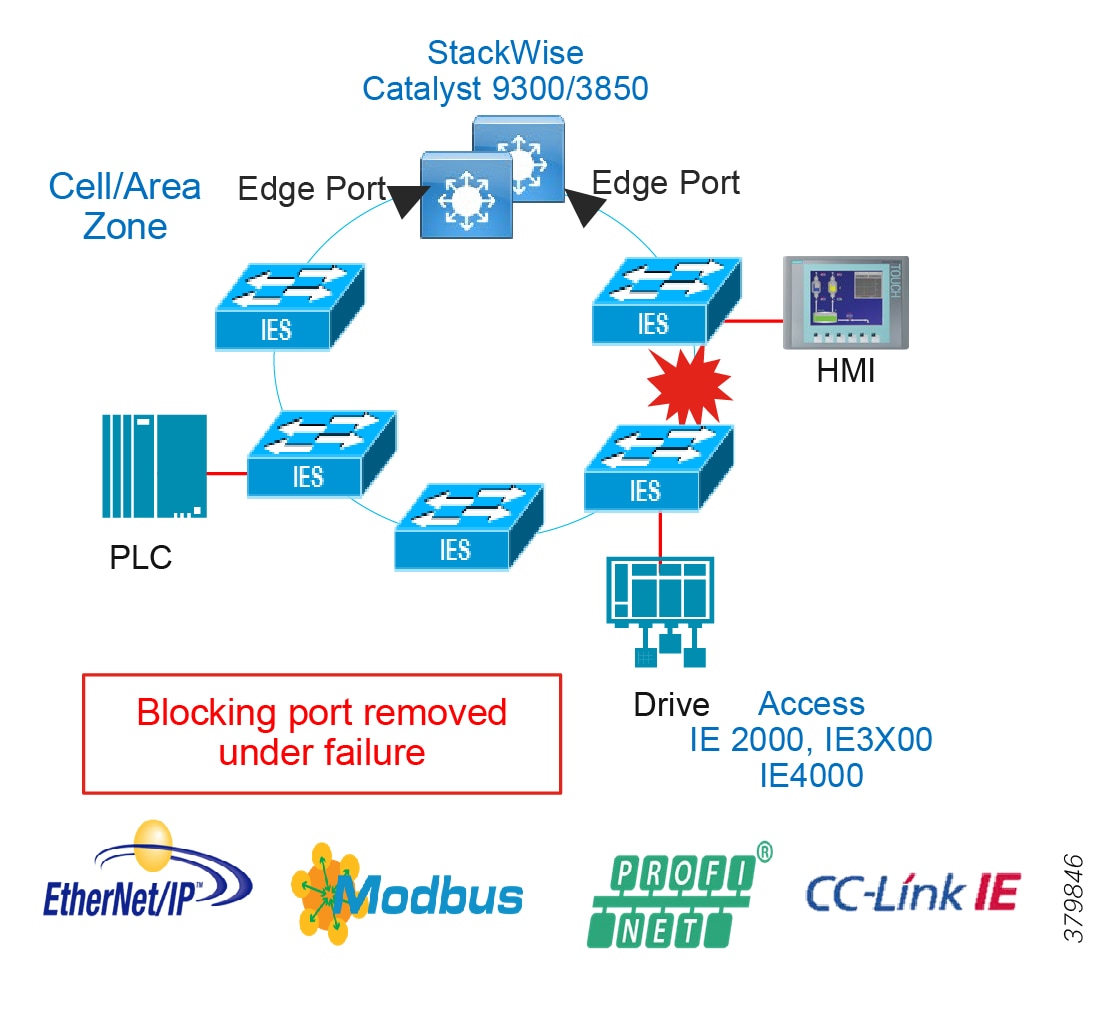

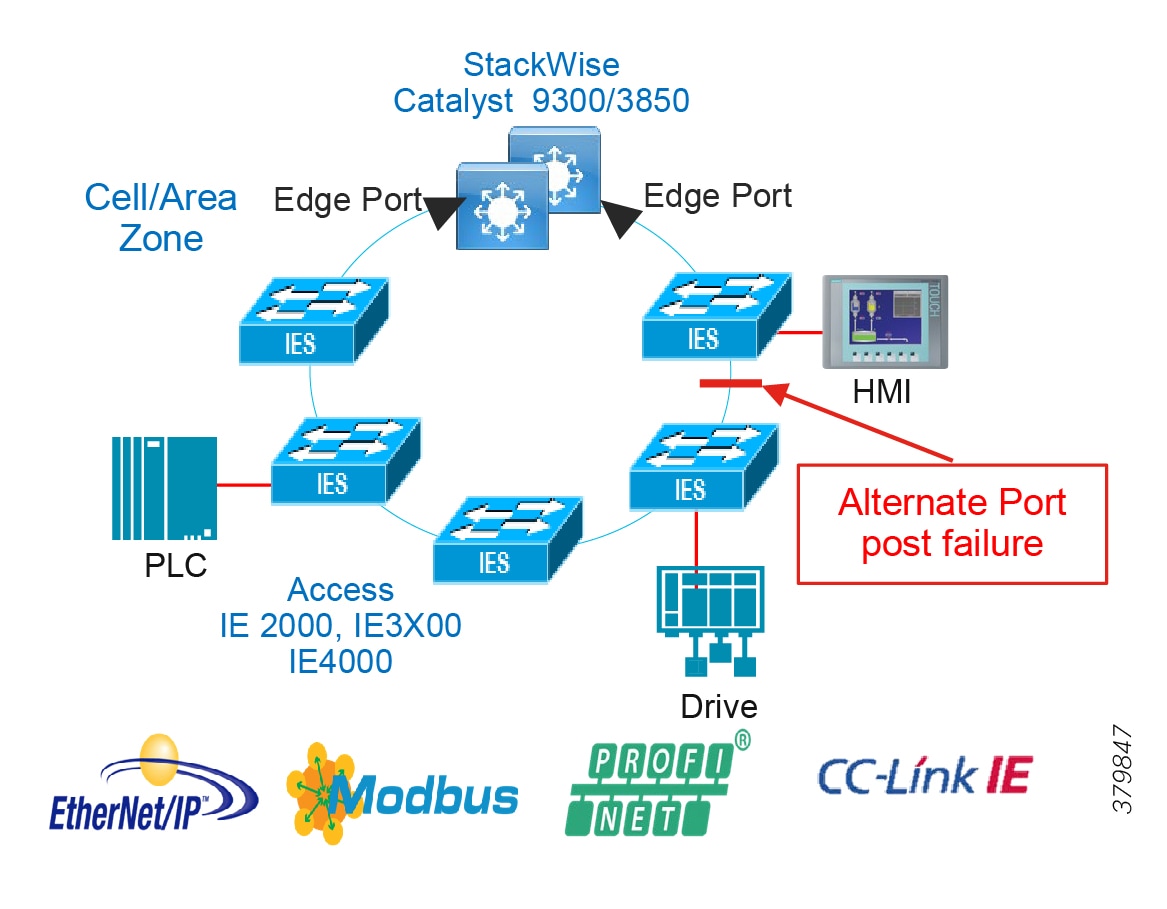

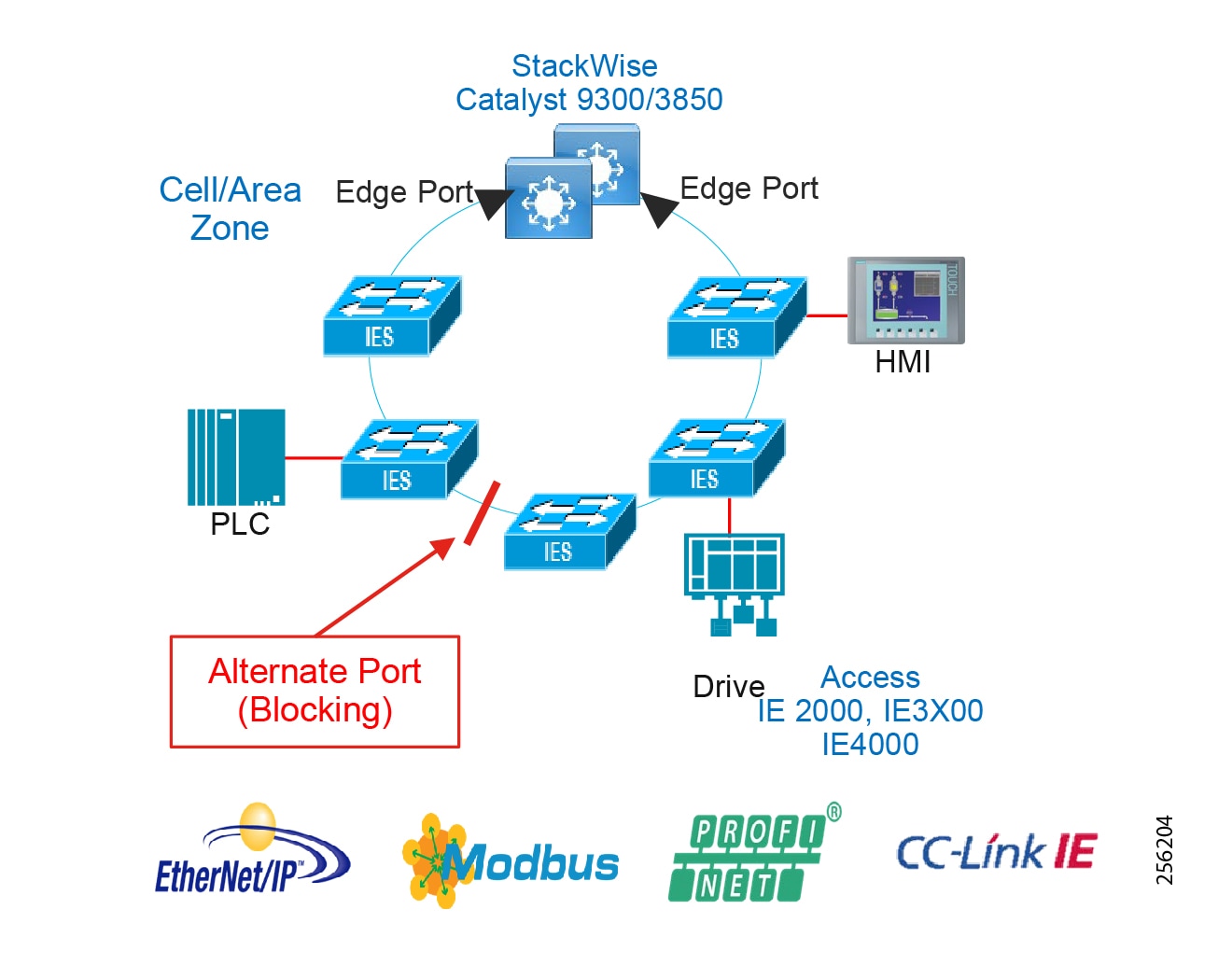

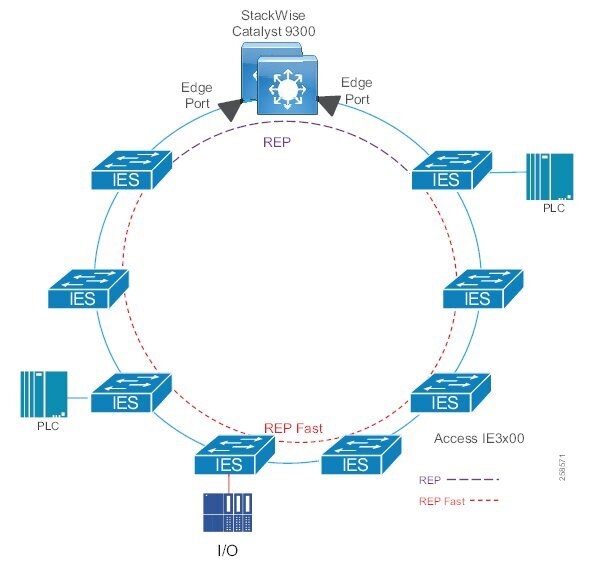

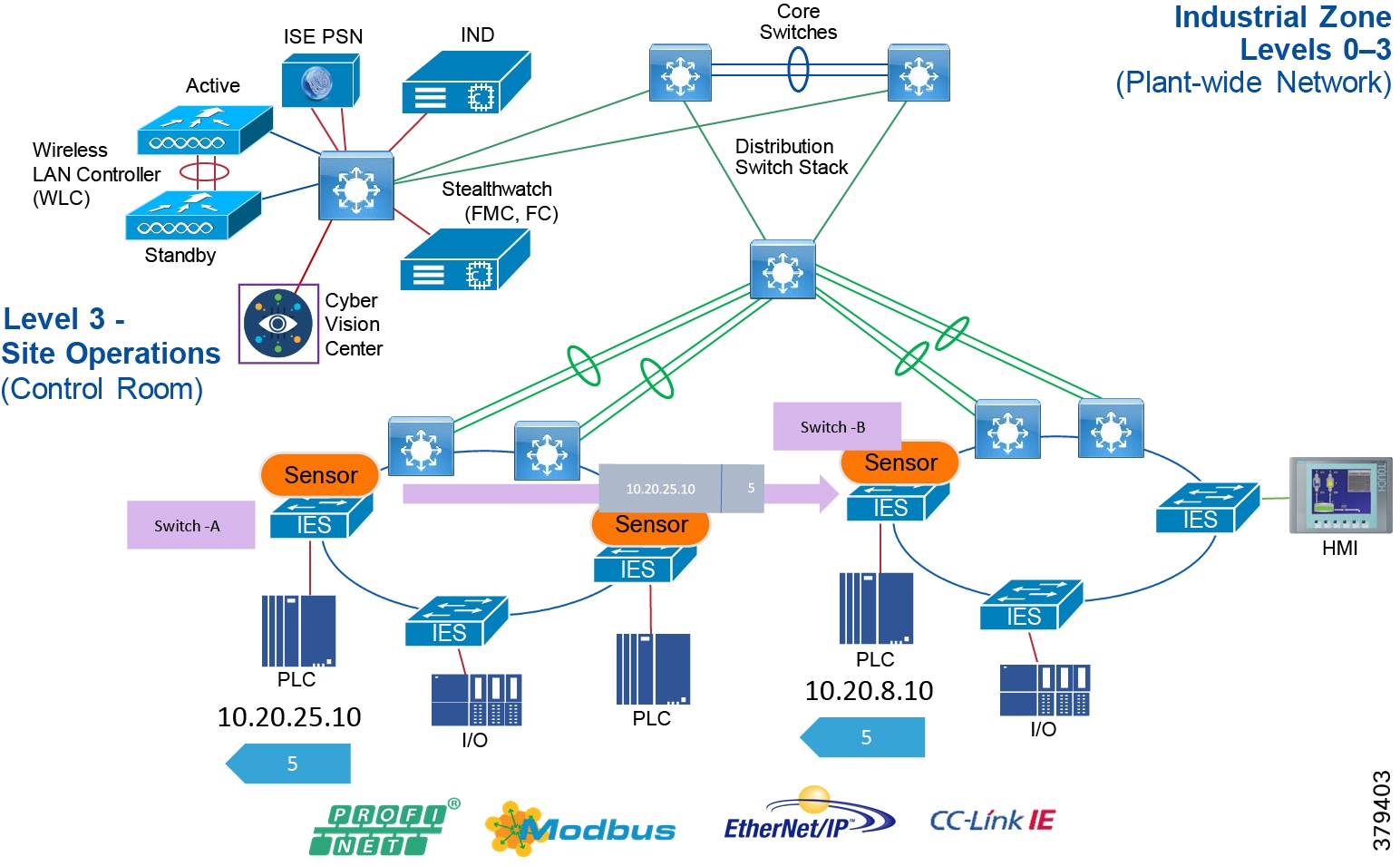

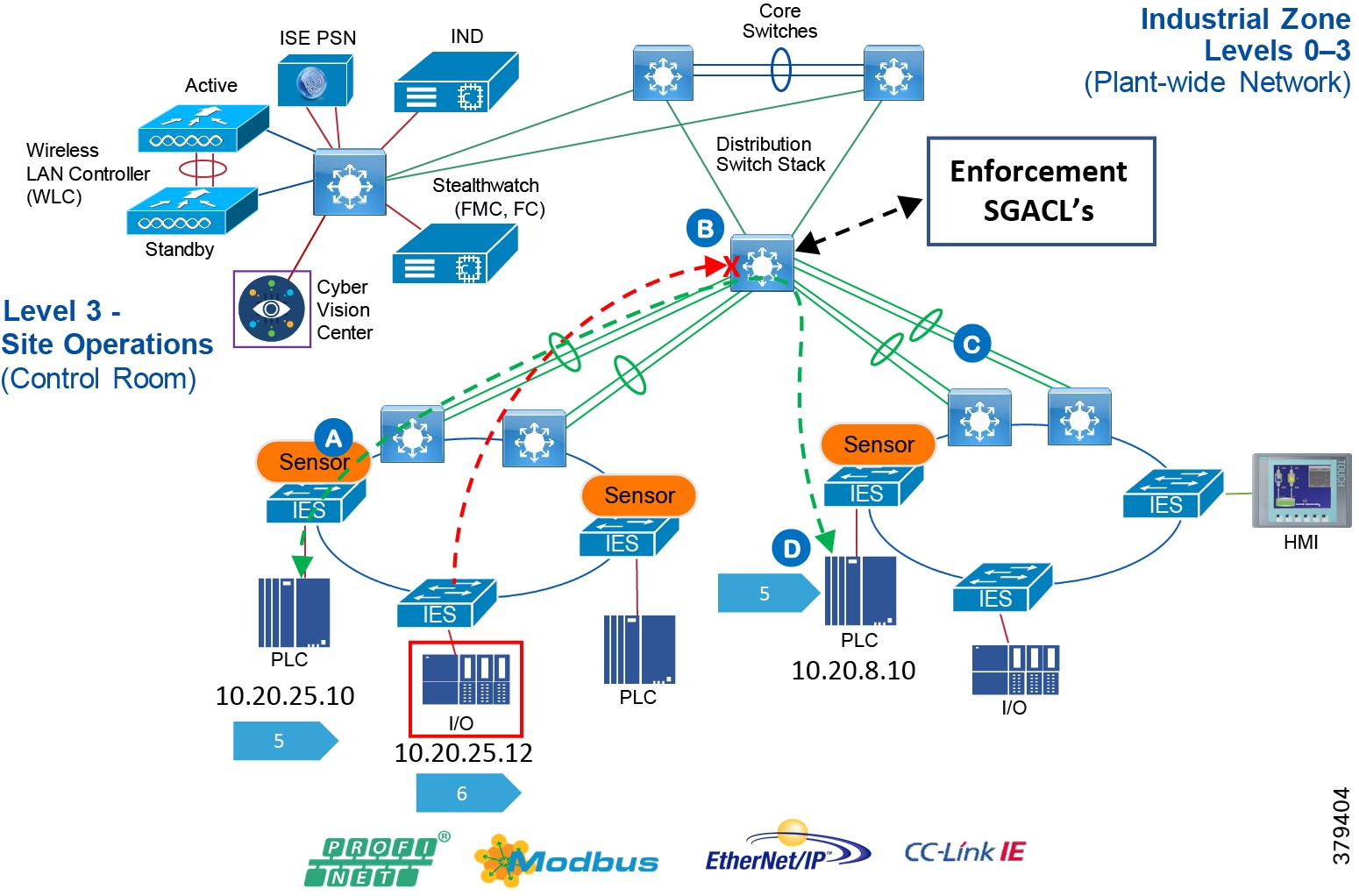

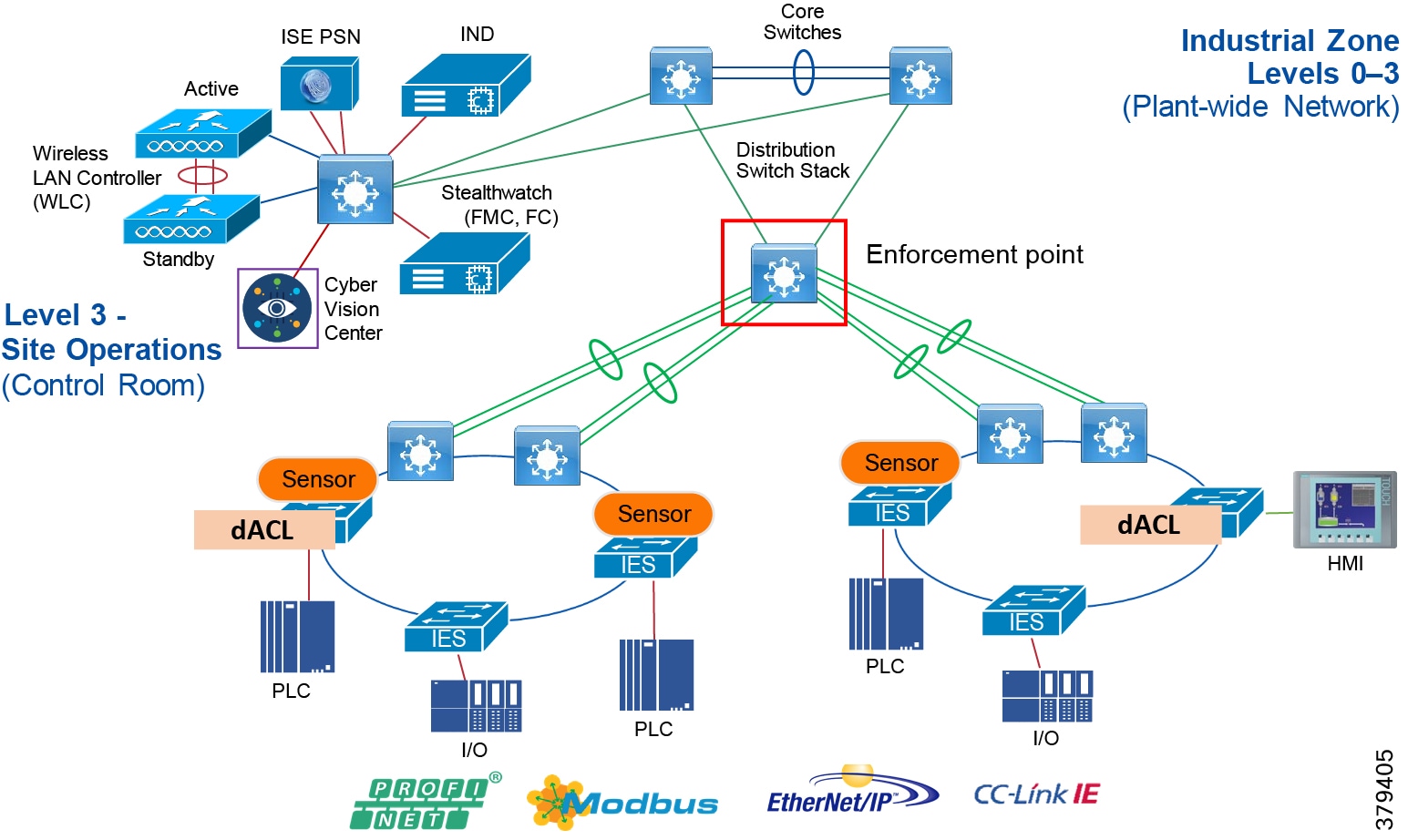

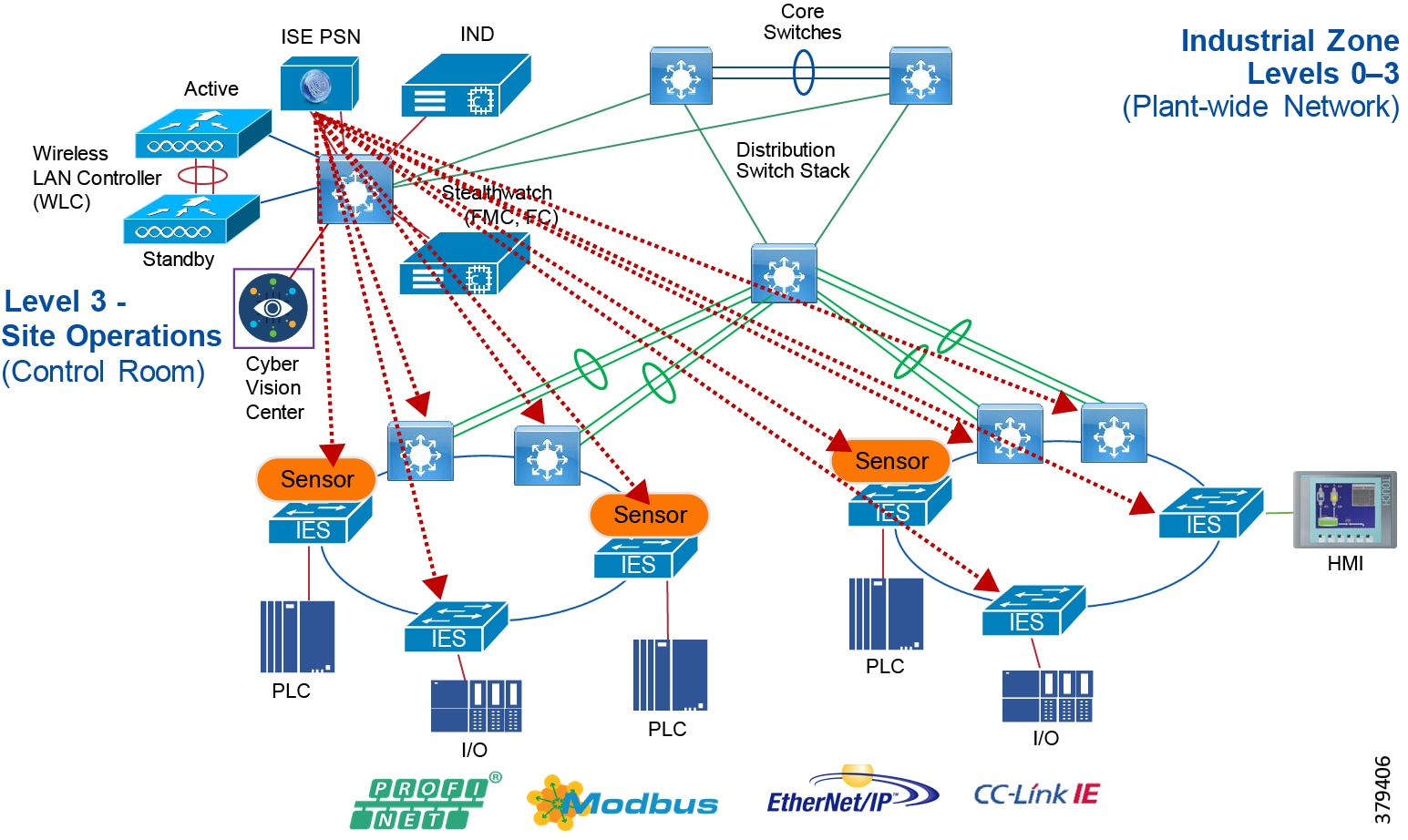
